In Short:

French company Mistral AI has launched two new AI models, Ministral 3B and Ministral 8B, on the first anniversary of its Mistral 7B release. The models are built for on-device and edge use, where they can run locally to keep data private. Mistral reports strong results in knowledge, commonsense reasoning, and function calling, and positions the models for applications ranging from on-device translation to autonomous robotics. They can also act as fast intermediaries alongside larger models such as Mistral Large.

Mistral AI Launches New Models

French company Mistral AI has announced the release of its latest models, Ministral 3B and Ministral 8B, coinciding with the first anniversary of the Mistral 7B launch. The company describes the two models, which it collectively calls “les Ministraux,” as state-of-the-art models tailored for on-device computing and edge applications, and says they set a new standard in the sub-10-billion-parameter category.

Features of Ministral 3B and 8B

According to Mistral, Ministral 3B and Ministral 8B deliver improved performance in knowledge, commonsense reasoning, and function calling. They can be used as-is or fine-tuned for a wide range of applications, including orchestrating agentic workflows and building specialized task handlers.

Both models support a context length of up to 128k tokens (currently limited to 32k on vLLM), which lets them work with long inputs. In addition, Ministral 8B uses an interleaved sliding-window attention pattern, which the company said on Wednesday enables faster and more memory-efficient inference.
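To illustrate why sliding-window attention saves memory, here is a minimal sketch under stated assumptions. In sliding-window attention, each token attends only to itself and a fixed number of preceding tokens instead of the whole sequence; an interleaved pattern mixes such layers with full-attention layers. The window size, the layer schedule, and the helper names (`causal_mask`, `interleaved_layer_windows`, `kv_cache_entries`) are hypothetical choices for illustration. The article does not say how Ministral 8B actually arranges its layers.

```python
from typing import List, Optional


def causal_mask(seq_len: int, window: Optional[int] = None) -> List[List[bool]]:
    """Return mask[i][j] == True if query position i may attend to key position j.

    With window=None this is ordinary full causal attention. With an integer
    window, each token sees only itself and the previous (window - 1) tokens.
    """
    mask = []
    for i in range(seq_len):
        row = []
        for j in range(seq_len):
            allowed = j <= i  # causal: never attend to future tokens
            if window is not None:
                allowed = allowed and (i - j) < window  # drop keys outside the window
            row.append(allowed)
        mask.append(row)
    return mask


def interleaved_layer_windows(num_layers: int, window: int, full_every: int) -> List[Optional[int]]:
    """Hypothetical schedule: every `full_every`-th layer uses full attention (None),
    all other layers use a sliding window of size `window`."""
    return [None if (layer + 1) % full_every == 0 else window for layer in range(num_layers)]


def kv_cache_entries(seq_len: int, schedule: List[Optional[int]]) -> int:
    """Count cached key/value positions across layers for a sequence of seq_len tokens.

    A full-attention layer must keep every past position; a sliding-window layer
    only needs the most recent `window` positions.
    """
    return sum(seq_len if w is None else min(seq_len, w) for w in schedule)


if __name__ == "__main__":
    schedule = interleaved_layer_windows(num_layers=4, window=3, full_every=2)
    print(schedule)  # [3, None, 3, None]
    print(kv_cache_entries(8, schedule))  # 22
    print(kv_cache_entries(8, [None] * 4))  # 32, if every layer used full attention
```

With 8 tokens, a window of 3, and four layers alternating between sliding and full attention, the cache holds 22 key/value entries instead of 32. The gap grows with sequence length, since sliding-window layers stop growing once the window is full. Keeping some full-attention layers in the mix is one way such a design can still propagate information across the whole context.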

Privacy-Centric Applications

Because they are designed for local, privacy-first inference in critical applications, the models are particularly well suited to on-device translation, offline smart assistants, local analytics, and autonomous robotics.

The company stated, “Les Ministraux were built to provide a compute-efficient and low-latency solution for these scenarios. From independent hobbyists to global manufacturing teams, les Ministraux deliver for a wide variety of use cases.”

Intermediary Role with Larger Models

Moreover, when deployed alongside larger models such as Mistral Large, les Ministraux can act as efficient intermediaries for function calling in multi-step workflows, handling input parsing and task routing with low latency.

The company added, “They can be fine-tuned to handle input parsing, task routing, and calling APIs based on user intent across multiple contexts with extremely low latency and cost.”

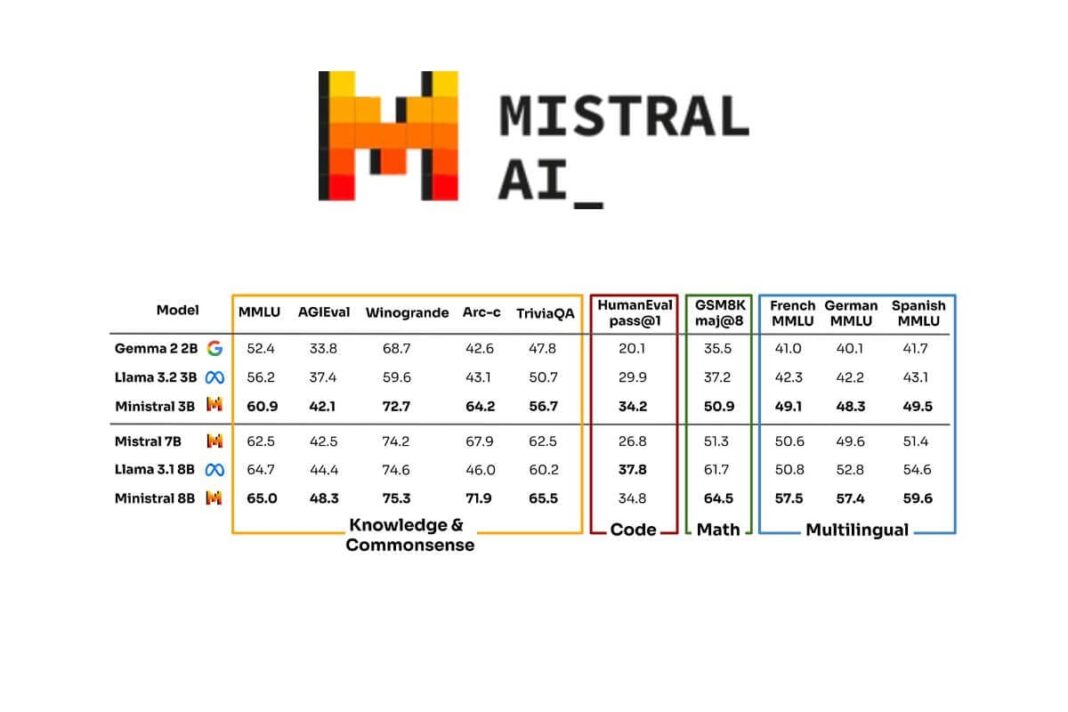

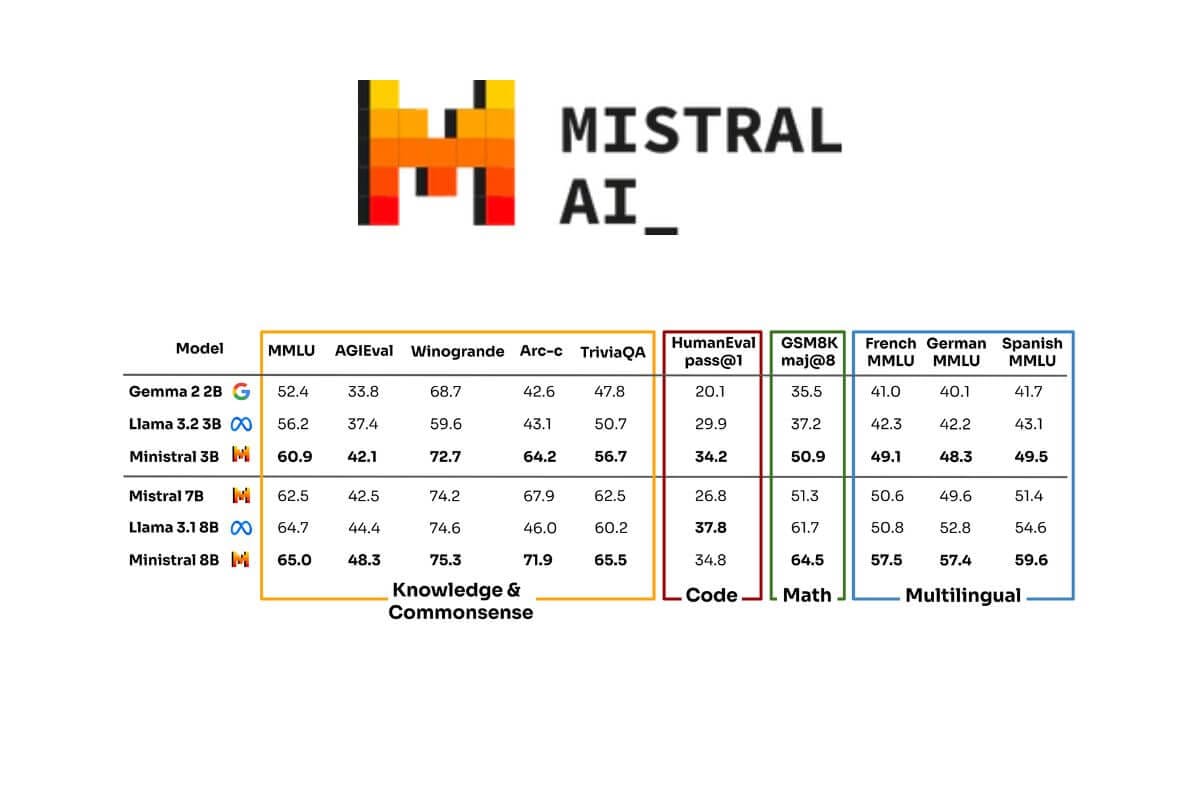

According to benchmarks published by Mistral, les Ministraux consistently outperform comparable models across a range of tasks. The company notes that it re-evaluated all models with its own internal evaluation framework to ensure a fair comparison.