In Short:

This week, a new book titled “Machine Learning Q and AI” was released by No Starch Press. The book covers 30 concepts that were slightly out of scope for the author’s previous books and courses, and this post shares an excerpt on the different ways to use and finetune pretrained large language models (LLMs). The methods discussed include a feature-based approach, in-context prompting, and updating a subset of the model parameters. The book aims to be a useful resource for those preparing for machine learning interviews.

New Book Release and AI Research Update

This week has been full of developments, including exciting new AI research that I’ll discuss in my usual end-of-month write-up. I am also happy to announce the release of my new book, Machine Learning Q and AI, published by No Starch Press.

If you’ve been searching for a resource following an introductory machine learning course, this might be the one. I’m covering 30 concepts that were slightly out of scope for the previous books and courses I’ve taught, and I’ve compiled them here in a concise question-and-answer format (including exercises). I believe it will also serve as a useful companion for preparing for machine learning interviews.

Excerpt from the Book

Since the different ways to use and finetune pretrained large language models are currently among the most frequently discussed topics, I wanted to share an excerpt from the book in the hope that it is useful for your current projects. Happy reading!

Ways to Use and Finetune Pretrained Large Language Models (LLMs)

The three most common ways to use and finetune pretrained LLMs are a feature-based approach, in-context prompting, and updating a subset of the model parameters.

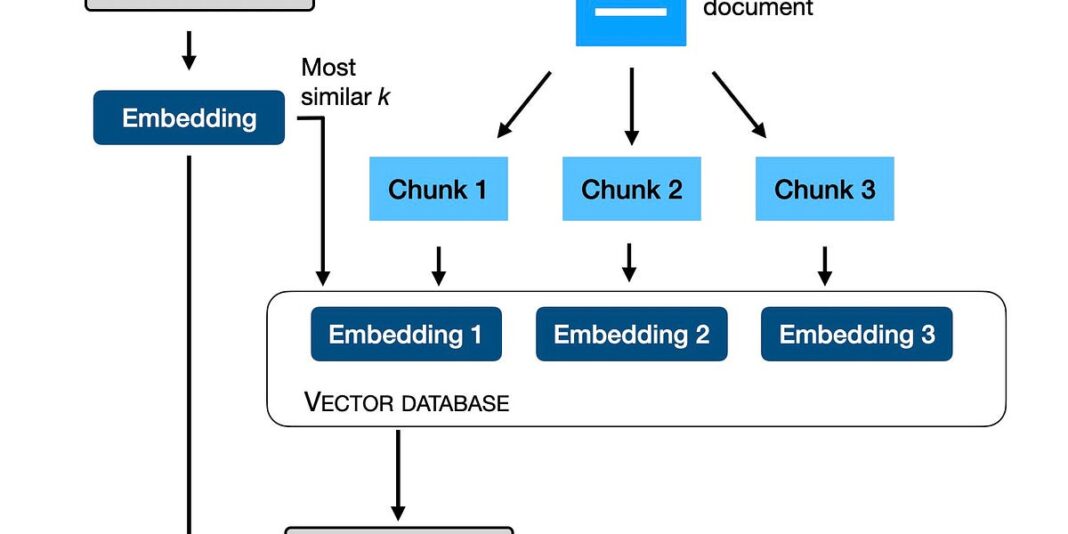

The Feature-Based Approach

In the feature-based approach, we load the pretrained model and use it as a frozen feature extractor, without updating any of its parameters. We compute embeddings for the training data with this model and then train a downstream model on these embeddings. The downstream model can be any model, but linear classifiers typically perform best, likely because pretrained transformers such as BERT and GPT extract high-quality, informative features from the input data.

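Linear classifiers such as logistic regression also tend to have strong regularization properties, which help prevent overfitting in the high-dimensional feature spaces produced by pretrained transformers. The approach is computationally efficient since it doesn’t require updating the transformer model at all, and because the embeddings never change, they can be precomputed once and reused across training epochs.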
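To make the feature-based approach concrete, here is a minimal, self-contained sketch in plain Python. It assumes a hypothetical `FrozenFeatureExtractor` (a fixed random projection followed by tanh) as a stand-in for a pretrained LLM such as BERT or GPT, plus a made-up two-class toy dataset; in a real setup the embeddings would come from the model’s hidden states. What it illustrates is that only the downstream logistic regression classifier is trained, while the extractor’s weights are never modified.

```python
import math
import random


class FrozenFeatureExtractor:
    """Hypothetical stand-in for a pretrained LLM: a fixed random projection plus tanh."""

    def __init__(self, in_dim, out_dim, seed=0):
        rng = random.Random(seed)
        self.weights = [[rng.gauss(0.0, 1.0) for _ in range(in_dim)] for _ in range(out_dim)]

    def embed(self, x):
        # Inference only: the weights are read but never modified.
        return [math.tanh(sum(w * xi for w, xi in zip(row, x))) for row in self.weights]


def train_logistic_regression(features, labels, lr=0.1, epochs=200, l2=1e-3):
    """Fit a linear classifier (L2-regularized logistic regression) by gradient descent."""
    dim, n = len(features[0]), len(features)
    w, b = [0.0] * dim, 0.0
    for _ in range(epochs):
        grad_w = [l2 * wj for wj in w]  # gradient of the L2 penalty (l2 / 2) * ||w||^2
        grad_b = 0.0
        for x, y in zip(features, labels):
            z = sum(wj * xj for wj, xj in zip(w, x)) + b
            err = 1.0 / (1.0 + math.exp(-z)) - y  # sigmoid(z) minus the label
            for j in range(dim):
                grad_w[j] += err * x[j] / n
            grad_b += err / n
        w = [wj - lr * gj for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b


def predict(w, b, x):
    return int(sum(wj * xj for wj, xj in zip(w, x)) + b > 0)


def make_toy_data(n, seed=42):
    """Hypothetical two-class data: points scattered around (0.6, 0.6) and (-0.6, -0.6)."""
    rng = random.Random(seed)
    xs, ys = [], []
    for i in range(n):
        y = i % 2
        center = 0.6 if y == 1 else -0.6
        xs.append([center + rng.gauss(0.0, 0.2), center + rng.gauss(0.0, 0.2)])
        ys.append(y)
    return xs, ys


extractor = FrozenFeatureExtractor(in_dim=2, out_dim=8)
weights_before = [row[:] for row in extractor.weights]

xs, ys = make_toy_data(100)
# The extractor never changes, so embeddings are computed once and reused every epoch.
features = [extractor.embed(x) for x in xs]
w, b = train_logistic_regression(features, ys)

accuracy = sum(predict(w, b, f) == y for f, y in zip(features, ys)) / len(ys)
print(f"training accuracy: {accuracy:.2f}")
print("extractor unchanged:", extractor.weights == weights_before)
```

Precomputing `features` outside the training loop is the practical payoff of keeping the pretrained model frozen: the expensive forward pass through the large model happens only once per example.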

Finetuning

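Finetuning can be done by updating only the output layers (finetuning I) or by updating all layers (finetuning II). In finetuning I, we add new output layers to the LLM and train only these, keeping the pretrained parameters frozen as in the feature-based approach. In finetuning II, we update all layers, including the pretrained ones, via backpropagation.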

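These methods offer different trade-offs between computational efficiency and predictive performance: finetuning I trains only a small number of parameters and is therefore relatively cheap, while finetuning II is more expensive but typically yields better modeling performance.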
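The difference between the two finetuning variants comes down to which parameters receive gradient updates. The sketch below assumes a hypothetical model with made-up layer sizes (they do not describe any real LLM) and simply counts the trainable parameters under each strategy. In a framework such as PyTorch, freezing is typically done by setting `requires_grad` to `False` on the pretrained parameters; the `Layer` class and `count_trainable_params` helper here are illustrative only.

```python
from dataclasses import dataclass


@dataclass
class Layer:
    name: str
    num_params: int
    pretrained: bool  # False for output layers newly added for the downstream task


def count_trainable_params(layers, mode):
    """Count the parameters that receive gradient updates under each strategy."""
    if mode == "finetune_i":
        # Pretrained layers stay frozen; only the newly added output layers are trained.
        return sum(layer.num_params for layer in layers if not layer.pretrained)
    if mode == "finetune_ii":
        # Every layer, pretrained or new, is updated via backpropagation.
        return sum(layer.num_params for layer in layers)
    raise ValueError(f"unknown mode: {mode!r}")


# Hypothetical layer sizes chosen for illustration only.
model = [Layer("embeddings", 40_000_000, pretrained=True)]
model += [Layer(f"transformer_block_{i}", 7_000_000, pretrained=True) for i in range(12)]
# Assumed hidden size 768 mapped to 2 classes: 768 * 2 weights plus 2 biases.
model.append(Layer("classification_head", 768 * 2 + 2, pretrained=False))

total = count_trainable_params(model, "finetune_ii")
for mode in ("finetune_i", "finetune_ii"):
    n = count_trainable_params(model, mode)
    print(f"{mode}: {n:,} trainable parameters ({n / total:.4%} of the model)")
```

With these illustrative numbers, finetuning I updates well under 0.01 percent of the parameters, which is why it is far cheaper per training step than finetuning II, at the cost of leaving the pretrained representations unchanged.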

In-Context Learning

LLMs like GPT-2 and GPT-3 popularized in-context learning, in which examples of a task are provided within the input prompt itself, enabling the model to generate appropriate responses without any further updates to its parameters. In-context learning is especially useful when labeled data for finetuning is limited or unavailable.
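In-context learning needs no training code at all; the adaptation happens entirely in the prompt. The sketch below builds a few-shot prompt for a hypothetical sentiment-classification task. The helper name, the prompt template, and the examples are illustrative assumptions, and the resulting string would be sent unchanged to whichever LLM you are using. Passing an empty example list yields a zero-shot prompt.

```python
def build_few_shot_prompt(examples, query, instruction="Classify the sentiment of each review."):
    """Assemble an in-context learning prompt: instruction, labeled examples, then the query."""
    lines = [instruction, ""]
    for text, label in examples:
        lines.append(f"Review: {text}")
        lines.append(f"Sentiment: {label}")
        lines.append("")
    # The query ends with an empty label slot that the model is expected to complete.
    lines.append(f"Review: {query}")
    lines.append("Sentiment:")
    return "\n".join(lines)


examples = [
    ("The plot was gripping from start to finish.", "positive"),
    ("I walked out halfway through.", "negative"),
]
prompt = build_few_shot_prompt(examples, "A charming, well-acted film.")
zero_shot_prompt = build_few_shot_prompt([], "A charming, well-acted film.")
print(prompt)
```

Because the model’s parameters are never touched, switching to a different task only requires changing the instruction and the examples in the prompt.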

Conclusion

As research on adapting pretrained LLMs progresses, we can expect further advances in applying these models efficiently to a wide range of tasks and domains.

If you are interested in exploring more topics related to machine learning and AI, you can find my book on the publisher’s website, Amazon, and other bookstores.