In Short:

The reasoning abilities of large language models (LLMs) like GPT-4 are often overestimated. MIT researchers found that these models struggle with unfamiliar tasks and scenarios, showing limited generalizability. The study points to a need for more adaptable models and more diverse testing. Understanding how the models arrive at their outputs remains a challenge, and more research is needed to improve their capabilities. The study was presented at the North American Chapter of the Association for Computational Linguistics (NAACL).

When it comes to artificial intelligence, there is often more than meets the eye. Large language models (LLMs) remain hard to understand because of their immense size, intricate training methods, unpredictable behavior, and limited interpretability.

Researchers at MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) recently examined how LLMs perform on variations of different tasks. The study sheds light on the relationship between memorization and reasoning skills, and finds that the models’ reasoning abilities are frequently overestimated.

Examining Default Tasks vs. Counterfactual Scenarios

The study compared the performance of LLMs on “default tasks” (the common tasks models are trained and tested on) with their performance on “counterfactual scenarios” (hypothetical situations that deviate from those default conditions). Rather than creating entirely new tasks, the researchers tweaked existing ones to push the models outside their comfort zones. They used a range of datasets and benchmarks, each tailored to a different aspect of the models’ capabilities.

Challenges Beyond the Norm

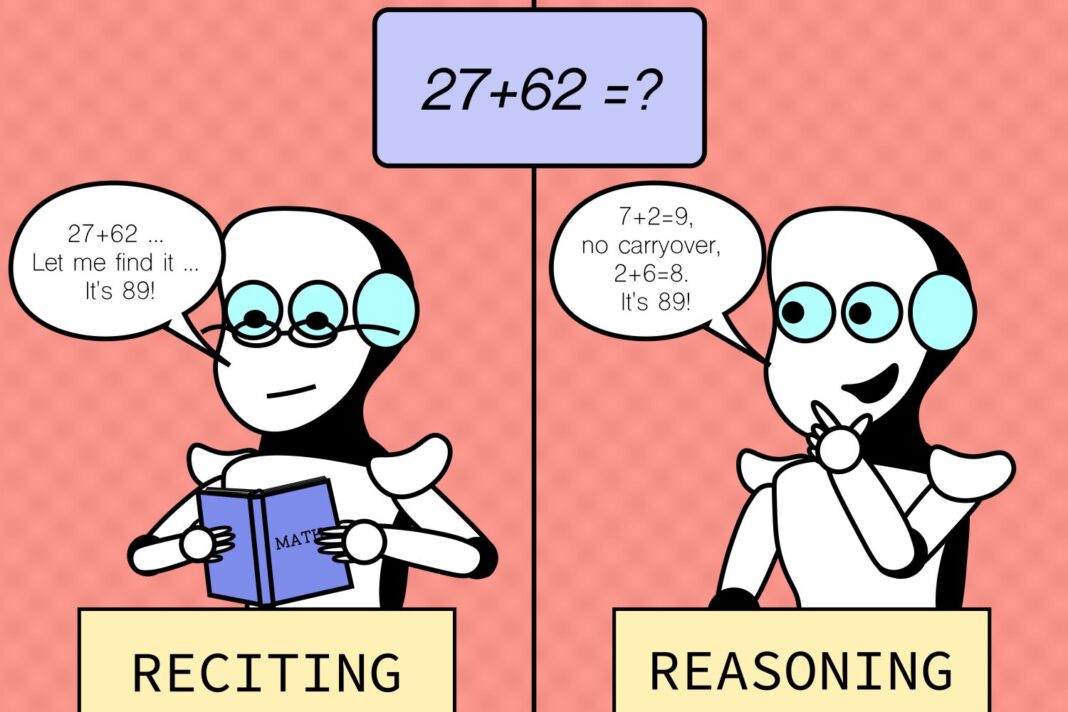

Results showed that while LLMs excel in familiar scenarios, they struggle with unfamiliar variants of the same tasks. This held across tasks ranging from arithmetic to chess, where the models showed limited ability to generalize to new conditions. Their high performance on standard tasks often stemmed from memorization rather than general task ability.
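The arithmetic case gives a concrete feel for the split between default and counterfactual conditions. The sketch below is only an illustration, not the study’s evaluation code: it assumes the default task is addition in base 10 and the counterfactual variant is the same addition in base 9, and the helper names (`to_base`, `add_in_base`, `accuracy`) and the two sample problems are hypothetical.

```python
def to_base(n: int, base: int) -> str:
    """Render a non-negative integer as a digit string in `base` (2 to 10)."""
    if n == 0:
        return "0"
    digits = []
    while n > 0:
        digits.append(str(n % base))
        n //= base
    return "".join(reversed(digits))


def add_in_base(a: str, b: str, base: int) -> str:
    """Add two digit strings that are both written in `base`."""
    return to_base(int(a, base) + int(b, base), base)


def accuracy(answers, problems, base: int) -> float:
    """Fraction of answers that match the correct sum in `base`."""
    correct = sum(
        ans == add_in_base(a, b, base)
        for ans, (a, b) in zip(answers, problems)
    )
    return correct / len(problems)


# Hypothetical problems whose digits are valid in both base 9 and base 10.
problems = [("27", "62"), ("15", "14")]

# A responder that has only "memorized" base-10 addition.
default_answers = [add_in_base(a, b, 10) for a, b in problems]

print(accuracy(default_answers, problems, 10))  # 1.0 under default conditions
print(accuracy(default_answers, problems, 9))   # 0.0 under the base-9 variant
```

The procedure for adding is the same in both bases; only the carrying rule changes. A solver that has learned the general procedure should carry over to the new base, while one that has memorized base-10 patterns will not, which is the kind of gap the default versus counterfactual comparison is designed to expose.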

Looking Towards the Future

Lead researcher Zhaofeng Wu emphasized the importance of making LLMs more adaptable so they can be applied more broadly. While the study provided valuable insights, further research is needed to cover a wider range of tasks and conditions, especially in real-world applications. Improving interpretability and understanding the models’ decision-making processes are also key areas of focus for future studies.

Insights and Challenges Ahead

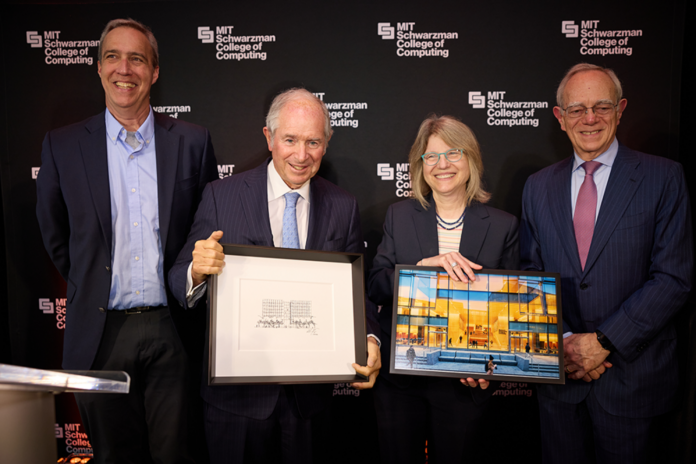

The research, supported by the MIT–IBM Watson AI Lab, the MIT Quest for Intelligence, and the National Science Foundation, was presented at the North American Chapter of the Association for Computational Linguistics (NAACL). Despite the progress made in understanding LLMs, questions remain about their ability to generalize to unseen tasks. The study’s findings highlight the limitations of current models and can inform the development of more robust and adaptable AI systems.