In Short:

The article discusses advancements in training large language models (LLMs), focusing on four recent model families: Alibaba’s Qwen 2, Apple’s AFM, Google’s Gemma 2, and Meta’s Llama 3.1. Each uses its own pre-training and post-training techniques, with a shared emphasis on data quality and multi-stage training. The methodologies differ, but rejection sampling is a common ingredient, and all four models are competitive on benchmarks such as MMLU.

Advancements in Large Language Models

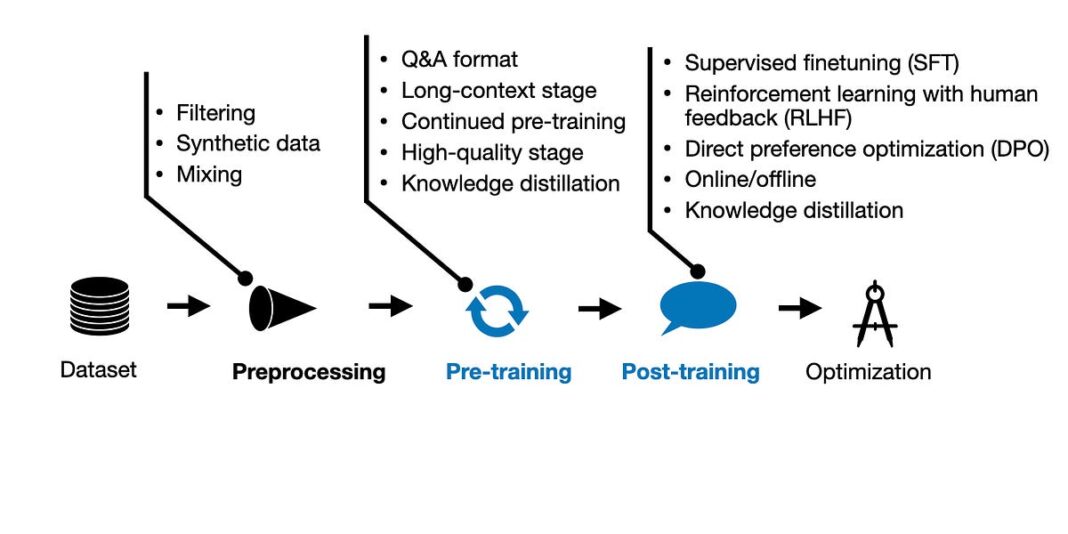

The development of large language models (LLMs) has come a long way from the initial GPT models to the sophisticated open-weight LLMs available today. Initially, training consisted only of pre-training; it has since expanded to include post-training as well. The post-training phase typically involves supervised instruction fine-tuning and alignment, an approach popularized by ChatGPT.

Current Landscape of Training Methodologies

Training methodologies have evolved since the introduction of ChatGPT. This article provides a comprehensive review of the latest advancements in both pre-training and post-training methodologies, especially those that have emerged in recent months.

A vast number of LLM papers are published every month, proposing novel techniques and approaches. However, examining the pre-training and post-training pipelines of the latest state-of-the-art models shows what actually works in practice. Recently, four major LLMs have been released, each accompanied by a relatively detailed technical report:

- Alibaba’s Qwen 2

- Apple Intelligence Foundation Language Models (AFM)

- Google’s Gemma 2

- Meta AI’s Llama 3.1

Overview of Key Models

This article focuses on the pre-training and post-training pipelines of each model. The models are covered in order of their publication dates on arXiv.org, which coincidentally matches their alphabetical order.

The development of LLMs remains a fervent interest of mine, and I invite readers to support my work by purchasing my books on machine learning. Your reviews on platforms like Amazon would be greatly appreciated.

Qwen 2: A Competitive LLM

Beginning with Qwen 2, this model family is competitive with the leading LLMs, even though it is less popular than the open-weight models from Meta AI, Microsoft, and Google.

Qwen 2 comes in five variants ranging from 0.5 billion to 72 billion parameters, including a Mixture-of-Experts (MoE) model with 57 billion parameters, of which 14 billion are active at a time. The family has good multilingual capabilities, supporting up to 30 languages, and uses a large vocabulary of 151,642 tokens.
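The gap between total and active parameters comes from routing: for each token, an MoE layer sends the input to only a few experts. The following is a minimal, generic top-k routing sketch; it is not Qwen 2's actual router, and the function name `top_k_route`, the logits, and `k` are all illustrative assumptions. Only the 57 billion and 14 billion figures come from the text.

```python
import math


def top_k_route(router_logits, k):
    """Return {expert_index: weight} for the k highest-scoring experts.

    Generic sketch of top-k gating; not Qwen 2's actual router.
    """
    if not 0 < k <= len(router_logits):
        raise ValueError("k must be between 1 and the number of experts")
    # Indices of the k experts with the largest router scores.
    top = sorted(range(len(router_logits)),
                 key=lambda i: router_logits[i], reverse=True)[:k]
    # Softmax over the selected experts only, so their weights sum to 1.
    m = max(router_logits[i] for i in top)
    exps = {i: math.exp(router_logits[i] - m) for i in top}
    total = sum(exps.values())
    return {i: e / total for i, e in exps.items()}


# Made-up router scores for 4 experts; only 2 of them process this token.
routing = top_k_route([0.1, 2.0, -1.0, 1.5], k=2)
print(routing)

# Share of parameters in use at a time, from the figures quoted above.
active_fraction = 14 / 57
print(f"{active_fraction:.1%} of parameters active")
```

Because only the selected experts run, compute per token scales with the active parameters rather than with the total parameter count.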

The larger models were trained on 7 trillion tokens. Notably, the 0.5 billion parameter model was trained on even more data, 12 trillion tokens. Beyond raw token counts, the emphasis was on improving the data filtering pipeline and the data mix.
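To put these token budgets in perspective, the short sketch below computes a tokens-per-parameter ratio from the figures quoted above. The labels and variable names are illustrative; the only inputs are the parameter and token counts mentioned in this section.

```python
# Tokens seen per parameter, using only figures quoted in this section.
# Labels and names are illustrative, not taken from the Qwen 2 report.
TRILLION = 1e12
BILLION = 1e9

models = {
    # name: (parameter count, training tokens)
    "Qwen 2 0.5B": (0.5 * BILLION, 12 * TRILLION),
    "Qwen 2 72B": (72 * BILLION, 7 * TRILLION),
}


def tokens_per_parameter(n_params, n_tokens):
    return n_tokens / n_params


ratios = {name: tokens_per_parameter(p, t) for name, (p, t) in models.items()}

for name, ratio in ratios.items():
    print(f"{name}: {ratio:,.0f} tokens per parameter")
```

The smallest model sees far more tokens per parameter than the largest one, which shows how heavily small models can be trained relative to their size.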

For post-training, Qwen 2 used a two-phase approach: supervised instruction fine-tuning (SFT) followed by direct preference optimization (DPO).
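For readers unfamiliar with DPO, here is a minimal sketch of the standard DPO loss for a single preference pair. It illustrates the general objective only, not Qwen 2's training code; the function name, the `beta` value, and the log-probabilities are made-up assumptions.

```python
import math


def dpo_loss(policy_chosen_logp, policy_rejected_logp,
             ref_chosen_logp, ref_rejected_logp, beta=0.1):
    """Standard DPO loss for one (chosen, rejected) response pair.

    Inputs are summed token log-probabilities of each response under the
    policy being trained and under a frozen reference model.
    """
    chosen_logratio = policy_chosen_logp - ref_chosen_logp
    rejected_logratio = policy_rejected_logp - ref_rejected_logp
    margin = beta * (chosen_logratio - rejected_logratio)
    # -log(sigmoid(margin)), written as a numerically stable softplus(-margin).
    return max(-margin, 0.0) + math.log1p(math.exp(-abs(margin)))


# Illustrative numbers only (not taken from any model).
loss_at_start = dpo_loss(-12.0, -15.0, -12.0, -15.0)  # policy == reference
loss_improved = dpo_loss(-10.0, -18.0, -12.0, -15.0)  # policy favors chosen
print(loss_at_start, loss_improved)
```

The loss falls as the policy raises the likelihood of the preferred response relative to the reference model and lowers that of the rejected one, which is why DPO needs no separately trained reward model.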

Apple’s AFM Models

The technical report on the Apple Intelligence Foundation Language Models (AFM) introduces two models intended for use on Apple devices. Both are dense models: one is designed to run on-device and the other is a server model.

Pre-training for the AFM models followed a three-stage process that prioritizes data quality over quantity: core pre-training, continued pre-training in which web-crawl data is down-weighted, and a final context-lengthening stage.
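Down-weighting a data source can be pictured as rescaling its share of the sampling mixture and renormalizing. The sketch below shows that idea under assumed numbers; the source names, the mixture weights, and the 0.5 factor are hypothetical and do not come from the AFM report.

```python
def downweight(mixture, source, factor):
    """Scale one source's sampling weight by `factor`, then renormalize."""
    if source not in mixture:
        raise KeyError(source)
    if not 0 < factor <= 1:
        raise ValueError("factor must be in (0, 1]")
    scaled = {name: (w * factor if name == source else w)
              for name, w in mixture.items()}
    total = sum(scaled.values())
    return {name: w / total for name, w in scaled.items()}


# Hypothetical mixture for an earlier stage (weights sum to 1).
core_mixture = {"web_crawl": 0.70, "code": 0.15, "math": 0.15}

# Hypothetical later stage: halve the web-crawl share.
continued_mixture = downweight(core_mixture, "web_crawl", 0.5)

for name, weight in continued_mixture.items():
    print(f"{name}: {weight:.3f}")
```

After renormalization, the other sources automatically take up a larger share of each batch, which is how down-weighting web-crawl data shifts training toward higher-quality sources.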

Google’s Gemma 2

Moving on to Google’s Gemma 2, this set of models aims to improve LLM capabilities without requiring a substantial increase in training dataset size. The models use knowledge distillation in both pre-training and post-training, which yields relatively small yet capable LLMs.
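In knowledge distillation, a smaller student model is trained to match a larger teacher's full next-token distribution rather than only the single correct token. The following is a minimal sketch of such a loss for one token position; it is a generic illustration, not Gemma 2's implementation, and the logits are made up.

```python
import math


def log_softmax(logits):
    # Subtract the max before exponentiating for numerical stability.
    m = max(logits)
    log_total = m + math.log(sum(math.exp(z - m) for z in logits))
    return [z - log_total for z in logits]


def distillation_loss(teacher_logits, student_logits):
    """Cross-entropy of the student against the teacher's distribution."""
    teacher_probs = [math.exp(lp) for lp in log_softmax(teacher_logits)]
    student_logps = log_softmax(student_logits)
    return -sum(p * lp for p, lp in zip(teacher_probs, student_logps))


teacher = [2.0, 1.0, 0.1]  # made-up logits over a 3-token vocabulary
matched = distillation_loss(teacher, teacher)
mismatched = distillation_loss(teacher, [0.1, 1.0, 2.0])
print(matched, mismatched)
```

The loss is smallest when the student reproduces the teacher's distribution exactly, so every position provides a signal about all tokens in the vocabulary, not just one.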

Meta’s Llama Models

Meta’s recent Llama 3.1 release, which also brought substantial updates to the earlier variants, focuses on refining established training methods rather than on architectural innovations. Trained on 15.6 trillion tokens, the models use a larger vocabulary and new data-filtering techniques to improve performance.

Insights and Conclusions

A comparison of these four models (Qwen 2, AFM, Gemma 2, and Llama 3.1) reveals diverse approaches to pre-training and post-training. Notably, each model uses a multi-stage pre-training pipeline, which points to a trend of prioritizing data quality alongside quantity.

Although the details differ, all four models are aligned more closely with user preferences during post-training through some form of preference tuning, and rejection sampling is a common ingredient.

Support and Contributions

Ahead of AI is a personal project for which I receive no direct compensation. Your support through book purchases or thoughtful reviews helps sustain it. Thank you for your continued interest and engagement!