In Short:

Researchers from MIT and the MIT-IBM Watson AI Lab have devised a robot navigation method that uses language-based representations rather than visual ones. Because visual observations are converted into text descriptions, a large language model can efficiently generate synthetic training data, and the approach helps bridge the gap between simulated and real-world environments. The approach may lose some information that visual models capture, but combining it with vision-based methods improves an agent's ability to navigate.

Researchers from MIT and the MIT-IBM Watson AI Lab have developed a novel navigation method for AI agents to help robots complete complex tasks using a combination of visual observations and language processing.

Solving Complex Tasks with Language

Navigation tasks have traditionally been handled by multiple hand-crafted machine-learning models, each responsible for a different part of the task, an approach that demands a great deal of human effort and expertise. To address this, the researchers introduced a method that converts visual observations into pieces of language and feeds them into a single large language model, which handles every step of the task.

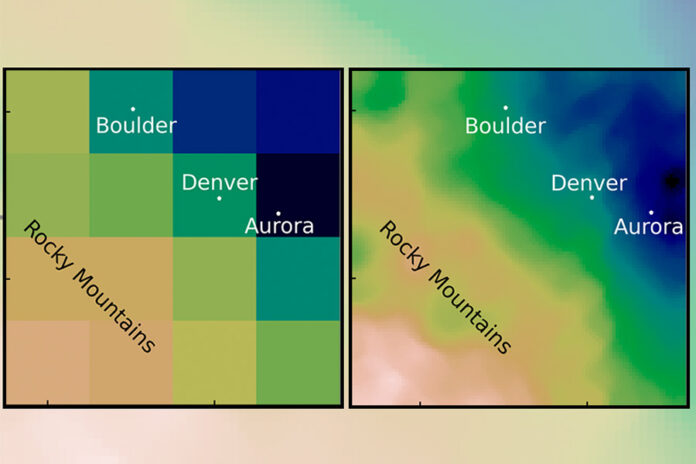

Instead of encoding images as visual feature representations, which can be computationally intensive, the method generates text captions that describe the robot's point of view. When they combined these language-based inputs with visual signals, the researchers observed improved navigation performance.

Lead author Bowen Pan explains, “By purely using language as the perceptual representation, ours is a more straightforward approach. We can generate a human-understandable trajectory for robots.”

Integration of Language Models

The researchers used a large language model to tackle vision-and-language navigation, a task in which a robot must follow natural-language instructions to move through an environment. Given text descriptions of the robot's visual observations, the language model guides the robot through each step of the navigation process.

Templates were designed to present observation information to the model in a standard format, so the model can decide on its next move based on the robot's surroundings. Because each step is expressed in text, this approach also makes it easier to spot and address problems that arise during task execution.
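The article does not describe the authors' actual template, so the following is only a minimal sketch of the general idea, assuming a hypothetical format in which each candidate direction's caption is listed under the instruction and a short text history. `Observation`, `build_prompt`, `choose_action`, and `toy_llm` are all hypothetical names; `toy_llm` is a keyword-matching stand-in for a real large language model, not the model used in the research.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class Observation:
    """One candidate view, already converted to text by a captioning model (hypothetical)."""
    direction: str  # e.g. "left", "right"
    caption: str    # e.g. "an open kitchen with a refrigerator"


def build_prompt(instruction: str, history: List[str], observations: List[Observation]) -> str:
    """Fill a fixed (hypothetical) template so every step looks the same to the model."""
    lines = [f"Instruction: {instruction}"]
    lines.append("History: " + ("; ".join(history) if history else "none"))
    lines.append("Current observations:")
    for i, obs in enumerate(observations):
        lines.append(f"  ({i}) To your {obs.direction}: {obs.caption}")
    lines.append("Reply with the number of the direction to move toward, or 'stop'.")
    return "\n".join(lines)


def choose_action(prompt: str, llm: Callable[[str], str], num_options: int) -> Optional[int]:
    """Ask the model for a decision; return an option index, or None to stop."""
    reply = llm(prompt).strip().lower()
    if reply.isdigit() and int(reply) < num_options:
        return int(reply)
    return None  # "stop" or any reply we cannot parse ends the episode


def toy_llm(prompt: str) -> str:
    """Stand-in for a real LLM: picks the first option whose caption mentions 'kitchen'."""
    for line in prompt.splitlines():
        line = line.strip()
        if line.startswith("(") and "kitchen" in line:
            return line[1:line.index(")")]
    return "stop"


if __name__ == "__main__":
    views = [
        Observation("left", "a hallway with a closed door"),
        Observation("right", "an open kitchen with a refrigerator"),
    ]
    history: List[str] = []
    prompt = build_prompt("Walk to the kitchen.", history, views)
    print(prompt)
    choice = choose_action(prompt, toy_llm, len(views))
    if choice is not None:
        history.append(f"moved {views[choice].direction}")  # text trajectory a human can read
    print(history)  # ['moved right']
```

Because every step is rendered as plain text, the accumulated `history` doubles as a human-readable trajectory, the property Pan highlights. A real system would replace `toy_llm` with a call to an actual language model and the hand-written captions with a captioning model's output.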

Advantages of Language-based Navigation

While the new method may not outperform vision-based techniques, it offers several advantages. Text-based inputs require fewer computational resources, which allows synthetic training data to be generated rapidly. Language also helps bridge the gap between simulation and real-world scenarios, since text descriptions of simulated and real scenes tend to resemble each other more closely than the raw images do. And because each step is expressed in text, it is easier to identify where a task failed and to improve the system accordingly.

Language-based representations are also easier for humans to understand, and the method can be applied to various tasks and environments without significant modification. However, it may miss certain details that vision-based models capture, such as depth information.

Future research will focus on enhancing navigation-oriented captioning and exploring the spatial awareness capabilities of large language models. The research is partially funded by the MIT-IBM Watson AI Lab and aims to advance language-based navigation for robots.