In Short:

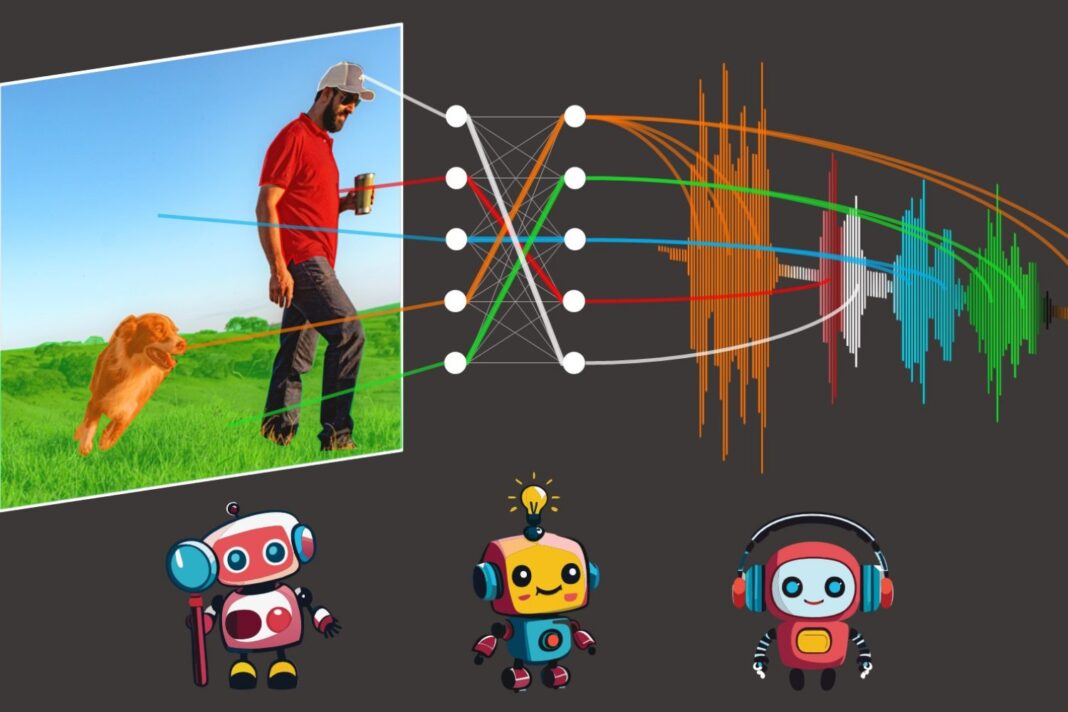

MIT PhD student Mark Hamilton and his team developed DenseAV, a system that learns language from scratch by playing an audio-video matching game. Inspired by how children learn, the system comes to understand language without any text input: it compares pairs of audio and visual signals through contrastive learning. DenseAV outperformed other models at tasks such as identifying objects from their names and sounds, paving the way for new language-learning applications.

MIT PhD Student Creates System to Teach Machines Human Language

Mark Hamilton, an MIT PhD student in electrical engineering and computer science and affiliate of MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL), has developed a system called DenseAV that aims to teach machines human language from scratch. The inspiration for this project came from observing animals communicate, particularly a scene from the movie ‘March of the Penguins’, where Hamilton realized the potential of using audio and video to learn language.

Learning Language Without Text Input

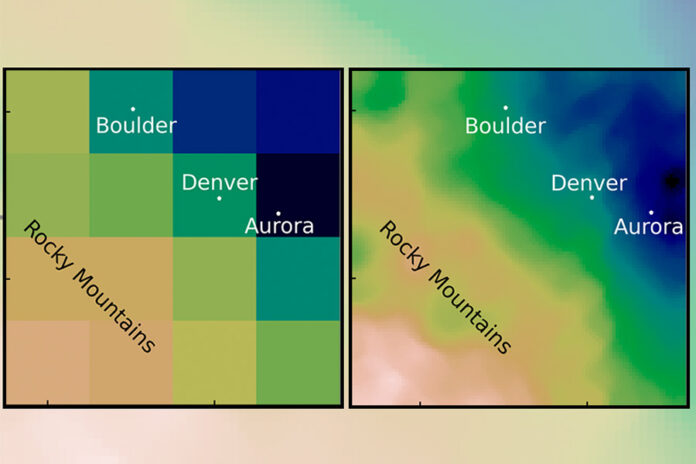

One challenge the team faced was learning language without relying on pre-trained language models. To achieve this, DenseAV processes audio and visual data with two separate components, so the algorithm has to learn by comparing pairs of signals and discovers the important patterns of language by itself.
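To make the idea of comparing pairs of signals concrete, here is a minimal sketch of a symmetric contrastive loss in plain Python. It is an illustration under stated assumptions, not DenseAV's actual implementation: the function names, the temperature value, and the use of a single embedding vector per clip are all hypothetical choices made for this example, and the random vectors stand in for the outputs of the two separate audio and visual components.

```python
import math
import random


def normalize(vec):
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in vec)) or 1.0
    return [x / norm for x in vec]


def log_softmax_at(scores, target):
    """Log-probability of scores[target] under a numerically stable softmax."""
    m = max(scores)
    log_sum = m + math.log(sum(math.exp(s - m) for s in scores))
    return scores[target] - log_sum


def contrastive_loss(audio_embs, visual_embs, temperature=0.07):
    """Symmetric contrastive loss: pair i of audio and video should match each other.

    The temperature of 0.07 is an assumed, commonly used value, not one taken
    from the DenseAV work.
    """
    audio = [normalize(a) for a in audio_embs]
    visual = [normalize(v) for v in visual_embs]
    n = len(audio)
    # sim[i][j] = scaled cosine similarity between audio clip i and video clip j.
    sim = [[sum(x * y for x, y in zip(a, v)) / temperature for v in visual]
           for a in audio]
    # Audio-to-video: each audio clip should pick out its own video among all videos.
    a2v = -sum(log_softmax_at(sim[i], i) for i in range(n)) / n
    # Video-to-audio: each video should pick out its own audio among all audio clips.
    v2a = -sum(log_softmax_at([sim[i][j] for i in range(n)], j)
               for j in range(n)) / n
    return 0.5 * (a2v + v2a)


# Toy data (hypothetical): four "video" embeddings and nearly identical "audio" ones.
random.seed(0)
visual = [[random.gauss(0, 1) for _ in range(16)] for _ in range(4)]
matched_audio = [[x + 0.01 * random.gauss(0, 1) for x in v] for v in visual]
# Rotating the audio list breaks every true pairing.
shuffled_audio = matched_audio[1:] + matched_audio[:1]

matched_loss = contrastive_loss(matched_audio, visual)
shuffled_loss = contrastive_loss(shuffled_audio, visual)
print(matched_loss < shuffled_loss)
```

The loss is low when each audio clip is most similar to its own video and high when the pairings are scrambled. Minimizing it pushes the two components to agree on matching pairs, which is the sense in which a model can learn from the matching game alone, with no text labels.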

Improving the Audio-Video Matching Game

Hamilton and his colleagues trained DenseAV on AudioSet, a dataset with 2 million YouTube videos, and created new datasets to test the model. DenseAV outperformed other models in tasks like identifying objects from their names and sounds, showcasing its effectiveness in linking sounds and images.

Future Applications and Scaling Up

The team aims to create systems that can learn from massive amounts of video-only or audio-only data, and potentially to integrate knowledge from language models to improve performance. Because it learns by observing the world through sight and sound, DenseAV could potentially learn from data in any language, making it a versatile tool for a range of applications.

The work will be presented at the IEEE/CVF Computer Vision and Pattern Recognition Conference this month, with additional authors including experts from the University of Oxford and Google AI. The research was supported by the U.S. National Science Foundation and other institutions.