In Short:

Researchers from MIT’s Computer Science and Artificial Intelligence Laboratory have developed an automated system called “MAIA” that can interpret the inner workings of artificial intelligence models. MAIA can label components within vision models, remove irrelevant features from image classifiers, and detect hidden biases that could raise fairness concerns. The system outperformed baseline methods in describing individual neurons across several vision models. This approach could help people better understand and oversee AI systems, making it easier to audit them for safety and bias.

MIT Researchers Develop Automated AI System for Interpreting Neural Networks

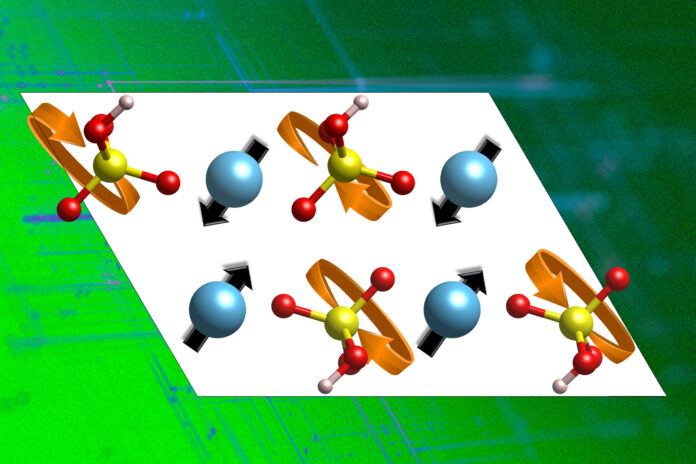

Artificial intelligence (AI) models are increasingly being integrated into various sectors such as health care, finance, education, transportation, and entertainment. Understanding how these models work is essential for auditing them for safety and biases. MIT Computer Science and Artificial Intelligence Laboratory (CSAIL) researchers have developed an automated system called MAIA (Multimodal Automated Interpretability Agent) to interpret artificial vision models.

Automated Interpretability Tasks

MAIA automates a variety of neural network interpretability tasks. It is built on a vision-language model backbone and equipped with tools for running experiments on other AI systems, so that it can carry out interpretability experiments autonomously.

Tamar Rott Shaham, an MIT postdoc at CSAIL, explains, “Our goal is to create an AI researcher that can conduct interpretability experiments autonomously. MAIA can generate hypotheses, design experiments to test them, and refine its understanding through iterative analysis.”

Key Tasks

MAIA is capable of performing three key tasks: labeling individual components inside vision models and describing visual concepts that activate them, cleaning up image classifiers by removing irrelevant features, and detecting hidden biases in AI systems to uncover potential fairness issues in their outputs.

Interpreting Neuron Behaviors

One of these tasks is describing the concepts that a particular neuron inside a vision model detects. MAIA generates hypotheses about what drives the neuron’s activity, designs experiments to test each hypothesis, and evaluates how well its explanations account for the neuron’s behavior. When tested on neurons from vision models including ResNet, CLIP, and DINO, MAIA’s descriptions outperformed those produced by baseline methods.
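To make the hypothesis-testing idea concrete, here is a minimal toy sketch in Python. It is not MAIA’s implementation: MAIA uses a vision-language model to propose hypotheses and generate real images, whereas this sketch assumes a hypothetical neuron that operates on small feature vectors and a hypothetical `synthesize_exemplar` function standing in for an image generator. For each candidate concept, it measures the neuron’s mean activation on synthetic exemplars and keeps the best-supported hypothesis.

```python
import random

# Hypothetical candidate concepts and a toy "neuron" (not a real model).
CONCEPTS = ["stripes", "fur", "sky", "text"]
# Toy neuron weights, one per concept feature: this neuron favors "stripes".
WEIGHTS = [2.0, 0.5, -0.3, 0.1]


def neuron_activation(features):
    """Hypothetical neuron: weighted sum of features followed by a ReLU."""
    total = sum(w * x for w, x in zip(WEIGHTS, features))
    return max(0.0, total)


def synthesize_exemplar(concept, rng):
    """Hypothetical stand-in for an image generator: a feature vector in
    which only the requested concept is present, plus small Gaussian noise."""
    return [
        (1.0 if name == concept else 0.0) + rng.gauss(0.0, 0.1)
        for name in CONCEPTS
    ]


def rank_hypotheses(concepts, n=32, seed=0):
    """Run one round of experiments: for each candidate concept, average the
    neuron's activation over n synthetic exemplars, then pick the concept
    with the highest mean activation."""
    rng = random.Random(seed)
    scores = {}
    for concept in concepts:
        acts = [neuron_activation(synthesize_exemplar(concept, rng)) for _ in range(n)]
        scores[concept] = sum(acts) / n
    best = max(scores, key=scores.get)
    return best, scores


best, scores = rank_hypotheses(CONCEPTS)
print(best)  # prints: stripes
```

An iterative agent would go further, for example by proposing finer-grained hypotheses around the winning concept and running another round, but a single round already shows the generate, test, and evaluate pattern.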

Addressing Bias in Image Classifiers

MAIA can also detect biases in image classifiers. It analyzes the probability scores a classifier assigns to input images and identifies the images within a given class that the classifier tends to misclassify. In one case, it found that a classifier was prone to misclassifying images of black Labradors, a sign of bias in the model.
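A simplified version of this kind of check can be sketched in Python: group the probability a classifier assigns to the true class by some image attribute, and flag subgroups whose average score lags well behind the best-scoring subgroup. This is an illustrative assumption, not MAIA’s actual procedure. The records below are invented numbers, not results from the study, and `flag_underperforming_subgroups` and its `threshold` parameter are hypothetical.

```python
from collections import defaultdict
from statistics import mean

# Invented, illustrative classifier outputs (NOT real results): each record is
# (subgroup attribute, probability assigned to the true class "labrador retriever").
records = [
    ("yellow fur", 0.94), ("yellow fur", 0.91), ("yellow fur", 0.89),
    ("black fur", 0.42), ("black fur", 0.55), ("black fur", 0.38),
]


def flag_underperforming_subgroups(records, threshold=0.2):
    """Group true-class probabilities by attribute and flag any subgroup whose
    mean trails the best-scoring subgroup's mean by more than `threshold`."""
    by_group = defaultdict(list)
    for group, prob in records:
        by_group[group].append(prob)
    means = {group: mean(probs) for group, probs in by_group.items()}
    best = max(means.values())
    flagged = sorted(g for g, m in means.items() if best - m > threshold)
    return means, flagged


means, flagged = flag_underperforming_subgroups(records)
print(flagged)  # prints: ['black fur']
```

Comparing subgroup averages within a single class isolates attributes (here, fur color) that shift the classifier’s confidence even though the correct label is the same.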

Future Directions

The researchers plan to apply similar experiments to human perception, scaling up the process of designing and testing stimuli. Jacob Steinhardt from the University of California, Berkeley, notes, “Understanding neural networks is difficult for humans because they have hundreds of thousands of neurons, each with complex behavior patterns. MAIA helps bridge this by developing AI agents that can analyze neurons and report findings back to humans.”

By making AI systems easier to understand and oversee, the researchers’ work could pave the way for safer and more transparent AI technologies.