In Short:

Foundation models can produce incorrect information, so researchers from MIT and the MIT-IBM Watson AI Lab developed a technique to estimate how reliable such a model is. The technique trains several slightly different models, aligns their representations, and checks how consistent those representations are. It can indicate whether a model is reliable for a specific task without testing it on a real-world dataset, which is especially useful in settings with privacy concerns, such as healthcare.

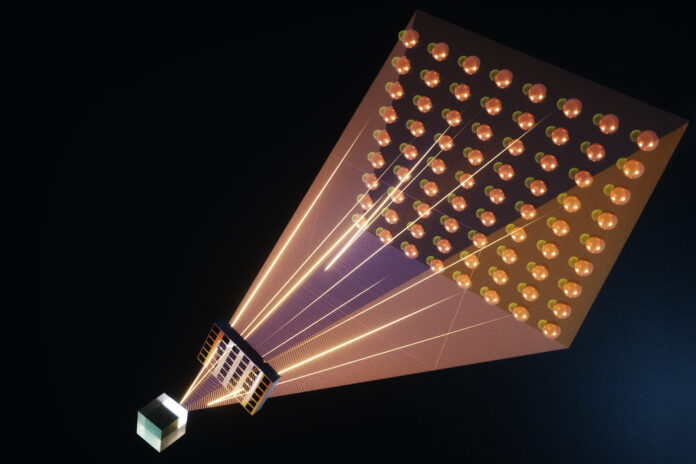

Foundation models are large-scale deep-learning models that have been pretrained on massive amounts of general-purpose, unlabeled data. These models are used for various tasks such as creating images or responding to customer inquiries.

Ensuring Reliability of Foundation Models

Researchers from MIT and the MIT-IBM Watson AI Lab have devised a technique to evaluate the reliability of foundation models before they are deployed for a specific task. They train a set of slightly different foundation models and measure how consistent the models' representations of the same test data points are: the more consistent the representations, the more reliable the model is judged to be.

When the researchers compared their technique with existing methods, it captured the reliability of foundation models better across a variety of classification tasks.

The method lets users make an informed decision about whether to use a particular model in a specific setting without having to test it on a real-world dataset. This is particularly useful when datasets are inaccessible because of privacy concerns, as in healthcare.

Senior author Navid Azizan of MIT stresses the importance of quantifying uncertainty in foundation models, since users benefit from knowing when a model is likely to be wrong.

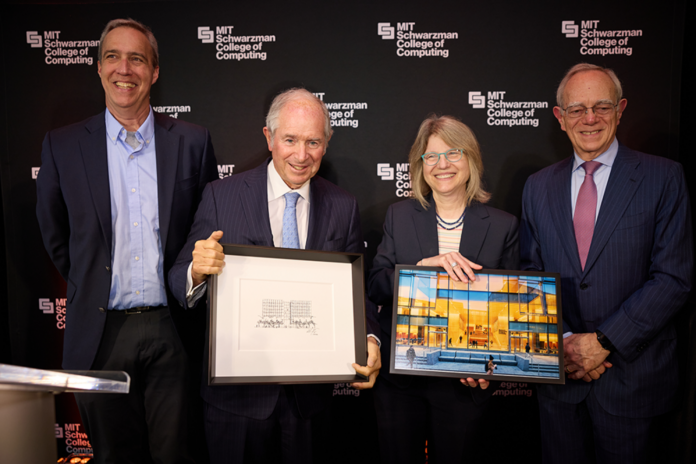

The research team includes lead author Young-Jin Park, a graduate student in MIT's Laboratory for Information and Decision Systems (LIDS); Hao Wang of the MIT-IBM Watson AI Lab; and Shervin Ardeshir of Netflix. Their work will be presented at the Conference on Uncertainty in Artificial Intelligence.

Counting Consensus

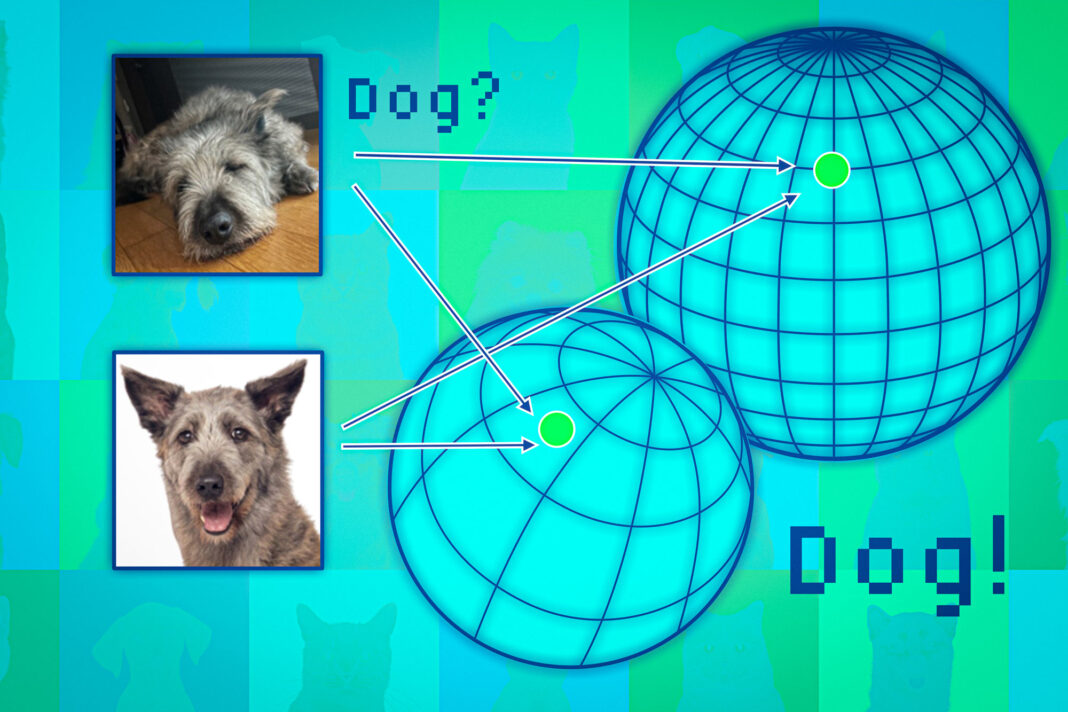

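Traditional machine-learning models produce concrete outputs, such as a “cat” or “dog” label, whose correctness can be checked directly. Foundation models instead generate abstract representations, which are harder to evaluate. To gauge reliability, the research team used an ensemble approach, training several models that are similar but slightly different from one another.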

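To compare the abstract representations produced by different models, the team relied on the idea of neighborhood consistency: they aligned the representations in a shared space and checked whether each model places a test point near the same neighbors. Because this measures agreement in the representations themselves, it yields a reliability estimate that applies across various tasks.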
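The neighborhood-consistency idea can be illustrated with a minimal sketch. It assumes each ensemble member has already embedded a shared set of reference points and the test point as plain vectors. The function names (`knn_indices`, `neighborhood_consistency`), the Euclidean distance, the value of `k`, and the choice to average pairwise Jaccard overlap of neighbor sets are illustrative assumptions, not necessarily the researchers' exact formulation.

```python
import math
from itertools import combinations

Vector = list[float]


def knn_indices(query: Vector, references: list[Vector], k: int) -> set[int]:
    """Return the indices of the k reference points closest to `query` (Euclidean)."""
    order = sorted(range(len(references)), key=lambda i: math.dist(query, references[i]))
    return set(order[:k])


def neighborhood_consistency(
    test_embeddings: list[Vector],
    reference_embeddings: list[list[Vector]],
    k: int,
) -> float:
    """Average pairwise Jaccard overlap of k-NN sets across ensemble members.

    test_embeddings[m] is model m's representation of the test point, and
    reference_embeddings[m] holds model m's representations of the shared
    reference points (same order for every model). The score lies in [0, 1];
    higher means the ensemble agrees more about the test point's neighbors.
    """
    if len(test_embeddings) < 2:
        raise ValueError("need at least two ensemble members to measure consistency")
    # Neighbors are found inside each model's own space, never across spaces.
    neighbor_sets = [
        knn_indices(test, refs, k)
        for test, refs in zip(test_embeddings, reference_embeddings)
    ]
    overlaps = [len(a & b) / len(a | b) for a, b in combinations(neighbor_sets, 2)]
    return sum(overlaps) / len(overlaps)


# Hypothetical toy ensemble: three models embedding four shared reference points in 2-D.
refs_a = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [5.0, 5.0]]
refs_b = [[2 * x, 2 * y] for x, y in refs_a]  # model B: same geometry, scaled by 2
refs_c = refs_a                                # model C: same reference geometry as A

# Models A and B place the test point near reference points 0, 1 and 2 ...
test_a, test_b = [0.2, 0.2], [0.4, 0.4]
# ... while model C places it right next to reference point 3.
test_c = [5.0, 4.8]

agreeing = neighborhood_consistency([test_a, test_b], [refs_a, refs_b], k=3)
mixed = neighborhood_consistency([test_a, test_b, test_c], [refs_a, refs_b, refs_c], k=3)
```

In this toy setup, `agreeing` is 1.0 because both models pick the same three neighbors, while `mixed` drops to 2/3 because model C's neighbor set overlaps each of the others by only half. A low score would flag the test point as one where the model's representation is less trustworthy.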

Aligning Representations

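Each model maps data points into its own representation space, so coordinates from different models cannot be compared directly. The researchers made them comparable by aligning the representations through neighboring points: for each model, they looked at which reference points lie near that model's representation of a test point. Across a wide range of classification tasks, this approach proved more consistent and effective than traditional baselines.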
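Why neighbors make separate representation spaces comparable can be shown with a toy example. Purely for illustration, it assumes a second model's space is a rotated and rescaled copy of the first. Looking up model B's test point among model A's reference points gives the wrong neighbors, but comparing neighbor sets found within each model's own space gives matching answers. The helpers `rotate_and_scale` and `knn_indices` and all coordinates are hypothetical.

```python
import math

Vector = list[float]


def rotate_and_scale(v: Vector, angle: float, scale: float) -> Vector:
    """Rotate a 2-D vector by `angle` radians, then multiply it by `scale`."""
    c, s = math.cos(angle), math.sin(angle)
    return [scale * (c * v[0] - s * v[1]), scale * (s * v[0] + c * v[1])]


def knn_indices(query: Vector, references: list[Vector], k: int) -> set[int]:
    """Return the indices of the k reference points closest to `query` (Euclidean)."""
    order = sorted(range(len(references)), key=lambda i: math.dist(query, references[i]))
    return set(order[:k])


# Hypothetical model A: shared reference points and one test point.
refs_a = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [5.0, 5.0]]
test_a = [0.3, 0.1]

# Hypothetical model B: same geometry, expressed in rotated (90 degrees), doubled coordinates.
angle, scale = math.pi / 2, 2.0
refs_b = [rotate_and_scale(r, angle, scale) for r in refs_a]
test_b = rotate_and_scale(test_a, angle, scale)

# Misaligned comparison: B's test point looked up among A's reference points.
naive = knn_indices(test_b, refs_a, k=2)

# Aligned comparison: neighbors are found within each model's own space,
# then compared by the indices of the shared reference points.
neighbors_a = knn_indices(test_a, refs_a, k=2)
neighbors_b = knn_indices(test_b, refs_b, k=2)
```

Here `neighbors_a` and `neighbors_b` are both `{0, 1}`, while the naive cross-space lookup returns `{0, 2}`. Describing a point by the identities of its nearby reference points, rather than by raw coordinates, is what makes outputs from differently trained models comparable in this sketch.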

The method can be computationally expensive because it requires training several large models; the researchers plan to explore more efficient approaches in future work.

Funding for this work is provided by the MIT-IBM Watson AI Lab, MathWorks, and Amazon.