In Short:

In 1994, Diana Duyser found what she believed to be the Virgin Mary’s image in a grilled cheese sandwich, which she later sold for $28,000. A new MIT study explores pareidolia, the phenomenon of seeing faces in random objects, through a dataset of 5,000 labeled images. The research reveals differences between human and AI perception and suggests that an evolutionary advantage in spotting faces may underlie the phenomenon. The findings may also improve face detection technology in various fields.

In 1994, Florida jewelry designer Diana Duyser famously preserved a grilled cheese sandwich, claiming it bore the image of the Virgin Mary. The sandwich later fetched an impressive $28,000 at auction. The incident is a well-known example of pareidolia, the psychological phenomenon that causes people to perceive familiar patterns or faces in random objects, and it raises questions about how well we understand that phenomenon.

The Study by MIT CSAIL

A recent study conducted by the MIT Computer Science and Artificial Intelligence Laboratory (CSAIL) presents a comprehensive human-labeled dataset containing 5,000 pareidolic images. This dataset significantly exceeds the scale of previous collections. The research team uncovered several intriguing findings about the perceptions of humans compared to machines concerning these illusory faces, suggesting an evolutionary link that could explain this visual phenomenon.

“Face pareidolia has long fascinated psychologists, but it’s been largely unexplored in the computer vision community,” stated Mark Hamilton, an MIT PhD student in electrical engineering and computer science and the lead researcher for this project. “We aimed to create a resource that could enhance our understanding of how both humans and AI systems interpret these illusory faces.”

Key Discoveries

The research team observed that AI models struggle to recognize pareidolic faces in the same manner that humans do. Interestingly, the researchers discovered that training algorithms to identify animal faces significantly improved their ability to detect pareidolic faces. This unexpected finding implies an evolutionary basis for our capacity to recognize animal faces—critical for human survival—which could also underlie our inclination to see faces in inanimate objects. In Hamilton’s words, “This suggests that pareidolia might stem from deeper instincts, such as swiftly identifying a concealed predator.”

Another noteworthy finding from the study is the characterization of a phenomenon dubbed the “Goldilocks Zone of Pareidolia.” According to William T. Freeman, an MIT professor and principal investigator, this refers to a specific visual complexity range where both humans and AI are most likely to recognize faces in non-facial objects. The research indicated that images that are either too simple or overly complex tend to be less effective for pareidolic recognition.

Methodology and Dataset Development

The research team used an equation to model how both humans and algorithms detect these illusory faces. Their analysis revealed a distinct “pareidolic peak” where the likelihood of detecting a face is highest, at an intermediate level of image complexity. This predicted “Goldilocks zone” was subsequently confirmed through experiments involving human subjects and AI detection systems.
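The idea of a “pareidolic peak” can be illustrated with a toy curve. The sketch below is not the equation from the study, which is not given here. It assumes a hypothetical unimodal function, `detection_likelihood`, that rises from zero, peaks at a chosen complexity, and then decays, and it simply locates that peak by scanning a range of complexity values. The function name, its shape, and the `peak` parameter are all assumptions made for illustration.

```python
import math


def detection_likelihood(complexity: float, peak: float = 1.0) -> float:
    """Toy, hypothetical curve (not the study's model).

    Rises from 0, reaches its maximum of 1.0 at `complexity == peak`,
    then decays toward 0 as complexity keeps growing.
    """
    if complexity < 0:
        raise ValueError("complexity must be non-negative")
    x = complexity / peak
    return x * math.exp(1.0 - x)


def find_peak(values, likelihood=detection_likelihood):
    """Return the complexity value with the highest toy likelihood."""
    return max(values, key=likelihood)


# Scan complexities from 0.0 to 5.0 in steps of 0.1.
complexities = [i / 10 for i in range(51)]
best = find_peak(complexities)
print(f"toy peak at complexity {best}")
```

Images that are too simple (near 0) or too complex (far right of the scan) both score low under this toy curve, which is the qualitative behavior the Goldilocks zone describes.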

The new dataset, titled Faces in Things, is far larger than those of prior studies, which included only 20-30 stimuli. Its size let the researchers examine how advanced face detection algorithms behaved when fine-tuned on pareidolic faces, and explore questions about the origins of face detection that would be impossible to investigate through human experimentation alone.

To compile this dataset, the team sourced approximately 20,000 candidate images from the LAION-5B dataset, and human annotators then meticulously labeled them. This extensive process involved outlining each perceived face and answering detailed questions about its characteristics. Hamilton acknowledged the immense effort involved, attributing part of the dataset’s success to the contributions of his mother, a retired banker, who dedicated considerable time to labeling images for the analysis.
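One way to picture the result of this labeling process is as a record per image holding the outlined faces and the annotator's answers. The sketch below is a guess at such a structure, not the actual Faces in Things schema: the class names, the rectangular outline, and the free-form `answers` mapping are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class FaceAnnotation:
    """One outlined face (hypothetical schema, not the dataset's own)."""
    x: float       # left edge of the outline, in pixels
    y: float       # top edge of the outline, in pixels
    width: float
    height: float
    # Annotator's answers to the per-face questions, keyed by question text.
    answers: dict = field(default_factory=dict)

    def area(self) -> float:
        return self.width * self.height


@dataclass
class LabeledImage:
    """A candidate image plus every face an annotator outlined in it."""
    image_id: str
    faces: list = field(default_factory=list)

    def has_face(self) -> bool:
        return len(self.faces) > 0


# Example record with made-up values.
record = LabeledImage(image_id="example-0001")
record.faces.append(
    FaceAnnotation(x=10, y=20, width=40, height=50,
                   answers={"example question": "example answer"})
)
print(record.has_face(), record.faces[0].area())
```

A candidate image in which the annotator saw no face would simply carry an empty `faces` list.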

Future Implications

The findings could improve face detection systems by reducing false positives, which would benefit fields such as self-driving technologies, human-computer interaction, and robotics. Understanding and controlling pareidolia could also aid product design, leading to more appealing and less intimidating consumer products. “Imagine being able to automatically modify the design of a car or a child’s toy to make it appear friendlier,” noted Hamilton.

This research prompts further questions regarding the disparity between human perception and algorithmic interpretation of pareidolic cues and whether the phenomenon is advantageous or detrimental. Addressing these questions could unveil significant insights into the intricacies of the human visual system.

Looking Forward

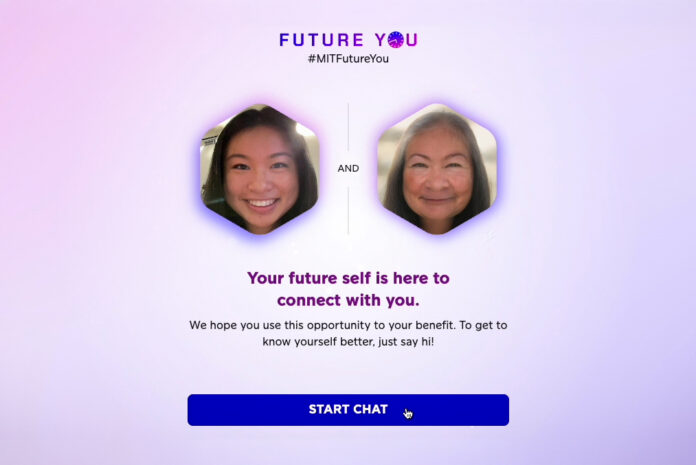

As researchers prepare to disseminate their dataset within the scientific community, they are also envisioning future projects that may involve training vision-language models to comprehend and interpret pareidolic faces, ultimately guiding AI systems towards more human-like engagement with visual stimuli.

In the words of Pietro Perona, the Allen E. Puckett Professor of Electrical Engineering at Caltech, “This is a delightful paper! It inspires thought and curiosity around the question: why do we perceive faces in objects?”

The research collaboration also includes contributions from Simon Stent, Ruth Rosenholtz, Vasha DuTell, Anne Harrington, and Jennifer Corbett, supported by the National Science Foundation, United States Air Force Research Laboratory, and MIT SuperCloud resources. Their findings will be presented this week at the European Conference on Computer Vision.