In Short:

This article discusses recent research on instruction finetuning for large language models (LLMs). The focus is on a new method called Magpie, which generates synthetic instruction-response pairs that lead to improved model performance after finetuning. The article also covers the release of Google’s Gemma 2 models and Nvidia’s Nemotron-4 340B model. Additionally, it mentions several other interesting research papers that came out in June. Happy reading!

Recent LLM Research Roundup

Recent Research Highlights

A lot happened last month in the world of large language models (LLMs). Tech giants such as Apple, Nvidia, and Google made significant announcements. Let’s dive into some of the recent research on instruction finetuning, a fundamental technique for training LLMs.

Research Topics Covered in this Article:

- A new method for generating data for instruction finetuning

- Instruction finetuning from scratch

- Pretraining LLMs with instruction data

- An overview of Google’s Gemma 2

- An overview of other interesting research papers from June

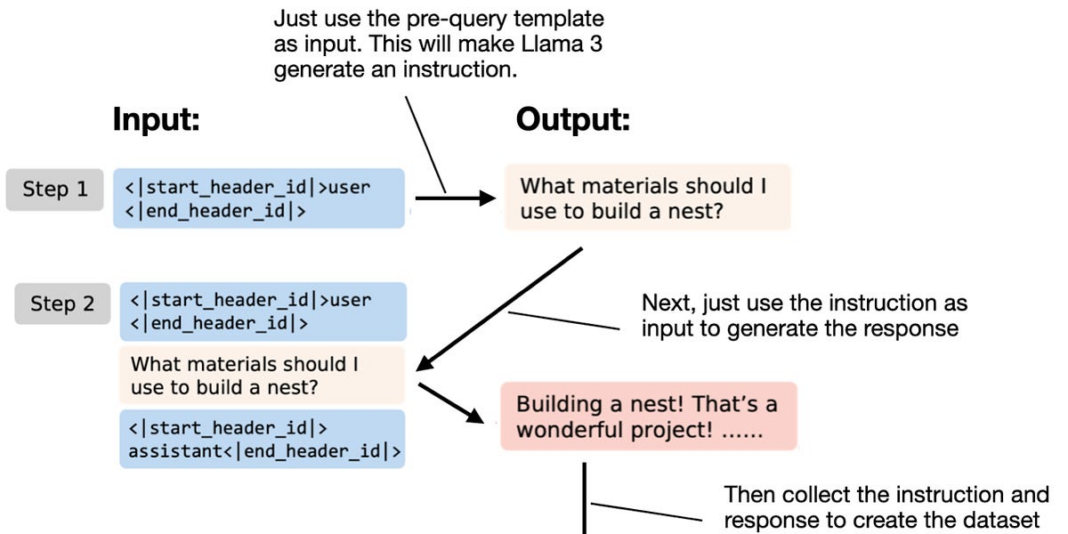

The Magpie Method

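The Magpie method, introduced in a recent paper, offers a novel approach to generating synthetic instruction data for LLM finetuning. It builds an instruction dataset by prompting an aligned LLM with “nothing”: the model receives only the opening of its chat template, with the user turn left empty, and writes the instruction itself. The same model is then prompted with that instruction to produce the matching response.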

Key findings from the Magpie method:

- Generation of a high-quality dataset for instruction finetuning

- Improved performance of LLMs through instruction finetuning
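To make the idea of prompting with “nothing” more concrete, here is a minimal sketch of the two-step generation loop. It is an illustration under stated assumptions, not the paper's implementation: the template strings follow the Llama 3 Instruct chat format and would differ for other models, and `generate` is a hypothetical stand-in for whatever completion function your inference stack provides.

```python
from typing import Callable, Dict, List

# Assumed chat-template pieces in the style of Llama 3 Instruct. Other models
# use different special tokens, so treat these strings as placeholders.
PRE_QUERY = "<|begin_of_text|><|start_header_id|>user<|end_header_id|>\n\n"
POST_QUERY = "<|eot_id|><|start_header_id|>assistant<|end_header_id|>\n\n"


def magpie_pair(generate: Callable[[str], str]) -> Dict[str, str]:
    """Create one instruction-response pair starting from an empty user turn.

    `generate` is a hypothetical function that takes a raw prompt string and
    returns the model's continuation up to its end-of-turn token.
    """
    # Step 1: send only the pre-query template. Because the user turn is
    # empty, the aligned model fills it in by writing an instruction itself.
    instruction = generate(PRE_QUERY).strip()

    # Step 2: close the user turn, open the assistant turn, and let the same
    # model answer the instruction it just produced.
    response = generate(PRE_QUERY + instruction + POST_QUERY).strip()
    return {"instruction": instruction, "response": response}


def build_dataset(generate: Callable[[str], str], n: int) -> List[Dict[str, str]]:
    """Repeat the two-step procedure n times (sampling makes each pair differ)."""
    return [magpie_pair(generate) for _ in range(n)]
```

In practice, the generated pairs would still need filtering (for example, for duplicates or low-quality instructions) before being used for finetuning; that step is omitted here.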

Instruction Pre-Training Approach

Another significant paper introduces an instruction pre-training approach for LLMs. By including synthetic instruction-response pairs in the pretraining process, the researchers demonstrate improved efficiency and knowledge acquisition in LLMs.

Noteworthy points from the instruction pre-training study:

- Improved performance of LLMs trained from scratch with instruction pre-training

- Benefits of using synthetic instruction-response pairs in pretraining
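As a rough illustration of what including instruction-response pairs in pretraining data can look like, the sketch below appends synthesized pairs to each raw document before it enters the pretraining corpus. The `synthesize` callable is hypothetical (it stands in for whatever model produces the pairs), and the "Question:"/"Answer:" layout is an assumption for readability, not the template used in the paper.

```python
from typing import Callable, List, Tuple

Pair = Tuple[str, str]  # (instruction, response)


def augment_document(raw_text: str, pairs: List[Pair]) -> str:
    """Append instruction-response pairs to a raw pretraining document.

    The "Question:"/"Answer:" layout is an illustrative assumption.
    """
    parts = [raw_text.strip()]
    for instruction, response in pairs:
        parts.append(f"Question: {instruction.strip()}\nAnswer: {response.strip()}")
    return "\n\n".join(parts)


def build_corpus(
    docs: List[str],
    synthesize: Callable[[str], List[Pair]],
) -> List[str]:
    """Augment every document with pairs produced by a hypothetical synthesizer."""
    return [augment_document(doc, synthesize(doc)) for doc in docs]
```

The augmented strings are then tokenized and trained on with the usual next-token prediction objective, so no change to the training loop itself is implied by this sketch.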

Google’s Gemma 2 Release

Google’s Gemma 2 models have garnered attention as relatively small and efficient LLMs. Their key design choices include sliding window attention and grouped-query attention on the architecture side, and knowledge distillation on the training side.
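The two attention-related ideas can be sketched in a few lines of plain Python. This is a toy illustration of the general techniques, not Gemma 2's code: the function names are made up for this example, and the window size and head counts you pass in are arbitrary rather than the model's actual configuration.

```python
from typing import List


def sliding_window_mask(seq_len: int, window: int) -> List[List[bool]]:
    """Causal sliding-window mask.

    mask[i][j] is True when query position i may attend to key position j,
    i.e. j is not in the future and lies within the last `window` positions.
    """
    return [
        [(j <= i) and (i - j < window) for j in range(seq_len)]
        for i in range(seq_len)
    ]


def kv_head_for_query_head(
    query_head: int, num_query_heads: int, num_kv_heads: int
) -> int:
    """Grouped-query attention: consecutive query heads share one key-value head."""
    if num_query_heads % num_kv_heads != 0:
        raise ValueError("num_query_heads must be divisible by num_kv_heads")
    group_size = num_query_heads // num_kv_heads
    return query_head // group_size
```

With `window=2`, each token attends only to itself and the previous token, which keeps attention cost bounded as the sequence grows. With 8 query heads and 4 key-value heads, query heads 0 and 1 share key-value head 0, heads 2 and 3 share head 1, and so on, which shrinks the key-value cache.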

Highlights of Gemma 2 models:

- Efficient design choices including sliding window attention and grouped-query attention

- Knowledge distillation in model training
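For readers unfamiliar with knowledge distillation, the sketch below shows one common formulation of the loss for a single token position: the student is trained to match the teacher's next-token distribution by minimizing the KL divergence between the two. Whether Gemma 2 uses exactly this form (for example, with or without temperature scaling or an added next-token loss) is not stated here, so treat the details as assumptions.

```python
import math
from typing import List


def log_softmax(logits: List[float]) -> List[float]:
    """Numerically stable log-softmax over a list of logits."""
    m = max(logits)
    log_total = m + math.log(sum(math.exp(x - m) for x in logits))
    return [x - log_total for x in logits]


def distillation_loss(student_logits: List[float], teacher_logits: List[float]) -> float:
    """KL(teacher || student) for one token position.

    The loss is zero when both models assign identical probabilities and
    grows as the student's distribution drifts away from the teacher's.
    """
    if len(student_logits) != len(teacher_logits):
        raise ValueError("student and teacher must share the same vocabulary size")
    log_p = log_softmax(teacher_logits)  # teacher log-probabilities
    log_q = log_softmax(student_logits)  # student log-probabilities
    return sum(math.exp(lp) * (lp - lq) for lp, lq in zip(log_p, log_q))
```

In a real training loop, this quantity would be averaged over all token positions in a batch and backpropagated through the student only, with the teacher's weights kept frozen.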

Other Research Papers

Beyond the Magpie method, instruction pre-training, and the Gemma 2 models, many other LLM research papers were published in June, covering a wide range of topics and experiments.

Some notable research papers from June:

- …

- …

- …

Support the Author

The research roundup presented here is part of a personal passion project. To support the author, consider exploring their published books and sharing feedback through reviews on platforms like Amazon.

Thank you for your support!