In Short:

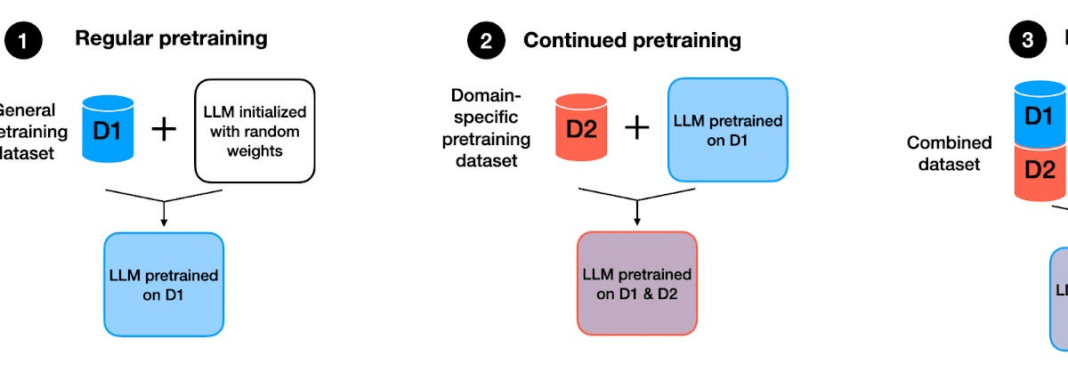

The latest in AI research includes the release of xAI’s Grok-1, which at 314 billion parameters is the largest open-source model to date, and reports of Claude-3 rivaling GPT-4. The research discussion covers two papers: one on strategies for continually pretraining large language models (LLMs), and one on evaluating the reward models used in reinforcement learning from human feedback (RLHF). Continued pretraining is highlighted as a cost-effective approach that performs well without retraining from scratch.

Latest Highlights in AI Research

It’s been another eventful month in AI research, with significant announcements about new models and papers.

New Open-Source Models and Breakthrough Performance

xAI has released its Grok-1 model, which has 314 billion parameters, making it the largest open-source model to date. Reports also suggest that Claude-3 matches or even surpasses the performance of GPT-4. Other notable releases include Open-Sora 1.0 for video generation; Eagle 7B, a new RWKV-based model; Mosaic’s DBRX, with 132 billion parameters; and AI21’s Jamba, a Mamba-based SSM-transformer model.

Focus on Research Papers

Because little detailed information is available about these models, this month’s discussion centers on research papers. One paper examines strategies for continually pretraining LLMs so they stay current with new information and trends; another looks at reward modeling in reinforcement learning from human feedback (RLHF) and introduces a new benchmark for evaluating reward models.

Continual Pretraining Strategies

The first paper examines how to continually pretrain LLMs on new data, both to update existing models and to adapt them to new target domains, without retraining from scratch. The study compares continued pretraining against retraining from scratch on all of the data and finds that continued pretraining can match its performance at a lower cost.
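The paper’s recipe, as we understand it, combines re-warming and re-decaying the learning rate with “replaying” a small share of the original pretraining data alongside the new data, which limits forgetting of earlier knowledge. The Python sketch below illustrates both ideas under assumptions of our own: a linear-warmup plus cosine-decay schedule, and a 5% replay share chosen only for illustration. The function names (`lr_at`, `continued_pretraining_schedule`, `mix_with_replay`) are hypothetical and not the paper’s code.

```python
import math
import random


def lr_at(step, total_steps, warmup_steps, max_lr, min_lr, start_lr):
    """Learning rate at `step`: linear warmup from start_lr to max_lr,
    then cosine decay from max_lr down to min_lr (assumed schedule shape)."""
    if step < warmup_steps:
        # (Re-)warming: ramp linearly from start_lr up to max_lr.
        return start_lr + (max_lr - start_lr) * step / warmup_steps
    # (Re-)decaying: cosine anneal over the remaining steps.
    progress = min(1.0, (step - warmup_steps) / max(1, total_steps - warmup_steps))
    return min_lr + 0.5 * (max_lr - min_lr) * (1.0 + math.cos(math.pi * progress))


def continued_pretraining_schedule(total_steps, warmup_steps, max_lr, min_lr):
    """LR schedule for the new-data phase. Assumes the previous run ended at
    min_lr, so we re-warm from min_lr to max_lr and then re-decay to min_lr."""
    return [
        lr_at(s, total_steps, warmup_steps, max_lr, min_lr, start_lr=min_lr)
        for s in range(total_steps + 1)
    ]


def mix_with_replay(new_docs, old_docs, replay_fraction, seed=0):
    """Blend new-domain documents with a random sample of the original
    pretraining data so that about `replay_fraction` of the result is old data."""
    if not 0.0 <= replay_fraction < 1.0:
        raise ValueError("replay_fraction must be in [0, 1)")
    rng = random.Random(seed)
    # Solve n_old / (n_old + n_new) = replay_fraction for n_old.
    n_old = round(len(new_docs) * replay_fraction / (1.0 - replay_fraction))
    replay = rng.sample(old_docs, min(n_old, len(old_docs)))
    mixed = list(new_docs) + replay
    rng.shuffle(mixed)
    return mixed


if __name__ == "__main__":
    sched = continued_pretraining_schedule(
        total_steps=100, warmup_steps=10, max_lr=3e-4, min_lr=3e-5
    )
    print(f"start={sched[0]:.1e} peak={max(sched):.1e} end={sched[-1]:.1e}")
    new = [f"new-{i}" for i in range(95)]
    old = [f"old-{i}" for i in range(1000)]
    data = mix_with_replay(new, old, replay_fraction=0.05)
    print(len(data), sum(d.startswith("old-") for d in data))
```

Re-warming starts from the previous run’s final learning rate rather than from zero; that starting point is an assumption of this sketch, and the warmup length, peak rate, and replay share would all need tuning in practice.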

Key Insights from Reward Modeling

The second paper introduces a benchmark for evaluating the reward models used in reinforcement learning from human feedback. The benchmark presents each reward model with pairs of chosen and rejected responses to the same prompt and checks whether it scores the chosen response higher, shedding light on how well different reward models perform and how that may affect downstream tasks.
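Evaluation on chosen/rejected pairs reduces to a pairwise accuracy: the fraction of pairs where the reward model gives the chosen response a higher score than the rejected one. The Python sketch below computes that metric; `pairwise_accuracy` and the length-based `toy_reward` are hypothetical stand-ins, not the benchmark’s actual code, and a real evaluation would call a trained reward model instead.

```python
from typing import Callable, Sequence, Tuple

# One benchmark item: (prompt, chosen_response, rejected_response).
Pair = Tuple[str, str, str]
# A reward model maps (prompt, response) to a scalar score.
RewardFn = Callable[[str, str], float]


def pairwise_accuracy(reward_fn: RewardFn, pairs: Sequence[Pair]) -> float:
    """Fraction of pairs where the reward model scores the chosen response
    strictly higher than the rejected one (ties count as misses)."""
    if not pairs:
        raise ValueError("pairs must not be empty")
    wins = sum(
        1
        for prompt, chosen, rejected in pairs
        if reward_fn(prompt, chosen) > reward_fn(prompt, rejected)
    )
    return wins / len(pairs)


def toy_reward(prompt: str, response: str) -> float:
    """Hypothetical stand-in for a trained reward model: it simply prefers
    longer responses and ignores the prompt."""
    return float(len(response))


if __name__ == "__main__":
    pairs = [
        ("What is 2+2?", "2+2 equals 4.", "5"),
        ("Name a primary color.", "Red is a primary color.", "Green, probably"),
        ("Say hi.", "Hi!", "Hello there, how can I help you with anything at all?"),
    ]
    print(f"accuracy = {pairwise_accuracy(toy_reward, pairs):.2f}")
```

Because the toy reward only prefers longer answers, it misjudges the third pair, where the rejected response is longer. Swapping in a real reward model only requires a function with the same `(prompt, response) -> float` shape; counting ties as misses is a convention assumed here.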

Together, these papers address two practical questions: how to keep LLMs up to date without the cost of retraining from scratch, and how to measure the quality of the reward models used to align them.