In Short:

This month, xAI open-sourced Grok-1, which at 314 billion parameters is the largest open-source model released so far. Claude-3 is reported to match or exceed GPT-4’s performance. Other announcements included Open-Sora 1.0, Eagle 7B, Mosaic’s 132-billion-parameter DBRX, and AI21’s Jamba. Two research topics are also covered: continual pretraining, which keeps LLMs up to date, and reward modeling, which aligns LLMs with human preferences for better performance and safety.

AI Research Highlights for the Month

It’s another month in AI research, with several significant announcements. xAI has open-sourced its Grok-1 model, which at 314 billion parameters sets a new record for the largest open-source model. Reports also indicate that Claude-3 rivals or surpasses GPT-4. Other notable announcements include Open-Sora 1.0, Eagle 7B, Mosaic’s DBRX model, and AI21’s Jamba.

Focus on Research Papers

Since detailed information about these models is still limited, this month’s discussion focuses on two research papers instead: one on pretraining strategies for large language models (LLMs), and one on reward modeling for reinforcement learning with human feedback (RLHF).

Continual Pretraining for LLMs

Continual pretraining is crucial for updating existing LLMs with new data and knowledge, ensuring they stay current and adaptable to new domains without the need for retraining from scratch.

Pretraining Methods Comparison

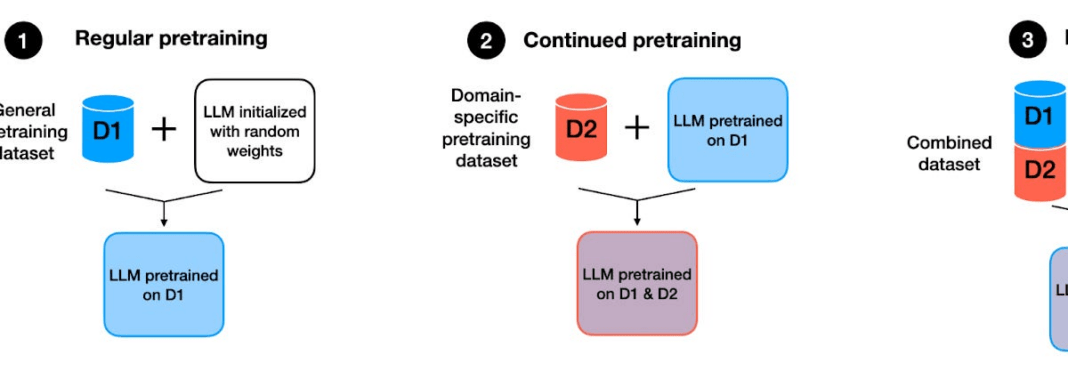

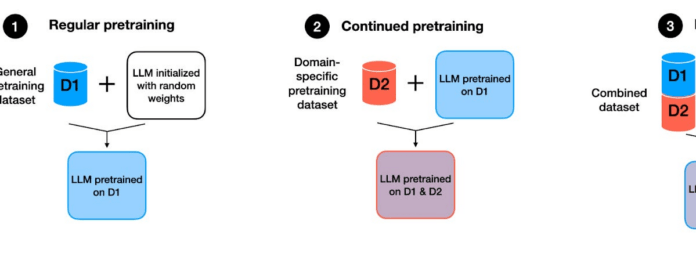

A recent paper compares three ways of training an LLM when new data arrives: regular pretraining (training a randomly initialized model on a dataset), continued pretraining (taking an already pretrained model and training it further on the new data), and retraining from scratch on the combined old and new data. The study finds that continued pretraining is more cost-effective and efficient than retraining from scratch.
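The three regimes can be made concrete with a toy example. The sketch below is purely illustrative and is not the paper’s setup: it uses a one-parameter model y = w * x in place of an LLM, and the datasets, slopes, epoch counts, and the `train` helper are all assumptions made for the illustration. It only shows why continuing from pretrained weights needs fewer updates than retraining on the combined data.

```python
import random

def make_data(slope, n, seed):
    """Synthetic (x, y) pairs with y = slope * x (hypothetical stand-in for a corpus)."""
    rng = random.Random(seed)
    return [(x, slope * x) for x in (rng.uniform(-1, 1) for _ in range(n))]

def mse(w, data):
    """Mean squared error of the model y = w * x on the given data."""
    return sum((w * x - y) ** 2 for x, y in data) / len(data)

def train(w, data, epochs, lr=0.1):
    """Plain SGD on y = w * x. Returns the new weight and the number of updates."""
    updates = 0
    for _ in range(epochs):
        for x, y in data:
            w -= lr * 2 * (w * x - y) * x  # gradient of the squared error
            updates += 1
    return w, updates

data_a = make_data(slope=2.0, n=200, seed=0)  # original data
data_b = make_data(slope=2.5, n=200, seed=1)  # new data with a shifted target

# 1) Regular pretraining: start from an arbitrary initial weight, train on A.
w_pre, cost_pre = train(0.0, data_a, epochs=5)

# 2) Continued pretraining: start from the pretrained weight, train on B only.
w_cont, cost_cont = train(w_pre, data_b, epochs=5)

# 3) Retraining: start from the initial weight again, train on A and B combined.
w_retrain, cost_retrain = train(0.0, data_a + data_b, epochs=5)

print(f"loss on new data before continuing: {mse(w_pre, data_b):.4f}")
print(f"loss on new data after continuing:  {mse(w_cont, data_b):.4f}")
print(f"updates: continued={cost_cont}, retrained={cost_retrain}")
```

In this toy run the continued model needs half as many updates as the retrained one, simply because it only visits the new data. Whether a similar saving holds for a real LLM depends on the relative dataset sizes and on training details that this sketch leaves out.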

Reward Modeling in Reinforcement Learning

Reward modeling plays a significant role in aligning LLMs with human preferences, which helps with safety and with adapting models to complex tasks. A second paper introduces RewardBench, a benchmark for evaluating the reward models used in RLHF to align pretrained language models with human preferences.
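For context, reward models for RLHF are commonly trained on pairs of responses with a pairwise (Bradley-Terry style) loss that pushes the reward of the preferred response above that of the rejected one. The sketch below shows that loss for a single pair. It is a generic illustration, not code from the RewardBench paper, and the function name is an assumption.

```python
import math

def pairwise_reward_loss(reward_chosen: float, reward_rejected: float) -> float:
    """Return -log(sigmoid(reward_chosen - reward_rejected)) for one preference pair."""
    margin = reward_chosen - reward_rejected
    # -log(sigmoid(m)) equals log(1 + exp(-m)); branch on the sign of m
    # so that exp() is never called with a large positive argument.
    if margin > 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))

# The loss is small when the chosen response is scored well above the rejected one,
# and large when the ranking is reversed.
print(pairwise_reward_loss(2.0, 0.0))
print(pairwise_reward_loss(0.0, 2.0))
```

In practice the two rewards would come from a neural network scoring (prompt, response) pairs, and the loss would be averaged over a batch and minimized by gradient descent.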

Comparison and Results

The benchmark evaluates both conventional reward models and models trained with Direct Preference Optimization (DPO). Many DPO models rank well, which may partly reflect that DPO is simpler than RLHF with a dedicated reward model. The study also reports a correlation between reward accuracy and model size.
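One way to read “reward accuracy” here is as a pairwise win rate: a model scores a win on a (prompt, chosen, rejected) example when it assigns the chosen response a higher reward than the rejected one. The sketch below illustrates that metric, along with the implicit reward that a DPO-trained model provides, which is beta times the difference between the response’s log-probability under the policy and under the reference model. The example pairs, the `toy_score` function, and the beta value are hypothetical and exist only to make the code runnable.

```python
from typing import Callable, List, Tuple

Pair = Tuple[str, str, str]  # (prompt, chosen response, rejected response)

def pairwise_accuracy(score: Callable[[str, str], float], pairs: List[Pair]) -> float:
    """Fraction of pairs where the chosen response gets a strictly higher score."""
    wins = sum(
        1 for prompt, chosen, rejected in pairs
        if score(prompt, chosen) > score(prompt, rejected)
    )
    return wins / len(pairs)

def dpo_implicit_reward(logp_policy: float, logp_reference: float, beta: float = 0.1) -> float:
    """Implicit DPO reward for one response, up to a prompt-dependent constant
    that cancels when two responses to the same prompt are compared."""
    return beta * (logp_policy - logp_reference)

def toy_score(prompt: str, response: str) -> float:
    """Hypothetical stand-in for a reward model: it simply prefers shorter responses."""
    return -float(len(response))

pairs = [
    ("Capital of France?", "Paris", "I think it might be Lyon"),
    ("2 + 2?", "4", "Probably 5"),
    ("Is water wet?", "Yes, in the everyday sense of the word.", "No"),
]

print(pairwise_accuracy(toy_score, pairs))  # the toy scorer gets 2 of the 3 pairs right
```

To evaluate a DPO model in the same way, `score` would wrap `dpo_implicit_reward`, with the two log-probabilities computed by the trained policy and its reference model.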

Final Thoughts

The research landscape in the AI field continues to evolve with new strategies and benchmarks introduced regularly. As researchers push the boundaries of AI capabilities, it’s crucial to analyze and adapt these advancements for practical applications.

Whether it’s refining pretraining methods for LLMs or optimizing reward models for reinforcement learning, each study contributes valuable insights to the broader AI community. Stay tuned for more updates and innovations in the ever-evolving field of artificial intelligence.