In Short:

In April 2024, several major transformer-based LLMs were released, including Mixtral 8x22B, Llama 3, Phi-3, and OpenELM. A new study compared DPO and PPO for LLM alignment and found that PPO generally outperforms DPO. Other research papers published in April covered a range of topics related to large language models and their applications. Overall, it was an exceptional month for LLM research and new model releases.

April 2024: Major LLM Releases

In April 2024, Mistral AI, Meta AI, Microsoft, and Apple each released prominent transformer-based LLMs. Let’s look at the details of these releases and what sets each one apart.

Mixtral 8x22B by Mistral AI

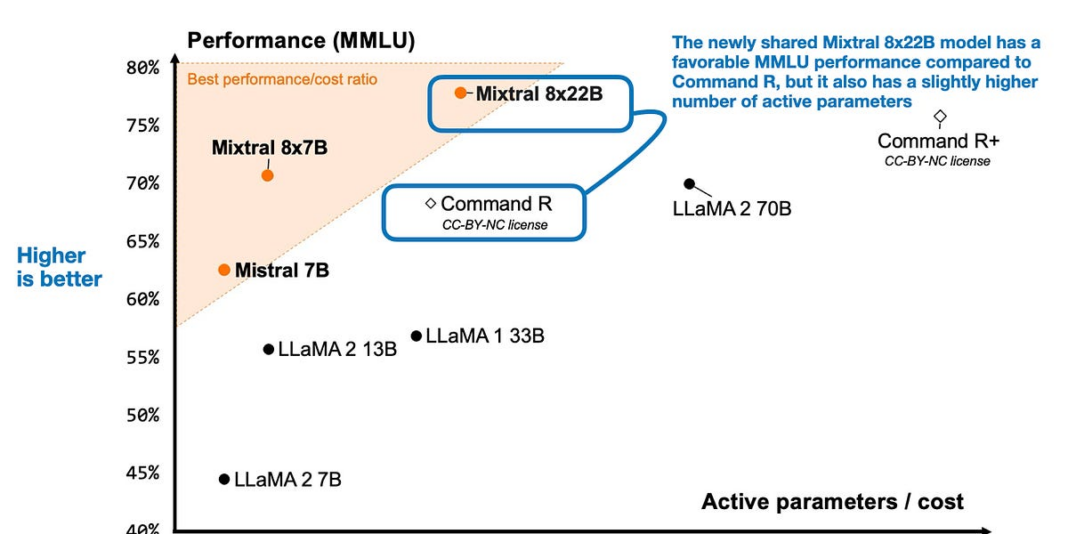

Mixtral 8x22B is the latest mixture-of-experts (MoE) model by Mistral AI. Its key idea is to replace each feed-forward module in the transformer architecture with 8 expert layers. Released under an Apache 2.0 open-source license, Mixtral 8x22B follows its predecessor, Mixtral 8x7B, which was released in December 2023 and described in a paper in January 2024.

Key Highlights:

- Aims for strong modeling performance on the Massive Multitask Language Understanding (MMLU) benchmark

- Uses a sparse MoE design: each feed-forward module is replaced by 8 expert layers, and a router sends each token to only a subset of them
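To make the MoE idea concrete, here is a minimal sketch of routing a single token through a layer with 8 experts. It is a toy in pure Python, not Mistral AI's implementation: the experts are stand-in functions that only scale their input, the router weights are random, and `top_k=2` is an assumption based on how the Mixtral models are usually described. The names `moe_forward` and `make_toy_expert` are hypothetical.

```python
import math
import random


def softmax(values):
    """Numerically stable softmax over a list of floats."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(token, router_weights, experts, top_k=2):
    """Route one token vector through the top_k highest-scoring experts."""
    # Router: one logit per expert (dot product of the token with that expert's row).
    logits = [sum(w * x for w, x in zip(row, token)) for row in router_weights]
    # Keep only the top_k experts for this token; the others are never evaluated.
    chosen = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:top_k]
    # Normalize the gate weights over the chosen experts only.
    gates = softmax([logits[i] for i in chosen])
    output = [0.0] * len(token)
    for gate, index in zip(gates, chosen):
        expert_out = experts[index](token)
        output = [o + gate * e for o, e in zip(output, expert_out)]
    return output, chosen


def make_toy_expert(scale):
    """Stand-in for a feed-forward module (assumption): scales the input."""
    return lambda vec: [scale * v for v in vec]


random.seed(0)
NUM_EXPERTS, DIM = 8, 4
experts = [make_toy_expert(i + 1) for i in range(NUM_EXPERTS)]
router_weights = [[random.uniform(-1, 1) for _ in range(DIM)] for _ in range(NUM_EXPERTS)]
token = [0.5, -1.0, 0.25, 2.0]

output, chosen = moe_forward(token, router_weights, experts, top_k=2)
print("experts used for this token:", chosen)
print("layer output:", output)
```

Because only `top_k` of the 8 experts run for each token, the number of parameters active per token is much smaller than the total parameter count, which is the main appeal of this design.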

Meta AI’s Llama 3 Models

The Llama 3 models from Meta AI are a significant advance in openly available LLMs. They build on Llama 2, with the main architectural changes being a larger vocabulary (about 128K tokens, up from 32K) and the use of grouped-query attention in the smaller model as well.

Key Features:

- Trained on a much larger dataset of 15 trillion tokens, up from 2 trillion for Llama 2

- Available in 8B and 70B parameter sizes
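Grouped-query attention lets several query heads share one key/value head, which shrinks the key/value cache at inference time. The sketch below illustrates the head mapping and the resulting cache size. The configuration values (32 layers, 32 query heads, 8 key/value heads, head dimension 128, 16-bit values) are illustrative assumptions rather than figures from this article, and the function names are hypothetical.

```python
def kv_head_for_query_head(query_head, n_query_heads, n_kv_heads):
    """Return the index of the key/value head that a given query head reads from."""
    if n_query_heads % n_kv_heads != 0:
        raise ValueError("n_query_heads must be divisible by n_kv_heads")
    group_size = n_query_heads // n_kv_heads
    return query_head // group_size


def kv_cache_bytes(n_layers, n_kv_heads, head_dim, seq_len, bytes_per_value=2):
    """Size of the key + value cache for one sequence."""
    # Factor 2: one tensor for keys and one for values.
    return 2 * n_layers * n_kv_heads * head_dim * seq_len * bytes_per_value


# Illustrative configuration (assumed, not taken from the article).
N_LAYERS, N_QUERY_HEADS, N_KV_HEADS, HEAD_DIM, SEQ_LEN = 32, 32, 8, 128, 8192

mapping = [
    kv_head_for_query_head(h, N_QUERY_HEADS, N_KV_HEADS) for h in range(N_QUERY_HEADS)
]
# Standard multi-head attention keeps one key/value head per query head.
mha_bytes = kv_cache_bytes(N_LAYERS, N_QUERY_HEADS, HEAD_DIM, SEQ_LEN)
gqa_bytes = kv_cache_bytes(N_LAYERS, N_KV_HEADS, HEAD_DIM, SEQ_LEN)

print("kv head for the first 8 query heads:", mapping[:8])
print("cache with multi-head attention (GiB):", mha_bytes / 2**30)
print("cache with grouped-query attention (GiB):", gqa_bytes / 2**30)
```

With these assumed numbers, every group of 4 query heads shares one key/value head, so the cache is 4 times smaller than with standard multi-head attention.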

Microsoft’s Phi-3 LLM

Microsoft’s Phi-3 LLM, which is based on the Llama architecture, has drawn attention for its strong reported performance despite being trained on far fewer tokens than Llama 3. Phi-3 emphasizes dataset quality over quantity.

Key Points:

- Reported to outperform Llama 3 despite a smaller training set of 3.3 trillion tokens, roughly 4.5 times fewer than Llama 3's 15 trillion

- Trained on heavily filtered web data and synthetic data

Apple’s OpenELM LLM Family

Apple’s OpenELM is a family of small LLMs designed for deployment on mobile devices. It is accompanied by efficient training and inference frameworks and aims to make LLMs accessible for a wide range of applications.

Key Features of OpenELM:

- Available in 270M, 450M, 1.1B, and 3B sizes

- Includes instruction-tuned (instruct) variants trained with rejection sampling and direct preference optimization (DPO)
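Rejection sampling, as mentioned in the last point, generally means drawing several candidate responses for a prompt, scoring them, and keeping only the best one as training data. The sketch below shows that loop in its simplest best-of-n form. It is not Apple's pipeline: `toy_generate` and `toy_reward` are hypothetical stand-ins for an LLM sampler and a reward model, and the canned responses are made up for illustration.

```python
import random


def rejection_sample(prompt, generate, reward, num_candidates=4, seed=0):
    """Sample several responses and keep the one with the highest reward."""
    rng = random.Random(seed)
    candidates = [generate(prompt, rng) for _ in range(num_candidates)]
    scores = [reward(prompt, candidate) for candidate in candidates]
    best_index = max(range(num_candidates), key=lambda i: scores[i])
    return candidates[best_index], scores[best_index], candidates


# Hypothetical canned outputs standing in for samples from an LLM.
CANNED = [
    "I don't know.",
    "Paris.",
    "The capital of France is Paris.",
    "France is a country in Europe.",
]


def toy_generate(prompt, rng):
    """Stand-in for sampling a response from a model (assumption)."""
    return rng.choice(CANNED)


def toy_reward(prompt, response):
    """Stand-in for a reward model (assumption): favors complete, correct answers."""
    score = 0.0
    if "Paris" in response:
        score += 1.0
    if response.startswith("The capital"):
        score += 0.5
    return score


best, best_score, candidates = rejection_sample(
    "What is the capital of France?", toy_generate, toy_reward, num_candidates=8
)
print("kept response:", best)
print("reward:", best_score)
```

The kept (prompt, response) pairs can then be used for further supervised fine-tuning, while the rejected candidates are discarded.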

DPO vs. PPO for LLM Alignment

A comprehensive study examines how effective Direct Preference Optimization (DPO) and Proximal Policy Optimization (PPO) are for LLM alignment via reinforcement learning from human feedback (RLHF). The study suggests that PPO generally outperforms DPO, although the best choice in practice depends on implementation details and the task at hand.

Main Findings:

- PPO outperforms DPO when it is implemented and tuned correctly

- DPO can struggle with out-of-distribution data and may need an additional supervised fine-tuning step on the preference data for good results
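To show how the two methods differ mechanically, the sketch below writes out the standard per-example DPO loss and the PPO clipped surrogate objective for scalar inputs. This is a minimal illustration of the textbook formulas, not the code used in the study. The inputs are assumed to be sequence log-probabilities and an advantage estimate that are already computed, and the default values `beta=0.1` and `clip_eps=0.2` are common choices used here as assumptions.

```python
import math


def dpo_loss(policy_chosen_logp, policy_rejected_logp,
             ref_chosen_logp, ref_rejected_logp, beta=0.1):
    """Per-example DPO loss: -log(sigmoid(beta * (chosen_ratio - rejected_ratio)))."""
    # Log-ratios of the trained policy against the frozen reference model.
    chosen_logratio = policy_chosen_logp - ref_chosen_logp
    rejected_logratio = policy_rejected_logp - ref_rejected_logp
    margin = beta * (chosen_logratio - rejected_logratio)
    # -log(sigmoid(margin)) = log(1 + exp(-margin)), written in a stable form.
    return max(-margin, 0.0) + math.log1p(math.exp(-abs(margin)))


def ppo_clipped_objective(new_logp, old_logp, advantage, clip_eps=0.2):
    """Per-sample PPO clipped surrogate objective (to be maximized)."""
    ratio = math.exp(new_logp - old_logp)
    clipped_ratio = min(max(ratio, 1.0 - clip_eps), 1.0 + clip_eps)
    # Taking the minimum removes the incentive to move the policy too far.
    return min(ratio * advantage, clipped_ratio * advantage)


# DPO needs only preference pairs and a reference model, no reward model.
untrained = dpo_loss(-2.0, -2.0, -2.0, -2.0)
improved = dpo_loss(-1.0, -3.0, -2.0, -2.0)
print("DPO loss when policy equals reference:", untrained)
print("DPO loss when policy prefers the chosen response:", improved)

# PPO needs an advantage estimate, typically derived from a reward model.
print("PPO objective, large ratio, positive advantage:",
      ppo_clipped_objective(1.0, 0.0, 1.0))
print("PPO objective, large ratio, negative advantage:",
      ppo_clipped_objective(1.0, 0.0, -1.0))
```

DPO optimizes directly on fixed preference pairs, whereas PPO scores freshly sampled responses during training, which is one intuition for why DPO may be more sensitive to out-of-distribution data.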

Conclusion

With several major model releases and new alignment research, April 2024 was a significant month for transformer-based LLMs. Each model discussed here has distinct strengths and target applications, from large mixture-of-experts models to small models for mobile devices.