In Short:

Researchers are exploring AI’s ability to generate video game sequences, using a model called MarioVGG trained on the classic Super Mario Bros. While the model can generate basic video of Mario running and jumping, it suffers from glitches and is too slow for real-time play. Despite these limitations, the project is an early proof of concept for AI-generated game video, which its authors hope could eventually change how games are developed.

Last month, Google’s GameNGen AI model showed that generalized image diffusion techniques can produce a playable version of Doom. Researchers are now applying similar methods in a model named MarioVGG to see whether AI can generate plausible Super Mario Bros. video in response to user inputs.

Model Performance and Capabilities

The findings from the MarioVGG model, detailed in a preprint paper released by the AI company Virtuals Protocol, reveal several limitations, including noticeable glitches and generation speeds too slow for real-time gameplay. Despite these challenges, the results indicate that even a constrained model can infer impressive physics and gameplay dynamics from a modest dataset.

Aiming for Future Developments

The researchers envision this as a foundational step towards creating a “reliable and controllable video game generator”, and potentially “replacing game development and game engines” with video generation models in the long term.

Training with Extensive Data

To develop their model, the MarioVGG team (comprising GitHub contributors erniechew and Brian Lim) used a public dataset of Super Mario Bros. gameplay, encompassing 280 levels with over 737,000 frames of input and image data. The researchers preprocessed these frames into 35-frame chunks so the model could learn what the immediate results of various inputs generally look like.
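The chunking step can be pictured with a short Python sketch. This is an illustration under stated assumptions, not the researchers' code: the function name `chunk_frames` is hypothetical, and the choice of consecutive, non-overlapping chunks (with any partial chunk at the end dropped) is an assumption, since the article only gives the 35-frame chunk length.

```python
from typing import List, Sequence, TypeVar

T = TypeVar("T")

CHUNK_LEN = 35  # chunk length reported in the article


def chunk_frames(frames: Sequence[T], chunk_len: int = CHUNK_LEN) -> List[Sequence[T]]:
    """Split a recording into consecutive, non-overlapping chunks.

    Assumption: a trailing partial chunk is dropped so that every
    training example has exactly `chunk_len` frames.
    """
    n_full = len(frames) // chunk_len
    return [frames[i * chunk_len:(i + 1) * chunk_len] for i in range(n_full)]


if __name__ == "__main__":
    recording = list(range(100))  # stand-in for 100 recorded frames
    chunks = chunk_frames(recording)
    print(len(chunks), len(chunks[0]))  # 2 35
```

A sliding window with overlap would yield more training examples from the same footage; the non-overlapping version is used here only because it is the simplest reading of "35-frame chunks."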

Simplified Gameplay Dynamics

To simplify the problem, the researchers focused on only two input types: “run right” and “run right and jump.” Even this narrow input set posed challenges for the machine-learning system, since the preprocessing had to look back several frames to determine when a movement actually started. Any jumps involving mid-air adjustments were excluded to keep the training data clean.
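As a rough illustration of that backward look, the sketch below walks back from a jump frame to find where the rightward run began. Everything here is hypothetical: the per-frame `right_held` flags, the function name `find_run_start`, and the `max_lookback` limit are assumptions made for the example, not details from the paper.

```python
from typing import List, Optional


def find_run_start(
    right_held: List[bool], jump_idx: int, max_lookback: int = 8
) -> Optional[int]:
    """Return the index where the current run to the right began.

    Walks backward from the jump frame while "right" is still held,
    stopping after `max_lookback` frames. Returns None if "right" is
    not held on the jump frame itself.
    """
    if not right_held[jump_idx]:
        return None
    start = jump_idx
    lowest = max(0, jump_idx - max_lookback)
    while start > lowest and right_held[start - 1]:
        start -= 1
    return start


if __name__ == "__main__":
    held = [False, False, True, True, True, True]
    print(find_run_start(held, jump_idx=5))  # 2
```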

Generating Video Sequences

Following a preprocessing phase and approximately 48 hours of training on a single RTX 4090 graphics card, the researchers applied a standard convolution and denoising process to create new video frames based on a static game image and textual inputs. Although the generated sequences are limited to a few frames, they can be concatenated to produce longer videos that maintain a degree of coherent gameplay.
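One way to picture the concatenation is a loop in which each short clip is seeded by the final frame of the previous one. The Python sketch below assumes that seeding scheme and uses a stand-in generator; `generate_long_video` and `fake_clip` are hypothetical names, and no real model is called.

```python
from typing import Callable, List, TypeVar

Frame = TypeVar("Frame")

FRAMES_PER_CLIP = 7  # illustrative clip length, taken from the article


def generate_long_video(
    seed: Frame,
    actions: List[str],
    generate_clip: Callable[[Frame, str], List[Frame]],
) -> List[Frame]:
    """Chain short clips into one longer video.

    Assumption: each clip is conditioned on the last frame of the
    previous clip plus a text action such as "run right".
    """
    video = [seed]
    current = seed
    for action in actions:
        clip = generate_clip(current, action)
        video.extend(clip)
        current = clip[-1]  # last frame seeds the next clip
    return video


def fake_clip(frame: int, action: str) -> List[int]:
    """Stand-in for the model: frames are just increasing integers."""
    return [frame + i for i in range(1, FRAMES_PER_CLIP + 1)]


if __name__ == "__main__":
    video = generate_long_video(0, ["run right", "run right and jump"], fake_clip)
    print(len(video), video[-1])  # 15 14
```

Because every clip depends on the one before it, any glitch in a generated frame carries forward into the rest of the video, which is one reason coherence is only maintained to a degree.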

Video Quality and Limitations

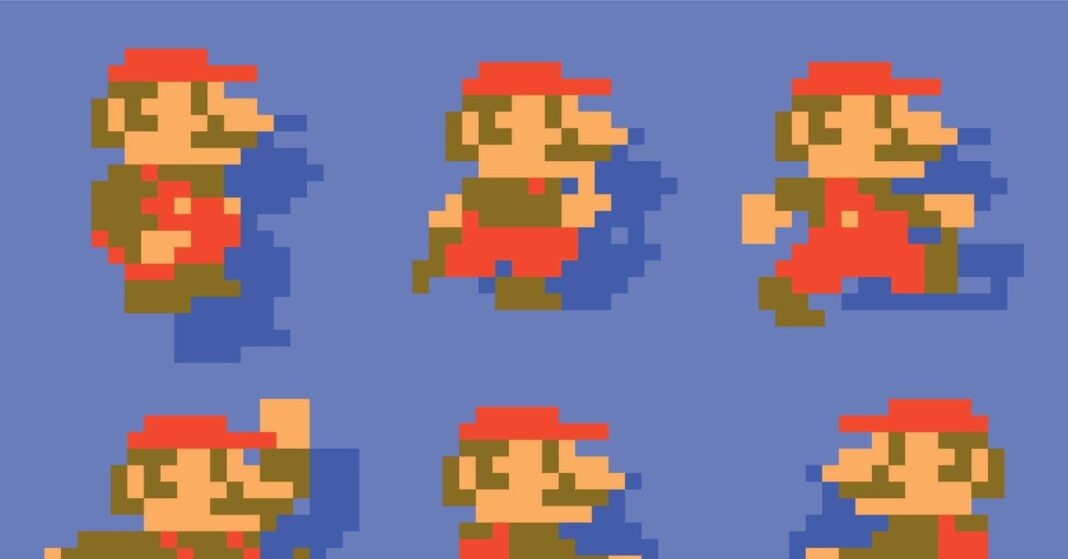

Despite these advancements, the MarioVGG model does not produce high-quality video comparable to traditional NES games. To enhance efficiency, the output frames have been downscaled from the original NES resolution of 256×240 to a muddier 64×48. Additionally, the model compresses 35 frames of gameplay into just seven generated frames, resulting in a rougher appearance. Currently, the generation of a six-frame video sequence takes up to six seconds, rendering it impractical for real-time applications.
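The figures above can be put side by side with some quick arithmetic. The short Python sketch below uses only the numbers quoted in the article, plus one outside assumption: that the NES runs at roughly 60 frames per second. The function name `summarize` is hypothetical.

```python
def summarize(nes_fps: float = 60.0) -> dict:
    """Back-of-the-envelope numbers from the figures in the article.

    Assumption: the NES outputs roughly 60 frames per second.
    """
    nes_pixels = 256 * 240           # original NES frame
    out_pixels = 64 * 48             # downscaled model output
    temporal_stride = 35 // 7        # game frames per generated frame
    gen_fps = 6 / 6.0                # six frames in (up to) six seconds
    needed_fps = nes_fps / temporal_stride
    return {
        "pixel_reduction": nes_pixels / out_pixels,
        "temporal_stride": temporal_stride,
        "generated_fps": gen_fps,
        "fps_needed_for_real_time": needed_fps,
        "slowdown_factor": needed_fps / gen_fps,
    }


if __name__ == "__main__":
    for name, value in summarize().items():
        print(f"{name}: {value}")
```

Under these assumptions, each output frame carries 20 times fewer pixels than an NES frame and stands in for five frames of gameplay, yet generation would still need to be about 12 times faster to keep pace with the game.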

Limitations and Glitches

As with many probabilistic AI models, MarioVGG has quirks that can lead to unhelpful outputs. The model occasionally ignores user input prompts, and visual glitches can occur, such as Mario interacting with obstacles in unrealistic ways. One of the shared examples shows a particularly odd case in which Mario transforms into a Cheep-Cheep while falling through a bridge.

Future Enhancements

The researchers believe that extending training time and incorporating diverse gameplay data could alleviate some of the significant issues encountered by MarioVGG. Nevertheless, this project stands as a compelling proof of concept, showcasing that even with limited training data, effective models for basic video game simulation can be developed.

This information originally appeared on Ars Technica.