In Short:

MIT researchers developed a tool called SymGen to help people quickly verify information generated by large language models (LLMs). LLMs often make errors, and checking their responses can be tedious. SymGen simplifies this process by linking portions of a response directly to the exact source data they came from, which makes facts easier to check. In a user study, it sped up verification by about 20 percent, boosting confidence in AI outputs.

Large language models (LLMs) are remarkably capable, but they have shortcomings. One significant issue, known as “hallucination,” occurs when a model generates incorrect or unsupported information in response to a query.

Because inaccuracies can have serious consequences, particularly in high-stakes fields such as healthcare and finance, LLM responses are frequently checked by human fact-checkers. Unfortunately, existing validation processes can be tedious and error-prone: they often require people to read through long documents cited by the model. That burden can discourage users from deploying generative AI at all.

Introduction of SymGen

To address this challenge, MIT researchers have developed a user-friendly system called SymGen. It lets users quickly check the accuracy of an LLM’s response because its citations point precisely to the relevant place in a source document, such as a specific cell in a database.

Users can hover over highlighted portions of a response to see the data the model used to generate a specific word or phrase. Unhighlighted portions, by contrast, show which phrases need closer scrutiny to verify their accuracy.

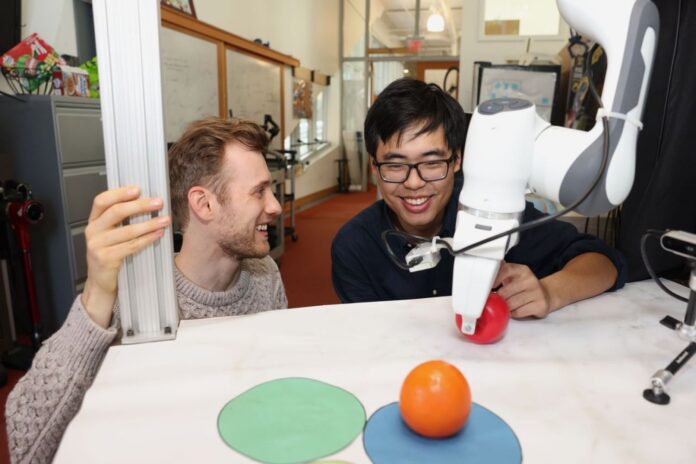

“We provide users the ability to selectively focus on parts of the text that require closer examination. Ultimately, SymGen enhances user confidence in the model’s responses by facilitating a straightforward verification process,” remarked Shannon Shen, an electrical engineering and computer science graduate student and co-lead author of a research paper on SymGen.
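To make the highlighting idea concrete, here is a minimal Python sketch. It assumes the response has already been linked to the source as non-overlapping character spans of the form (start, end, cell id); that representation, cell names such as `home.team`, and the functions `span_of` and `segment` are hypothetical illustrations, not SymGen’s actual interface, and the game data are made up. Segments tied to a cell correspond to highlighted text; segments with no cell are the ones a reader would need to check by hand.

```python
from typing import List, Optional, Tuple

# Hypothetical span format: (start, end, source cell id).
Span = Tuple[int, int, str]


def span_of(text: str, phrase: str, cell: str) -> Span:
    """Locate the first occurrence of phrase in text and tag it with a cell id."""
    start = text.index(phrase)
    return (start, start + len(phrase), cell)


def segment(text: str, spans: List[Span]) -> List[Tuple[str, Optional[str]]]:
    """Split text into (segment, cell) pairs; cell is None for unhighlighted text.

    Assumes spans do not overlap.
    """
    segments: List[Tuple[str, Optional[str]]] = []
    cursor = 0
    for start, end, cell in sorted(spans):
        if cursor < start:
            segments.append((text[cursor:start], None))  # no source cell: verify by hand
        segments.append((text[start:end], cell))  # highlighted: hovering would show the cell
        cursor = end
    if cursor < len(text):
        segments.append((text[cursor:], None))
    return segments


# Made-up response and spans for illustration.
text = "The Portland Trailblazers won easily, scoring 112 points."
spans = [
    span_of(text, "Portland Trailblazers", "home.team"),
    span_of(text, "112", "home.points"),
]
for seg, cell in segment(text, spans):
    label = f"[{cell}]" if cell else "[verify manually]"
    print(f"{seg!r:28} {label}")
```

Here the phrase “won easily” has no source cell, so it is exactly the kind of unhighlighted claim a verifier should examine.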

Efficiency in Verification

A user study conducted by Shen and his collaborators found that SymGen reduced verification time by approximately 20 percent compared with traditional methods. Because it speeds up validation for human users, SymGen could help people catch LLM errors in a variety of real-world applications, from generating clinical notes to summarizing financial markets.

Shen is joined on the paper by co-lead author and fellow EECS graduate student Lucas Torroba Hennigen; EECS graduate student Aniruddha “Ani” Nrusimha; Bernhard Gapp, president of the Good Data Initiative; and senior authors David Sontag, a professor of EECS and a member of the MIT Jameel Clinic, and Yoon Kim, an assistant professor of EECS and a member of the Computer Science and Artificial Intelligence Laboratory (CSAIL). The research was recently presented at the Conference on Language Modeling.

The Role of Symbolic References

Many LLMs are designed to generate citations alongside their responses so that users can validate them, yet these verification systems are usually built without much thought for the effort it takes people to sift through numerous citations. “Generative AI should minimize the time users spend on tasks. If verifying model outputs requires extensive reading of documents, the utility of the AI is diminished,” Shen stated.

The researchers approached the validation problem from the perspective of the people who must do the checking, adding an intermediate step to how the model references data. A SymGen user first gives the LLM data to reference, such as a table of statistics. Rather than generating a response directly, the model is prompted to write its output in a symbolic form that refers precisely to the source data.

For example, when the model needs to reference the phrase “Portland Trailblazers,” it cites the corresponding cell in the data table rather than outputting the phrase directly. “By using this intermediate symbolic format, we achieve fine-grained references, allowing us to pinpoint the data correspondence for every section of text in the output,” explained Torroba Hennigen.
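A symbolic response still has to be turned into readable text. One straightforward way to do this, assumed here rather than taken from SymGen’s code, is a rule-based pass that replaces each reference with the cell’s text copied verbatim and records where each substitution lands in the output. The `{row.column}` reference syntax, the `resolve` function, and the scores in the table are all hypothetical.

```python
import re
from typing import Dict, List, Tuple

# Hypothetical source table: row id -> {column name -> cell value}.
Table = Dict[str, Dict[str, str]]

# Hypothetical reference syntax: {row.column}, e.g. {home.team}.
REF_PATTERN = re.compile(r"\{(\w+)\.(\w+)\}")


def resolve(symbolic: str, table: Table) -> Tuple[str, List[Tuple[int, int, str]]]:
    """Replace each {row.column} reference with the cell's text, copied verbatim.

    Returns the final text plus (start, end, cell_id) spans so every
    substituted phrase can be traced back to its source cell.
    """
    parts: List[str] = []
    spans: List[Tuple[int, int, str]] = []
    cursor = 0  # position in the symbolic string
    length = 0  # length of the output built so far
    for match in REF_PATTERN.finditer(symbolic):
        literal = symbolic[cursor:match.start()]  # free text the model wrote itself
        parts.append(literal)
        length += len(literal)
        row, col = match.group(1), match.group(2)
        value = table[row][col]  # raises KeyError if the model cites a nonexistent cell
        spans.append((length, length + len(value), f"{row}.{col}"))
        parts.append(value)
        length += len(value)
        cursor = match.end()
    parts.append(symbolic[cursor:])
    return "".join(parts), spans


# Made-up values for illustration.
table = {
    "home": {"team": "Portland Trailblazers", "points": "112"},
    "away": {"team": "Utah Jazz", "points": "104"},
}
symbolic = "The {home.team} beat the {away.team}, scoring {home.points} points."
text, spans = resolve(symbolic, table)
print(text)
for start, end, cell in spans:
    print(f"{text[start:end]!r} <- {cell}")
```

Because each value is copied rather than regenerated, every recorded span points to exactly one cell, which is what makes fine-grained highlighting possible. A reference to a cell that does not exist fails loudly here, but a reference to the wrong yet valid cell would pass unnoticed.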

Streamlining Validation

LLMs can produce symbolic responses because of how they are trained: they ingest vast amounts of data from the internet, some of which is recorded in a “placeholder format” where codes stand in for actual values. SymGen structures its prompts in a similar way to draw on this ability.
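The sketch below shows one hypothetical way a prompt could expose a table in placeholder form: each cell is listed under a name, and the model is asked to write names instead of values. The wording, the `{row.column}` naming scheme, and `build_symbolic_prompt` are assumptions for illustration; the article does not publish SymGen’s actual prompt, and the call to the LLM itself is omitted.

```python
from typing import Dict

# Hypothetical table: row id -> {column name -> cell value}; values are made up.
Table = Dict[str, Dict[str, str]]


def build_symbolic_prompt(table: Table, task: str) -> str:
    """List every cell under a {row.column} placeholder and ask the model to
    write placeholders instead of copying values (hypothetical wording)."""
    lines = ["Data (each cell is listed as {row.column} = value):"]
    for row, cells in table.items():
        for col, value in cells.items():
            lines.append(f"{{{row}.{col}}} = {value}")  # e.g. {home.team} = ...
    first_row = next(iter(table))
    first_col = next(iter(table[first_row]))
    lines.append("")
    lines.append(
        "Whenever you use a value from the data, write its placeholder "
        f"(e.g. {{{first_row}.{first_col}}}) instead of the value itself."
    )
    lines.append(f"Task: {task}")
    return "\n".join(lines)


table = {
    "home": {"team": "Portland Trailblazers", "points": "112"},
    "away": {"team": "Utah Jazz", "points": "104"},
}
print(build_symbolic_prompt(table, "Summarize the game in one sentence."))
```

Keeping placeholder names short and regular makes them easy for a simple rule-based pass to find and replace in the model’s output.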

In the user study, most participants said SymGen made LLM-generated text significantly easier to verify, and they validated model outputs approximately 20 percent faster than with conventional methods. However, SymGen is only as reliable as its source data. The LLM could cite incorrect information, and a human verifier might not notice the mistake.

In addition, SymGen requires users to provide source data in a structured format, such as a table. At present, the system is limited to tabular data, but the researchers are working to extend it to arbitrary text and other data formats. Future applications could include validating AI-generated summaries of legal documents or checking AI-generated clinical summaries for errors before they reach medical professionals.

This research is partially supported by Liberty Mutual and the MIT Quest for Intelligence Initiative.