In Short:

MIT researchers found that large language models (LLMs) can detect anomalies in time-series data, such as the signals recorded by wind turbines, offering a cost-saving alternative to traditional deep-learning methods. They developed a framework called SigLLM, which converts time-series data into text for the model to analyze. While LLMs don’t yet match state-of-the-art models, they performed comparably to several other AI methods and could simplify anomaly detection in a range of fields.

Identifying a single faulty turbine in a wind farm can mean analyzing hundreds of signals and millions of data points, a task akin to finding a needle in a haystack. Engineers often tackle this complexity with deep-learning models, which can detect anomalies in time-series data, the measurements each turbine takes continuously over time.

However, with hundreds of wind turbines recording numerous signals every hour, training a deep-learning model to analyze time-series data is both costly and cumbersome. The problem is compounded by the fact that the model may need to be retrained after deployment, and wind farm operators may lack the necessary machine-learning expertise.

Innovative Solution: Large Language Models

In a recent study, MIT researchers found that large language models (LLMs) have the potential to be more efficient anomaly detectors for time-series data. Importantly, these pretrained models can be deployed right away, with no fine-tuning required.

The researchers introduced a framework called SigLLM, which incorporates a component capable of converting time-series data into text-based inputs suitable for LLM processing. Users can then input this prepared data into the model, prompting it to identify anomalies. Additionally, the LLM can forecast future time-series data points as part of the anomaly detection pipeline.

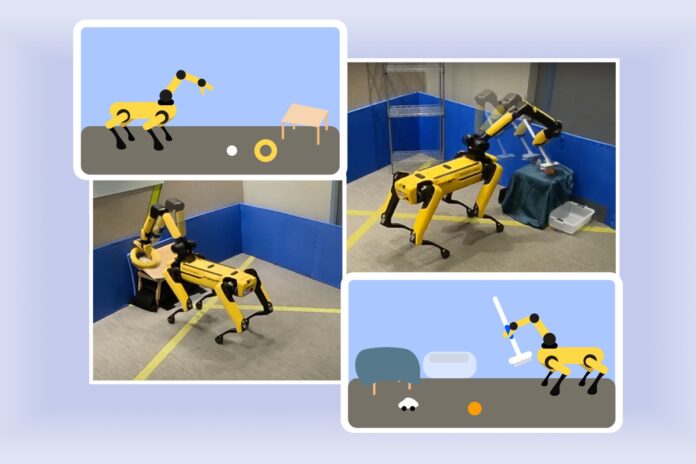

While LLMs did not outperform state-of-the-art deep-learning models in anomaly detection, their performance was comparable to several other AI methods. If researchers can improve LLM performance, this framework could help technicians flag potential problems in equipment such as heavy machinery or satellites before they occur, without the need to train a costly deep-learning model.

“Since this is just the first iteration, we didn’t expect to achieve perfection initially, but these results indicate a promising opportunity to leverage LLMs for complex anomaly detection tasks,” stated Sarah Alnegheimish, a graduate student in electrical engineering and computer science (EECS) and the lead author of a paper on SigLLM.

Her co-authors include Linh Nguyen, also an EECS graduate student; Laure Berti-Equille, a research director at the French National Research Institute for Sustainable Development; and Kalyan Veeramachaneni, a principal research scientist in the Laboratory for Information and Decision Systems. Their research is set to be presented at the IEEE Conference on Data Science and Advanced Analytics.

An Off-the-Shelf Solution

Large language models are autoregressive, meaning they can recognize that the latest values in sequential data depend on prior values. For instance, models such as GPT-4 can predict the next word in a sentence based on the preceding words.

Because time-series data are sequential, the researchers reasoned that the autoregressive nature of LLMs might make them well suited to detecting anomalies in this type of data. They wanted a technique that avoids fine-tuning; instead, they use an LLM as is, with no additional training.

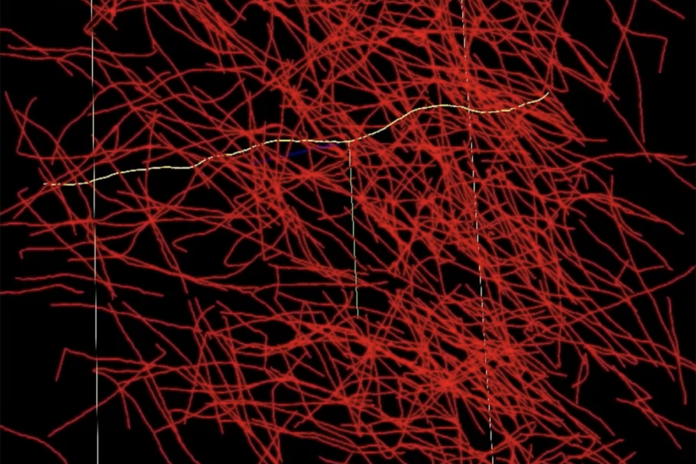

To use an LLM this way, the researchers first had to convert time-series data into text-based inputs the language model can handle. They did this through a series of transformations that capture the most important parts of the time series while using as few tokens as possible. Tokens are the basic inputs for an LLM, and more tokens require more computation.

“If you don’t handle these steps meticulously, you might inadvertently omit critical data, thereby losing important information,” Alnegheimish emphasized.
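As an illustration only, this kind of conversion can be sketched in Python. The article does not list the specific transformations SigLLM applies, so the steps here (shifting values to be non-negative, keeping a fixed number of decimal places, and joining the resulting integers with commas) are assumptions, and `series_to_text` and `text_to_series` are hypothetical names rather than part of SigLLM.

```python
def series_to_text(values, decimals=2):
    """Turn a list of floats into a compact comma-separated string.

    Assumed steps (not confirmed by the article):
    1. Shift by the minimum so every value is non-negative (no minus signs).
    2. Scale by 10**decimals and round, so no decimal points are needed.
    3. Join the integers with commas.
    Returns the text plus the offset needed to reverse the shift.
    """
    lowest = min(values)
    scale = 10 ** decimals
    integers = [round((v - lowest) * scale) for v in values]
    return ",".join(str(i) for i in integers), lowest


def text_to_series(text, lowest, decimals=2):
    """Reverse series_to_text, up to the rounding applied there."""
    scale = 10 ** decimals
    return [int(token) / scale + lowest for token in text.split(",")]


text, offset = series_to_text([1.5, 2.25, 1.0])
print(text)  # 50,125,0
```

Rounding to a fixed number of decimals is where information can be lost, which is the kind of trade-off the quote above warns about: fewer digits mean fewer tokens but a coarser signal.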

Two Approaches to Anomaly Detection

With a clear method for transforming time-series data established, the researchers developed two approaches for anomaly detection.

In the first approach, termed Prompter, the prepared data is fed into the model, which is prompted to identify anomalous values. “We had to iterate multiple times to ascertain the correct prompts for specific time series. Understanding how these LLMs ingest and process data is challenging,” Alnegheimish noted.
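A rough sketch of what a Prompter-style exchange might involve is shown below. The prompt wording is invented for illustration (the article does not give the prompts the researchers settled on), no real LLM is called, and `build_prompt` and `parse_indices` are hypothetical helpers, not SigLLM functions.

```python
import re


def build_prompt(series_text):
    """Wrap the text-encoded series in an instruction (hypothetical wording)."""
    return (
        "Below is a comma-separated sequence of values from a time series.\n"
        f"{series_text}\n"
        "List the zero-based positions of any anomalous values as integers."
    )


def parse_indices(response, length):
    """Pull unique, in-range positions out of a free-text model response."""
    found = {int(token) for token in re.findall(r"\d+", response)}
    return sorted(i for i in found if i < length)


prompt = build_prompt("50,125,0")
# The response string here is made up; a real one would come from the LLM.
print(parse_indices("Anomalies at [3, 7, 42]", length=10))  # [3, 7]
```

Because the model answers in free text, the reply has to be parsed and checked against the series length before it can be trusted.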

The second approach, named Detector, employs the LLM as a forecaster to predict the next value in a time series. Here, the researchers compare the predicted value to the actual value; a significant discrepancy indicates that the real value is likely an anomaly. In practice, Detector proved superior to Prompter, which generated many false positives.
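The forecast-and-compare idea can be sketched as follows. This is a minimal illustration under stated assumptions: `naive_forecast` is a simple stand-in for the LLM forecaster, and the rule for what counts as a "significant discrepancy" (error above the mean error plus `k` standard deviations) is an assumption, not the threshold the researchers used.

```python
from statistics import mean, pstdev


def naive_forecast(history):
    """Placeholder for the LLM forecaster: predict the mean of the window."""
    return mean(history)


def detect(values, forecast=naive_forecast, window=3, k=2.0):
    """Flag positions whose forecast error is unusually large.

    For each point after the first `window` values, forecast it from the
    preceding window and record the absolute error. A point is flagged when
    its error exceeds mean(errors) + k * std(errors) (assumed rule).
    """
    errors = []
    for i in range(window, len(values)):
        predicted = forecast(values[i - window:i])
        errors.append(abs(values[i] - predicted))
    threshold = mean(errors) + k * pstdev(errors)
    return [i + window for i, err in enumerate(errors) if err > threshold]


signal = [1.0] * 20
signal[10] = 10.0  # inject a single spike
print(detect(signal))  # [10]
```

Any forecaster with the same call signature could be passed as `forecast`, which is where an LLM-based predictor would plug in.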

“I believe that with the Prompter approach, we were challenging the LLM too extensively, making it tackle a more complex problem,” explained Veeramachaneni.

When the two approaches were compared with current techniques, Detector outperformed transformer-based AI models on seven of the 11 datasets evaluated, even though the LLM required no training or fine-tuning.

Looking ahead, the researchers want to see whether fine-tuning can improve performance, though that would require additional time, cost, and expertise for training. Their LLM approaches currently take between 30 minutes and two hours to produce results, so increasing speed is a key area for future work. They also plan to probe LLMs more deeply to understand how they perform anomaly detection, in the hope of finding ways to boost their performance.

“When contemplating complex tasks such as anomaly detection in time series, LLMs certainly emerge as formidable contenders. It raises the question of whether other intricate challenges could also be addressed using LLMs,” Alnegheimish remarked.

This research received support from SES S.A., Iberdrola, ScottishPower Renewables, and Hyundai Motor Company.