In Short:

MIT researchers have developed a new technique called “Diffusion Forcing” that improves AI sequence models, combining benefits of next-token and diffusion models. This method allows robots to ignore distractions and perform tasks more accurately, while also generating high-quality videos. By refining how models handle noisy data, Diffusion Forcing enhances decision-making and planning for AI agents, with future applications in robotics and video generation.

Sequence models have become prominent in artificial intelligence (AI) because of their ability to analyze data and predict what comes next. ChatGPT, for example, is built on a next-token prediction model that generates a response by anticipating each subsequent word (token) in a sequence. Full-sequence diffusion models such as Sora take a different route, turning text into realistic visuals by successively “denoising” an entire video sequence.

Recently, researchers from MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) proposed a significant modification to the diffusion training methodology, enhancing flexibility in sequence denoising.

Capability Trade-offs

Next-token models and full-sequence diffusion models each come with trade-offs when applied to areas like computer vision and robotics. Next-token models can generate sequences of varying lengths, but they produce each token without awareness of desirable states far in the future, which makes long-horizon planning difficult. Diffusion models, conversely, can perform future-conditioned sampling but lack the variable-length generation that next-token models offer.

Introducing Diffusion Forcing

To combine the strengths of both model types, the CSAIL researchers developed a training technique called “Diffusion Forcing.” The name comes from “Teacher Forcing,” the conventional training scheme that breaks full-sequence generation into smaller, easier next-token generation steps.
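To make the teacher forcing idea concrete, here is a minimal sketch of how one sequence can be split into next-token training examples. The function name `teacher_forcing_pairs` and the toy sentence are illustrative assumptions, not code from the researchers.

```python
def teacher_forcing_pairs(tokens):
    """Split one sequence into (ground-truth prefix, next token) pairs.

    Each pair is a small prediction problem: given the true tokens so
    far, predict the one that follows.
    """
    return [(tokens[:i], tokens[i]) for i in range(1, len(tokens))]


# Toy example sentence (an assumption, purely for illustration).
pairs = teacher_forcing_pairs(["the", "robot", "moves", "fruit"])
for prefix, target in pairs:
    print(prefix, "->", target)
```

A sequence of four tokens yields three training pairs, and every prefix uses the ground-truth tokens rather than the model's own earlier guesses.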

Diffusion Forcing builds on the common ground between diffusion models and teacher forcing: both use training schemes that predict masked (noisy) tokens from unmasked ones. Diffusion models add noise to data gradually, which can be viewed as fractional masking. Diffusion Forcing trains a neural network to clean up a set of tokens, removing a different amount of noise from each one, while simultaneously predicting the next few tokens. The result is a flexible and reliable sequence model that yields higher-quality AI-generated video and more accurate decision-making for robots and AI agents.
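The idea of giving each token its own noise level can be sketched in a few lines. This is a simplified illustration under stated assumptions: tokens are plain scalars, noise levels lie in [0, 1], and the square-root mixing rule stands in for a variance-preserving diffusion forward process. The helper name `noise_tokens` is hypothetical.

```python
import math
import random


def noise_tokens(tokens, levels, rng):
    """Noise each token by its own level in [0, 1].

    Level 0 leaves a token clean; level 1 replaces it with pure Gaussian
    noise. The square-root mixing is an assumed simplification of a
    variance-preserving forward process, not the authors' exact schedule.
    """
    noisy = []
    for x, k in zip(tokens, levels):
        eps = rng.gauss(0.0, 1.0)
        noisy.append(math.sqrt(1.0 - k) * x + math.sqrt(k) * eps)
    return noisy


rng = random.Random(0)
clean = [0.5, -1.0, 2.0, 0.0]            # toy scalar "tokens"
levels = [rng.random() for _ in clean]   # one independent level per token
noisy = noise_tokens(clean, levels, rng)
```

During training, a network would receive `noisy` together with `levels` and learn to recover `clean`; that model is omitted here.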

Applications of Diffusion Forcing

By methodically sifting through noisy data and accurately anticipating subsequent actions, Diffusion Forcing enables robots to disregard visual distractions and successfully execute manipulation tasks. Additionally, it can produce stable and coherent video sequences while guiding AI agents through complex digital environments. This methodology holds the potential to enhance the adaptability of household and factory robots to new tasks while elevating the quality of AI-generated entertainment.

“Sequence models aim to condition on the known past and predict the unknown future, utilizing a form of binary masking. However, masking does not need to adhere to a binary format,” remarked Boyuan Chen, lead author, PhD student in Electrical Engineering and Computer Science (EECS) at MIT and CSAIL member. “Through Diffusion Forcing, we apply various levels of noise to each token, effectively establishing a form of fractional masking. During testing, our system can ‘unmask’ a collection of tokens and diffuse a sequence for the immediate future at a reduced noise level, allowing it to determine reliable data despite encountering out-of-distribution inputs.”
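One way to read the quote is that a masking pattern is a list of per-token noise levels, with binary masking as a special case. The sketch below writes three patterns in that form; the function names and the [0, 1] level convention are assumptions made for illustration.

```python
import random


def binary_mask_levels(seq_len, context_len):
    """Past/future split: known tokens at level 0, unknown tokens at level 1."""
    return [0.0 if t < context_len else 1.0 for t in range(seq_len)]


def uniform_levels(seq_len, level):
    """Full-sequence diffusion: every token shares one noise level."""
    return [level] * seq_len


def fractional_mask_levels(seq_len, rng):
    """Fractional masking: each token draws its own level in [0, 1)."""
    return [rng.random() for _ in range(seq_len)]


print(binary_mask_levels(5, 3))
print(uniform_levels(5, 0.4))
print(fractional_mask_levels(5, random.Random(0)))
```

Under this view, the first two patterns are restricted choices of noise levels, while the third lets every token vary independently.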

Numerous experiments have demonstrated that Diffusion Forcing excels at ignoring extraneous data to fulfill tasks while anticipating future actions.

Experimentation with Robots

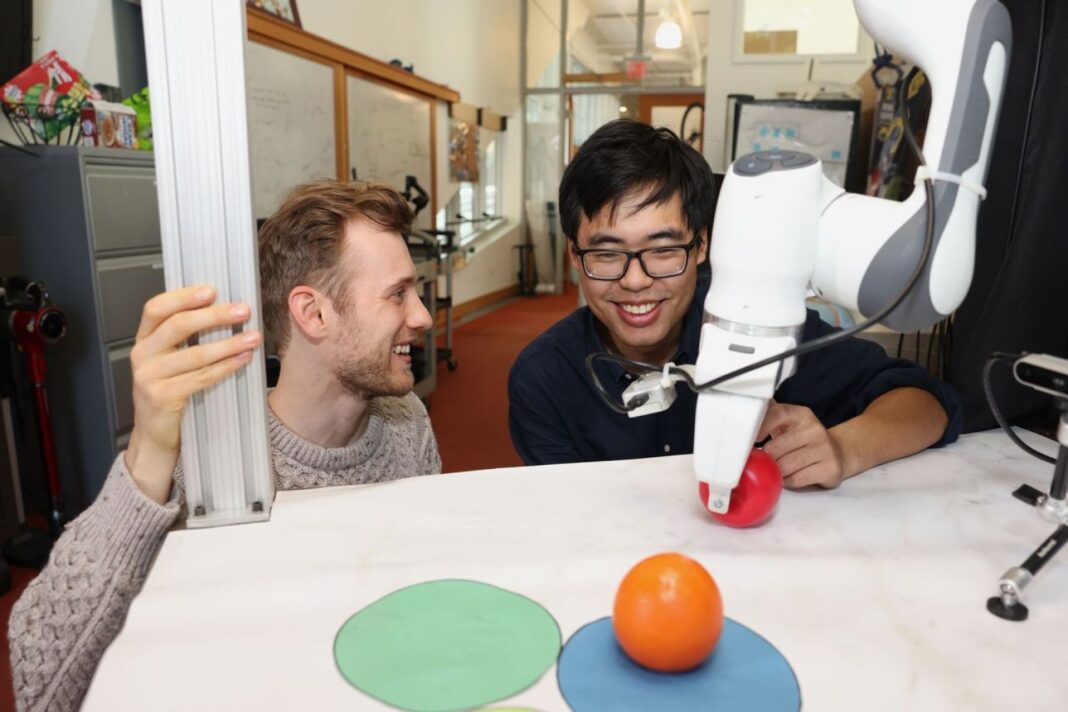

In one experiment, Diffusion Forcing helped a robotic arm swap two toy fruits across three circular mats, a minimal example of the long-horizon tasks that require memory. The researchers trained the robot by teleoperating it in virtual reality, and the robot learned to mimic the operator's movements from its camera input. Despite starting from random positions and facing distractions, such as objects blocking its view, the robot placed the objects in their target spots.

For video generation, Diffusion Forcing was trained on gameplay footage from Minecraft and on colorful digital environments created in Google's DeepMind Lab Simulator. Given a single input frame, the technique produced more stable, higher-resolution videos than comparable baselines, namely a Sora-like full-sequence diffusion model and ChatGPT-like next-token models. Those baselines produced inconsistent videos, and the next-token models sometimes failed to generate working video beyond 72 frames.

Future Prospects

Beyond video generation, Diffusion Forcing can also serve as a motion planner that steers toward desired outcomes or rewards. Its flexibility lets it generate plans with varying time horizons, perform tree search, and account for the fact that the distant future is more uncertain than the near future. In a 2D maze task, Diffusion Forcing outperformed baseline methods by generating faster plans to the goal location, which suggests it could be an effective planner for robots.
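The intuition that the distant future is more uncertain can be expressed as a sampling schedule in which nearer future tokens are denoised ahead of farther ones. The following is a hypothetical sketch of such a staggered schedule; the function name, the linear ramp, and the parameters `horizon` and `steps_per_token` are assumptions, not the researchers' implementation.

```python
def staggered_schedule(horizon, steps_per_token):
    """Return one row of future-token noise levels per denoising step.

    Token j starts denoising at step j and needs `steps_per_token` steps
    to go from level 1.0 (pure noise) to 0.0 (clean), so at any step a
    nearer token is never noisier than a farther one.
    """
    total_steps = horizon - 1 + steps_per_token
    rows = []
    for step in range(total_steps + 1):
        row = []
        for j in range(horizon):
            level = 1.0 - (step - j) / steps_per_token
            row.append(min(1.0, max(0.0, level)))  # clamp to [0, 1]
        rows.append(row)
    return rows


schedule = staggered_schedule(horizon=3, steps_per_token=4)
for row in schedule:
    print(row)
```

Changing `horizon` changes how far ahead the plan extends, which loosely mirrors the variable-horizon planning described above.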

Throughout various demonstrations, Diffusion Forcing seamlessly transitioned between full sequence modeling and next-token prediction. According to Chen, this adaptable framework could ultimately serve as a powerful foundation for a “world model,” an AI system capable of simulating real-world dynamics through training on vast amounts of online video content. This advancement may empower robots to undertake novel tasks based on environmental cues. For instance, if instructed to open a door without prior training, the model could create a visual guide to demonstrate the needed actions.

The research team is currently exploring methods to scale their approach using larger datasets and leading transformer models to enhance performance. Their ambitions include developing a ChatGPT-like robot brain that could enable robots to perform tasks in unfamiliar environments independently, without requiring human demonstration.

“With Diffusion Forcing, we are taking significant strides in bridging video generation and robotics,” stated Vincent Sitzmann, senior author, MIT assistant professor, and CSAIL member. “Ultimately, our goal is to leverage the wealth of information encapsulated in online videos to empower robots in daily life. However, various research challenges remain, particularly in understanding how robots can effectively imitate human actions, even when their physical structures differ significantly from ours!”

Chen and Sitzmann co-authored the paper with recent visiting researcher Diego Martí Monsó and CSAIL affiliates Yilun Du, Max Simchowitz, and Russ Tedrake. The research was funded in part by the U.S. National Science Foundation, the Singapore Defence Science and Technology Agency, and the Intelligence Advanced Research Projects Activity through the U.S. Department of the Interior. The work is set to be presented at NeurIPS in December.