In Short:

MIT researchers have created a “consensus game” to help AI systems understand and generate text more reliably. The idea is to improve a model's ability to give correct and coherent answers by treating the interaction between two parts of the model as a game in which they must agree on the right message. The resulting decoding method, called “equilibrium ranking,” delivered significant improvements across a range of tasks, in some cases outperforming much larger models.

Researchers at the MIT Computer Science and Artificial Intelligence Laboratory (CSAIL) have developed a new approach to help AI systems understand and generate text more effectively. In this approach, called a “consensus game,” two parts of an AI system work together to agree on the right message. By framing this interaction as a game, the researchers significantly improved the AI's ability to provide correct and coherent answers across various tasks.

Game-based Approach

A language model can be queried in two ways: generatively, by asking it to produce an answer, or discriminatively, by asking it to judge whether a candidate answer is correct. These two modes sometimes give conflicting results. The researchers introduced a training-free, game-theoretic method in which a generator and a discriminator, communicating in natural language, work toward a consensus on the right message. The resulting method, called “equilibrium ranking,” showed promising results in improving the reliability and consistency of language models.
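To make the conflict concrete, the sketch below compares the two query modes on made-up scores for a single question. The probabilities are hypothetical, not results from the research, and the helper name `compare_queries` is our own.

```python
from typing import Dict, Tuple


def compare_queries(gen_scores: Dict[str, float],
                    disc_scores: Dict[str, float]) -> Tuple[str, str, bool]:
    """Return the generative top answer, the discriminative top answer,
    and whether the two query modes agree."""
    # Generative query: the answer the model is most likely to produce.
    gen_top = max(gen_scores, key=gen_scores.get)
    # Discriminative query: the answer the model most strongly judges correct.
    disc_top = max(disc_scores, key=disc_scores.get)
    return gen_top, disc_top, gen_top == disc_top


# Hypothetical scores for "What is the capital of France?"
gen_scores = {"Paris": 0.3, "Lyon": 0.6, "Marseille": 0.1}   # P(model generates answer)
disc_scores = {"Paris": 0.9, "Lyon": 0.4, "Marseille": 0.2}  # P(model judges answer correct)

print(compare_queries(gen_scores, disc_scores))  # prints ('Lyon', 'Paris', False)
```

Here the same model would generate one answer but endorse another when asked to verify; a consensus-seeking procedure tries to reconcile the two views instead of trusting either alone.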

Consensus Game System

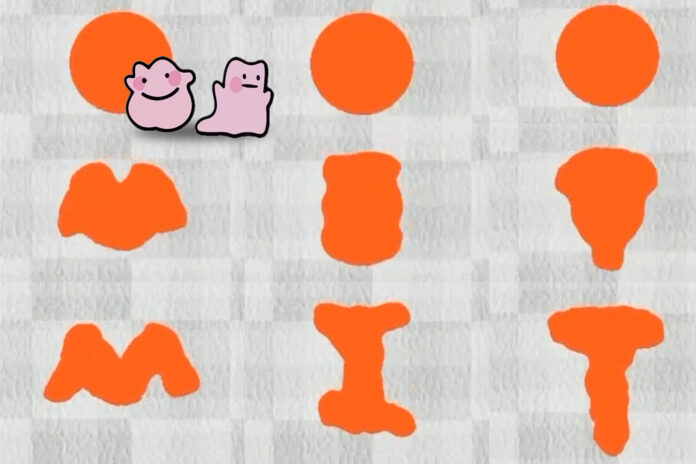

The approach draws on earlier work on the strategic board game “Diplomacy,” in which researchers created “Cicero,” an AI agent that excelled at a seven-player game requiring complex negotiation. The consensus game system aims for accuracy and fidelity by iteratively adjusting the interactions between its generative and discriminative components until they reach a consensus on answers that reflect reality while staying aligned with their initial beliefs.
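The iterative adjustment can be sketched as a small signaling game over a fixed set of candidate answers. This is a simplified illustration under our own assumptions, not the researchers' exact algorithm: a hidden label v ("correct" or "incorrect") is drawn uniformly, the generator picks an answer given v, the discriminator guesses v from the answer, and both are rewarded when the guess is right. Each player repeatedly plays the KL-regularized best response to its average payoff so far, π ∝ π_init · exp(payoff / λ), so a larger λ keeps it closer to its initial beliefs. The function name `equilibrium_rank`, the parameter `lam`, the uniform prior, and the fixed iteration count are hypothetical choices, and no convergence guarantee is claimed.

```python
import numpy as np


def softmax(logits: np.ndarray, axis: int) -> np.ndarray:
    """Numerically stable softmax along one axis."""
    z = logits - logits.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def equilibrium_rank(gen_init, disc_init, lam=1.0, iters=200):
    """Hypothetical sketch of a regularized consensus-game search.

    gen_init:  shape (2, n); gen_init[v, i] = initial P_G(answer i | v),
               where v=0 means "correct" and v=1 "incorrect". Rows sum to 1.
    disc_init: shape (n, 2); disc_init[i, v] = initial P_D(v | answer i).
               Rows sum to 1. All entries must be strictly positive.
    """
    log_g0, log_d0 = np.log(gen_init), np.log(disc_init)
    gen, disc = gen_init.copy(), disc_init.copy()
    q_gen_sum = np.zeros_like(gen_init)
    q_disc_sum = np.zeros_like(disc_init)
    for t in range(1, iters + 1):
        # Generator's payoff for producing answer i when told v:
        # the chance the current discriminator recovers v from answer i.
        q_gen_sum += disc.T
        # Discriminator's payoff for labeling answer i as v:
        # prior P(v) = 1/2 times the chance the generator produced i given v.
        q_disc_sum += 0.5 * gen.T
        # Simultaneous KL-regularized best responses to the average payoffs,
        # anchored at each player's initial policy.
        gen = softmax(log_g0 + (q_gen_sum / t) / lam, axis=1)
        disc = softmax(log_d0 + (q_disc_sum / t) / lam, axis=1)
    # Rank answers by the final discriminator's belief that they are correct.
    ranking = np.argsort(-disc[:, 0])
    return ranking, gen, disc


# Made-up initial policies for three candidate answers.
answers = ["Paris", "Lyon", "Marseille"]
gen_init = np.array([[0.3, 0.6, 0.1],   # P_G(answer | told "correct")
                     [0.4, 0.2, 0.4]])  # P_G(answer | told "incorrect")
disc_init = np.array([[0.9, 0.1],       # P_D(correct, incorrect | "Paris")
                      [0.4, 0.6],       # P_D(correct, incorrect | "Lyon")
                      [0.2, 0.8]])      # P_D(correct, incorrect | "Marseille")

ranking, gen, disc = equilibrium_rank(gen_init, disc_init, lam=0.5)
print([answers[i] for i in ranking])
```

Ranking by the discriminator's final belief is only one option in this sketch; ranking by the generator's final P_G(answer | "correct") would be an equally simple alternative.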

Challenges and Future Directions

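Implementing the consensus game approach involves significant computational challenges, especially for question-answering tasks, because the generative and discriminative components must reach a consensus for every question and each of its candidate answers. And although the system improved results in several areas, it struggled with math word problems: it could not generate plausible wrong answers, which the method relies on to identify the right one. Future work involves enhancing the base model by integrating the current method's outputs, with the aim of improving performance across different tasks.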

Expert Insights

Google Research Scientist Ahmad Beirami highlighted the novelty of the MIT researchers' game-theoretic framework for decoding language models. In his view, the method's significant performance gains could open doors to new applications and possibly a paradigm shift in language model decoding.

Research Recognition

The researchers presented their work at the International Conference on Learning Representations (ICLR), where it was recognized as a “spotlight paper.” The research also received a “best paper award” at the NeurIPS R0-FoMo Workshop in December 2023.