In Short:

Researchers from MIT, Massachusetts General Hospital, and Harvard Medical School have developed “ScribblePrompt,” an AI tool that quickly segments medical images such as MRIs and ultrasounds. Rather than relying on people to mark up countless images by hand, it was trained on simulated user input. The tool is interactive, so users can correct its output, and it reduced annotation time by 28% compared with Meta’s Segment Anything Model (SAM). Neuroimaging researchers in a user study favored it for its accuracy and ease of use.

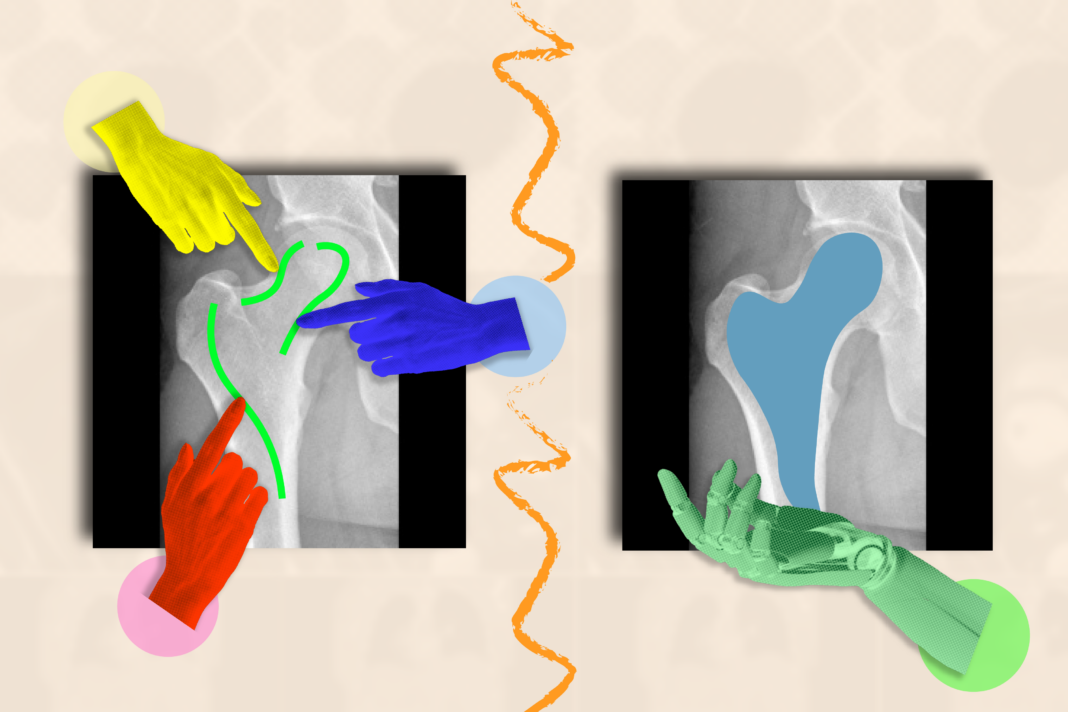

Medical images, such as MRI or X-ray scans, can often look like indistinct black-and-white patterns, making it hard to pick out specific biological structures such as tumors.

AI in Medical Imaging

Artificial intelligence (AI) systems can be trained to define regions of interest in medical images, greatly assisting doctors and biomedical professionals in identifying diseases and abnormalities. By automating the labor-intensive process of tracing anatomical structures across numerous images, these AI systems can save valuable time.

The Challenge of Data Annotation

A significant hurdle in developing effective AI models is the extensive need for labeled images for training. For instance, annotating the cerebral cortex across numerous MRI scans is essential to train a supervised model to comprehend the variations in cortex shapes across different individuals.

Introducing ScribblePrompt

Researchers from MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL), Massachusetts General Hospital (MGH), and Harvard Medical School have introduced an interactive framework called ScribblePrompt. This innovative tool allows for rapid segmentation of various medical images, including those it has not encountered before.

Methodology

Instead of having people mark up each image by hand, the research team simulated how users would annotate more than 50,000 scans, ranging from MRIs to ultrasounds and covering a variety of anatomical structures. They used algorithms to mimic how users would scribble on or click different regions in medical images, and applied superpixel algorithms to identify additional candidate regions of interest. This synthetic approach prepared ScribblePrompt for real-world segmentation requests from users.
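
To make the idea of simulated annotations concrete, the following is a minimal sketch of how a click or scribble could be generated from an existing segmentation mask. It is an illustration only, not the team's actual procedure: the function names `simulate_click` and `simulate_scribble`, the straight-line scribble shape, and the `n_points` parameter are all assumptions made for this example, and the superpixel step is not covered.

```python
import numpy as np

def simulate_click(mask, rng):
    """Return (row, col) of one random foreground pixel, as a stand-in for a user click."""
    ys, xs = np.nonzero(mask)
    if ys.size == 0:
        raise ValueError("mask has no foreground pixels")
    i = rng.integers(ys.size)
    return int(ys[i]), int(xs[i])

def simulate_scribble(mask, rng, n_points=4):
    """Hypothetical scribble: join random foreground pixels with straight
    segments, then keep only the pixels that fall inside the mask."""
    mask = np.asarray(mask, dtype=bool)
    pts = [simulate_click(mask, rng) for _ in range(n_points)]
    scribble = np.zeros(mask.shape, dtype=bool)
    for (y0, x0), (y1, x1) in zip(pts[:-1], pts[1:]):
        # Enough samples that consecutive pixels along the segment are adjacent
        steps = max(abs(y1 - y0), abs(x1 - x0)) + 1
        ys = np.round(np.linspace(y0, y1, steps)).astype(int)
        xs = np.round(np.linspace(x0, x1, steps)).astype(int)
        scribble[ys, xs] = True
    return scribble & mask

# Example: a rectangular "structure" in a 32x32 image
rng = np.random.default_rng(0)
mask = np.zeros((32, 32), dtype=bool)
mask[8:24, 10:20] = True
click = simulate_click(mask, rng)
scribble = simulate_scribble(mask, rng)
```

Because the prompts are derived from masks rather than drawn by a person, many different clicks and scribbles can be produced for the same image, which is the appeal of a simulated approach.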

Impact on Medical Practice

According to Hallee Wong, a PhD student at MIT and the lead author of a recent paper about ScribblePrompt, “AI has significant potential in analyzing images and other high-dimensional data to enhance human productivity. Our goal is to augment the efforts of medical workers through an interactive system.” ScribblePrompt has proved more efficient and accurate than existing interactive segmentation methods, reducing annotation time by 28% compared with Meta’s Segment Anything Model (SAM).

User-Friendly Interface

The ScribblePrompt interface lets users indicate the area to segment with simple scribbles or clicks. For example, users can click on specific veins in a retinal scan or draw a bounding box around a structure. The tool then refines its segmentation based on the user's corrections.
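
The correction step can be illustrated with a small sketch of how a corrective click might be chosen when a prediction disagrees with the intended region. This is a common pattern in interactive segmentation research rather than a description of ScribblePrompt's internals; the function name `corrective_click` and the rule of targeting the larger error type are assumptions for this example.

```python
import numpy as np

def corrective_click(pred, truth, rng):
    """Return (row, col, label) for one corrective click, or None if pred matches truth.

    label 1 = positive click (add a missed region),
    label 0 = negative click (remove a wrongly included region).
    """
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    false_neg = truth & ~pred   # pixels the prediction missed
    false_pos = pred & ~truth   # pixels the prediction wrongly included
    if not false_neg.any() and not false_pos.any():
        return None
    # Assumption: correct whichever error type covers more pixels
    if false_neg.sum() >= false_pos.sum():
        region, label = false_neg, 1
    else:
        region, label = false_pos, 0
    ys, xs = np.nonzero(region)
    i = rng.integers(ys.size)
    return int(ys[i]), int(xs[i]), label
```

In an interactive loop, each such click would be passed back to the model together with the previous prompts, and the updated prediction would be checked again until the user is satisfied.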

Results from User Studies

In a user study with neuroimaging researchers at MGH, 93.8% of participants preferred ScribblePrompt over SAM for improving segmentations through scribble corrections. For click-based edits, 87.5% favored ScribblePrompt.

Training and Evaluation

ScribblePrompt was trained on 54,000 images drawn from 65 datasets, covering a diverse range of medical image types such as CT scans and X-rays. When evaluated on 12 datasets it had not seen during training, ScribblePrompt outperformed four existing segmentation methods in both efficiency and accuracy.
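
The article does not say how accuracy was measured. One widely used measure of overlap between a predicted and a reference segmentation is the Dice score, sketched below as an assumption about what such an evaluation could look like. The function name `dice_score` and the handling of two empty masks are choices made for this example.

```python
import numpy as np

def dice_score(pred, truth):
    """Dice overlap between two binary masks: 2*|A and B| / (|A| + |B|)."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    total = pred.sum() + truth.sum()
    if total == 0:
        # Convention chosen here: two empty masks count as perfect agreement
        return 1.0
    return float(2.0 * np.logical_and(pred, truth).sum() / total)
```

A score of 1.0 means the two masks match exactly and 0.0 means they share no pixels.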

Importance of ScribblePrompt

As emphasized by senior author Adrian Dalca, a research scientist at CSAIL and an assistant professor at MGH and Harvard Medical School, “Segmentation is a prevalent task in biomedical image analysis, essential in both clinical and research settings. ScribblePrompt has been meticulously designed to offer practical utility for clinicians and researchers, significantly expediting this crucial step.”

Expert Commentary

Bruce Fischl, a professor of radiology at Harvard Medical School and a neuroscientist at MGH, elaborated on the prevailing challenges in medical imaging. He noted that ScribblePrompt enhances manual annotation through an intuitive interface, greatly improving productivity.

Future Presentations and Support

Wong and her colleagues will present ScribblePrompt at the 2024 European Conference on Computer Vision. The work has already received recognition at the DCAMI workshop for its potential clinical impact.