In Short:

MIT researchers have developed a technique called Policy Composition (PoCo) that trains robots to perform multiple tasks using a mix of data sources, such as simulations and human demonstrations. The approach uses generative AI models called diffusion models to combine policies learned from different datasets, and it improved task performance by 20 percent over baseline techniques. The method lets robots adapt to new tasks and environments more efficiently, advancing the field of robotics.

Multipurpose Robot Training Technique Developed by MIT Researchers

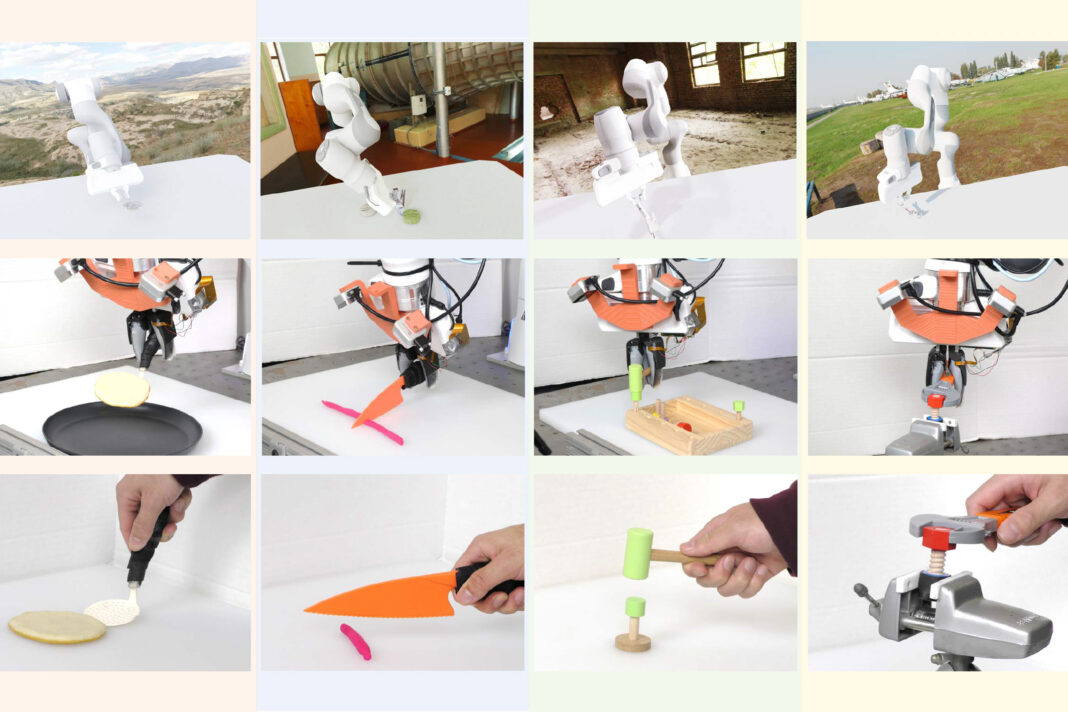

Training robots to use different tools for multiple tasks requires a vast amount of data covering many scenarios. Existing robotic datasets span different modalities, domains, and tasks, which makes it hard to incorporate them efficiently into a single machine-learning model.

Policy Composition Technique:

Researchers at MIT have introduced a technique called Policy Composition (PoCo) that uses a type of generative AI known as diffusion models to combine data from diverse sources. The team trains a separate diffusion model for each task and dataset, then combines the learned policies into a general policy that enables a robot to perform multiple tasks and adapt to new environments.

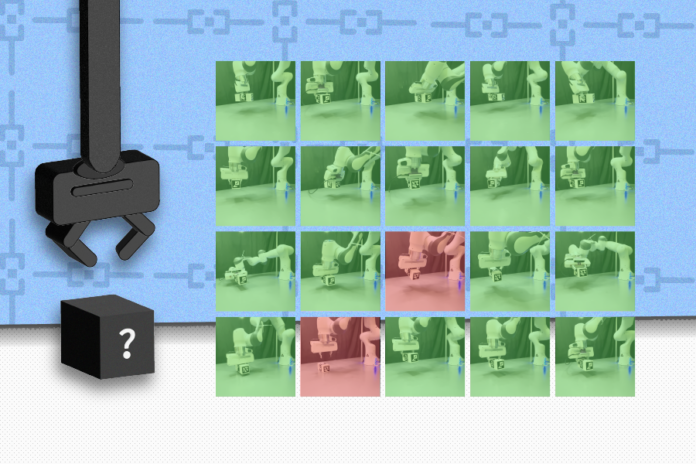

In simulations and real-world experiments, robots trained with PoCo showed a 20 percent improvement in task performance compared with baseline techniques.

Lead author Lirui Wang and his co-authors, a team spanning several disciplines, will present their findings at the Robotics: Science and Systems Conference.

Combining Disparate Datasets:

The researchers used diffusion models to represent different policies learned from various datasets, such as human video demonstrations and robotic-arm teleoperation data. Combining these policies let the team blend the strengths of each individual dataset and achieve better results.
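The article does not spell out how the policies are combined. One common way to compose diffusion models, assumed here rather than taken from the article, is to add their weighted score (noise-prediction) estimates at each sampling step, which targets a density proportional to the product of the individual policies, each raised to its weight. The minimal sketch below swaps the learned networks for hypothetical 1D Gaussian "policies" whose scores are known exactly (one standing in for simulation data, one for teleoperation data) and samples actions from the composition with Langevin dynamics. The names `gaussian_score`, `composed_score`, and `langevin_sample` are illustrative, not taken from PoCo's code.

```python
import numpy as np


def gaussian_score(mean, std):
    """Score (gradient of log-density) of a 1D Gaussian.

    Hypothetical stand-in for a trained diffusion policy's score estimate.
    """
    def score(x):
        return -(x - mean) / std**2
    return score


def composed_score(x, scores, weights):
    # Weighted sum of component scores: the score of the unnormalized
    # density prod_i p_i(x) ** w_i.
    return sum(w * s(x) for s, w in zip(scores, weights))


def langevin_sample(scores, weights, n=20_000, steps=2_000, step_size=1e-3, seed=0):
    # Unadjusted Langevin dynamics: x <- x + h * score(x) + sqrt(2h) * noise.
    rng = np.random.default_rng(seed)
    x = rng.normal(size=n)
    for _ in range(steps):
        x = (x + step_size * composed_score(x, scores, weights)
             + np.sqrt(2 * step_size) * rng.normal(size=n))
    return x


# Two hypothetical policies over a single action dimension.
sim_policy = gaussian_score(mean=0.0, std=1.0)     # e.g. fit to simulation data
teleop_policy = gaussian_score(mean=2.0, std=0.5)  # e.g. fit to teleoperation data

actions = langevin_sample([sim_policy, teleop_policy], weights=[1.0, 1.0])
print(f"mean={actions.mean():.2f}, std={actions.std():.2f}")
```

For these two Gaussians the product has mean 1.6 and standard deviation about 0.447, so the printed statistics should land close to those values. Lowering a weight shifts the composite toward the other data source; a real diffusion policy would apply the combined noise prediction inside its denoising schedule, which this toy omits.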

Benefits of PoCo:

One of the key advantages of the Policy Composition approach is the ability to mix and match policies to get the best results for a specific task. Users can also improve a robot's performance by training an additional diffusion model on new data, without starting from scratch.
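Assuming the same score-based composition (an assumption, not a detail the article confirms), mixing and matching is modular: each trained policy contributes one weighted term, so a model trained on new data is appended as a new term while existing models stay untouched. The minimal sketch below uses hypothetical Gaussian stand-ins, for which the composite's mean and spread have a closed form; `GaussianPolicy` and `ComposedPolicy` are illustrative names, not part of any released code.

```python
from dataclasses import dataclass, field


@dataclass
class GaussianPolicy:
    # Hypothetical stand-in for a trained diffusion policy over a 1D action.
    name: str
    mean: float
    std: float

    def score(self, x: float) -> float:
        return -(x - self.mean) / self.std**2


@dataclass
class ComposedPolicy:
    components: list = field(default_factory=list)  # (policy, weight) pairs

    def add(self, policy: GaussianPolicy, weight: float = 1.0) -> None:
        # New data means a new component; existing components are not retrained.
        self.components.append((policy, weight))

    def score(self, x: float) -> float:
        return sum(w * p.score(x) for p, w in self.components)

    def target(self) -> tuple:
        # A weighted product of Gaussians is Gaussian: weighted precisions add,
        # and the mean is the precision-weighted average of component means.
        precisions = [w / p.std**2 for p, w in self.components]
        total = sum(precisions)
        mean = sum(q * p.mean for q, (p, _) in zip(precisions, self.components)) / total
        return mean, total**-0.5


policy = ComposedPolicy()
policy.add(GaussianPolicy("simulation", mean=0.0, std=1.0))
policy.add(GaussianPolicy("teleoperation", mean=2.0, std=0.5))
print(policy.target())  # mean 1.6, std ~0.447

policy.add(GaussianPolicy("human_video", mean=1.0, std=1.0))
print(policy.target())  # mean 1.5, std ~0.408
```

Raising a component's weight increases its pull on the composite. In this Gaussian toy the composite mean always lies between the component means; real multimodal policies need not behave that simply.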

Beyond improving task performance, PoCo showed potential for refining robot trajectories to achieve better outcomes.

In the future, the researchers aim to apply this technique to more complex tasks and incorporate larger robotics datasets to further enhance performance.

The research was funded by Amazon, the Singapore Defense Science and Technology Agency, the U.S. National Science Foundation, and the Toyota Research Institute, and it marks a step forward for multipurpose robot training.