In Short:

Research from MIT’s CSAIL shows that large language models (LLMs) trained solely on text understand enough of the visual world to draw complex scenes and to self-correct their drawings when prompted. LLMs pick up visual knowledge from descriptions on the internet and express it by writing code that renders objects and scenes. The study used a “vision checkup” to assess these abilities, and its results show potential for training computer vision systems without actual visual data. The researchers plan to explore combining LLMs with other AI tools to improve artistic capabilities.

Language Models Demonstrate Understanding of Visual World, Study Finds

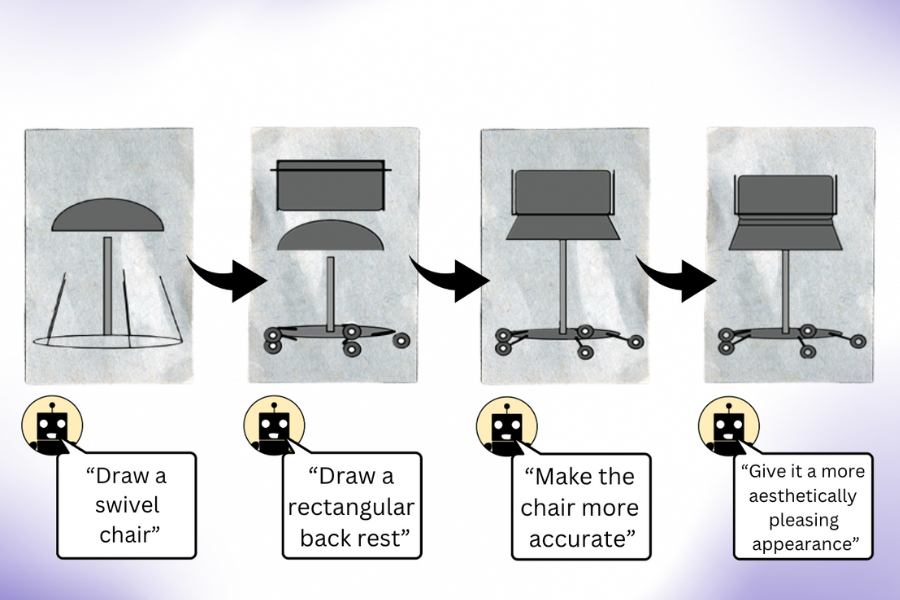

Large language models (LLMs) trained solely on text show a remarkable grasp of the visual world, researchers from MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) have observed. These models can generate complex scenes and objects from text prompts and refine their images with each query.

Visual Aptitude Dataset Evaluation

CSAIL researchers devised a “vision checkup” to test LLMs’ visual knowledge using their Visual Aptitude Dataset. They prompted the models to self-correct their images, gathered the final drafts, and used those drafts to train a computer vision system that identifies the contents of real photos.
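The self-correction step can be pictured as a simple loop: ask for a first draft, then repeatedly hand the draft back and ask for an improved one. The sketch below is only an illustration of that idea. The function names, the prompt wording, and the stub model are assumptions made for this example, not the prompts or code used in the study.

```python
from typing import Callable, List


def self_correct(concept: str,
                 ask_model: Callable[[str], str],
                 rounds: int = 3) -> List[str]:
    """Return every draft for one concept, from first attempt to final.

    `ask_model` is a hypothetical callable that sends a prompt to an LLM
    and returns the drawing code it writes.
    """
    draft = ask_model(f"Write code that draws: {concept}")
    drafts = [draft]
    for _ in range(rounds):
        # Feed the previous draft back and ask for a better version.
        draft = ask_model(
            f"Here is code that draws {concept}:\n{draft}\n"
            "Improve the drawing and return the full code."
        )
        drafts.append(draft)
    return drafts


def make_stub_model() -> Callable[[str], str]:
    """Stand-in for a real LLM: returns a numbered placeholder per call."""
    calls = 0

    def stub(prompt: str) -> str:
        nonlocal calls
        calls += 1
        return f"# drawing code, version {calls}"

    return stub


drafts = self_correct("a chair next to a table", make_stub_model(), rounds=2)
final_draft = drafts[-1]  # the draft that would be kept for training
```

In a setup like this, only `final_draft` for each concept would be collected, and the rendered versions of those drafts would form the synthetic training set.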

Creating Synthetic Data for Training Vision Systems

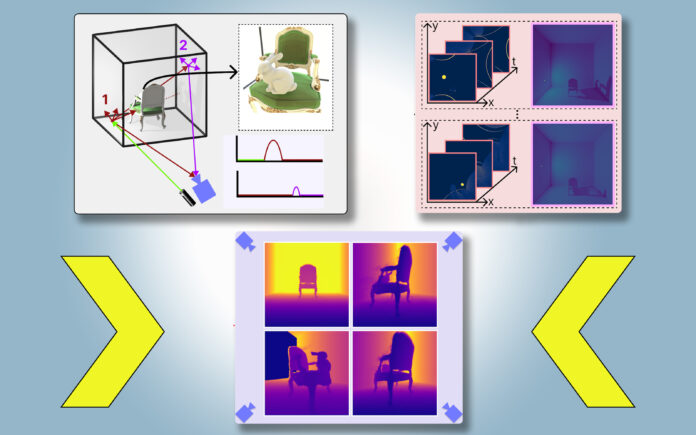

The team used LLMs to generate code for various shapes and scenes, then compiled that code into digital illustrations. The models showed that they could handle spatial relations and even produce unusual visual concepts such as a car-shaped cake and a glowing light bulb.
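To make “code for shapes and scenes” concrete, here is the kind of short drawing program a text-only model could plausibly write. It is a hand-written illustration, not output from the study: the scene, the function name, and the choice of SVG as the output format are all assumptions made for this example.

```python
import xml.etree.ElementTree as ET


def draw_house_with_sun(width: int = 200, height: int = 150) -> str:
    """Return an SVG drawing of a house with a sun above and to its right."""
    svg = ET.Element("svg", xmlns="http://www.w3.org/2000/svg",
                     width=str(width), height=str(height))
    # Ground: a green strip along the bottom edge.
    ET.SubElement(svg, "rect", x="0", y=str(height - 20),
                  width=str(width), height="20", fill="green")
    # House body: a rectangle standing on the ground.
    ET.SubElement(svg, "rect", x="40", y=str(height - 80),
                  width="70", height="60", fill="peru")
    # Roof: a triangle resting on top of the house body.
    roof = f"35,{height - 80} 75,{height - 115} 115,{height - 80}"
    ET.SubElement(svg, "polygon", points=roof, fill="firebrick")
    # Sun: a circle placed in the upper right, past the house's right wall.
    ET.SubElement(svg, "circle", cx=str(width - 35), cy="30",
                  r="18", fill="gold")
    return ET.tostring(svg, encoding="unicode")


svg_text = draw_house_with_sun()
```

Writing `svg_text` to a file ending in `.svg` and opening it in a browser shows the picture. The spatial relations mentioned above (“on top of”, “to the right of”) appear in the code as nothing more than coordinate choices.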

Enhancing Computer Vision Systems

By combining LLMs’ hidden visual knowledge with other AI tools, such as diffusion models, the researchers believe they can improve the accuracy and artistic capabilities of computer vision systems. Pairing the two could lead to better image editing and recognition.

Challenges and Future Research

While LLMs displayed creativity in drawing various concepts, they sometimes struggled to recognize the same concepts in real photos. The researchers plan to further explore the origins of LLMs’ visual knowledge and aim to train an improved vision model in the future.

Conclusion

The study, co-authored by researchers at MIT CSAIL, presents a novel approach to evaluating how well generative AI models can be used to train computer vision systems. The team’s findings, supported by various grants and fellowships, will be presented at the IEEE/CVF Computer Vision and Pattern Recognition Conference.