In Short:

Researchers at MIT and the MIT-IBM Watson AI Lab are training computers to find specific actions in instructional videos using only the videos and their transcripts, without requiring hand-labeled data. Their model analyzes both spatial and temporal information, which lets it identify actions more accurately in longer videos that contain multiple activities. The technique could streamline online learning and help with health care diagnostics. The researchers plan to extend the approach further.

Teaching AI to Pinpoint Actions in Videos

The internet provides a plethora of instructional videos ranging from cooking tips to life-saving techniques. However, pinpointing specific actions in lengthy videos can be time-consuming. In an effort to streamline this process, scientists are working on teaching computers to identify actions in videos. This would allow users to simply describe the action they’re looking for, and an AI model would locate it in the video.

A new approach by researchers at MIT and the MIT-IBM Watson AI Lab trains a model to perform this task, known as spatio-temporal grounding, using only videos and their automatically generated transcripts. The model is trained to understand an unlabeled video by analyzing spatial details (object locations) and temporal context (when actions occur).
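To make the task concrete, the sketch below shows what spatio-temporal grounding produces: given a text query, it picks a time span (temporal) and an image region (spatial). This is a toy illustration, not the researchers' model. It assumes the query and every region of every frame have already been turned into feature vectors by some encoder, and the function names and the `threshold` parameter are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Vector = Sequence[float]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def ground_query(query: Vector, frames: List[List[Vector]], threshold: float) -> Tuple[int, int, int]:
    """Hypothetical grounding step: return (start_frame, end_frame, region).

    frames[t][r] is an assumed, precomputed feature vector for region r of frame t.
    """
    # Spatial step: score every region of every frame against the query.
    scores = [[cosine(query, region) for region in frame] for frame in frames]
    # Temporal step: a frame's score is the score of its best-matching region.
    frame_scores = [max(row) for row in scores]
    peak = max(range(len(frames)), key=lambda t: frame_scores[t])
    # Grow a contiguous segment around the peak while frames stay above the threshold.
    start = end = peak
    while start > 0 and frame_scores[start - 1] >= threshold:
        start -= 1
    while end < len(frames) - 1 and frame_scores[end + 1] >= threshold:
        end += 1
    # Report the best-matching region in the peak frame.
    region = max(range(len(scores[peak])), key=lambda r: scores[peak][r])
    return start, end, region
```

For example, with a query vector `[1.0, 0.0]` and four two-region frames where only frames 1 and 2 contain a region pointing in the query's direction, `ground_query` returns `(1, 2, 0)`: the action spans frames 1 to 2 and sits in region 0.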

Efficient Methodology

Compared with other approaches, this method is more accurate at identifying actions in longer videos that contain multiple activities. Because the model trains on spatial and temporal information at the same time, it becomes better at recognizing each one individually.

The technique could streamline online learning, and it also holds potential for health care settings, such as quickly locating key moments in videos of diagnostic procedures.

Lead author Brian Chen explains that the model performs best when it learns spatial and temporal information separately and then combines them, rather than trying to encode both at once. The findings will be presented at the Conference on Computer Vision and Pattern Recognition.

Global and Local Learning

Traditional approaches rely on humans annotating the start and end times of specific tasks, labels that are expensive to produce and can be ambiguous. The new method instead trains on unlabeled instructional videos and their text transcripts from platforms like YouTube, eliminating the need for labor-intensive labeling.
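How can a model learn from transcripts alone? One widely used family of objectives is contrastive learning: a video clip and the transcript sentence spoken at the same moment are pulled together in embedding space, while mismatched pairs are pushed apart. The article does not say which loss the MIT model uses, so the InfoNCE-style sketch below is an assumed, generic example; the function name `info_nce` and the `temperature` value are illustrative, and the embeddings are assumed to be L2-normalized.

```python
import math
from typing import List, Sequence


def info_nce(video: List[Sequence[float]], text: List[Sequence[float]], temperature: float = 0.1) -> float:
    """Mean InfoNCE loss over a batch where video[i] and text[i] come from the same moment.

    The matching transcript sentence is the positive; every other sentence in the
    batch serves as a negative. No hand-made labels are needed, only the pairing.
    """
    n = len(video)
    total = 0.0
    for i in range(n):
        # Similarity of clip i to every sentence in the batch, scaled by the temperature.
        logits = [sum(a * b for a, b in zip(video[i], text[j])) / temperature for j in range(n)]
        # Numerically stable log-sum-exp for the softmax denominator.
        m = max(logits)
        log_denominator = m + math.log(sum(math.exp(l - m) for l in logits))
        # Negative log-probability of picking the correct sentence for clip i.
        total += log_denominator - logits[i]
    return total / n
```

The loss is close to zero when each clip is most similar to its own sentence and large when clips line up with the wrong sentences, which is the signal that replaces human-annotated start and end times in this kind of setup.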

The researchers train the model to learn two kinds of representations: global representations, which capture the high-level actions across the entire video, and local representations, which capture fine-grained details in the specific regions where an action takes place. Together, these give the model a more complete understanding of the actions in a video.
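As a rough illustration of the difference between the two views, the sketch below derives both from the same per-region features. Mean pooling for the global summary is an assumption made for simplicity, not a detail reported for the MIT model, and `global_and_local` is a hypothetical helper.

```python
from typing import Dict, List, Sequence, Tuple


def mean_vector(vectors: List[Sequence[float]]) -> List[float]:
    """Element-wise mean of equal-length vectors."""
    n = len(vectors)
    return [sum(column) / n for column in zip(*vectors)]


def global_and_local(frames: List[List[Sequence[float]]]) -> Tuple[List[float], Dict[Tuple[int, int], List[float]]]:
    """Build a global and a local view from frames[t][r], the feature of region r in frame t.

    Global: one vector summarizing the whole video (assumed here to be a plain mean).
    Local: one vector per (frame, region) pair, keeping where and when each detail occurs.
    """
    all_regions = [region for frame in frames for region in frame]
    global_vec = mean_vector(all_regions)
    local_vecs = {
        (t, r): list(region)
        for t, frame in enumerate(frames)
        for r, region in enumerate(frame)
    }
    return global_vec, local_vecs
```

In a design like this, the global vector could be matched against the transcript as a whole to decide which actions occur in the video, while the local vectors could be matched against individual phrases to decide where and when each action happens.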

A New Benchmark

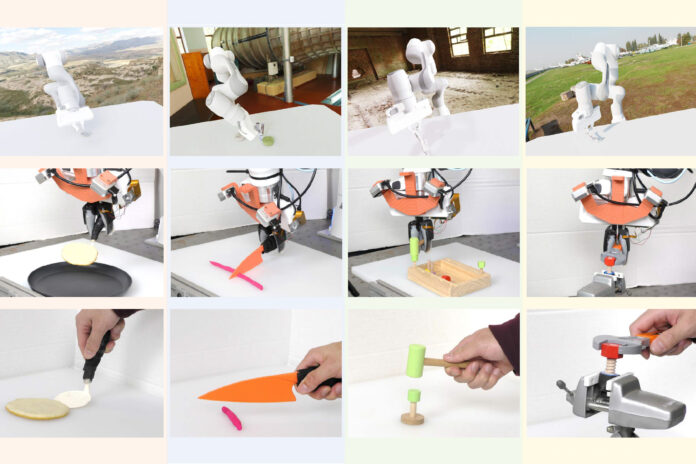

To evaluate their approach, the researchers built a benchmark dataset specifically designed for testing models on longer, unedited videos. They also introduced a new annotation technique that works well for multistep actions: instead of drawing a box around each important object, annotators mark the point where objects intersect, such as where a knife edge cuts a tomato.
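Point annotations like these can be scored with a simple check: does the model's highest-scoring location land close to the marked point? The sketch below implements that kind of "pointing"-style accuracy. It is an assumed metric for illustration; the benchmark's actual scoring rule is not described here, and the function name and `radius` parameter are hypothetical.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def pointing_accuracy(heatmaps: List[List[List[float]]], points: List[Point], radius: float) -> float:
    """Fraction of frames whose highest-scoring pixel lies within `radius` of the annotated point.

    heatmaps[k][y][x] is the model's score for pixel (x, y) in evaluation frame k;
    points[k] is the annotated (x, y) intersection point for that frame.
    """
    hits = 0
    for heatmap, (px, py) in zip(heatmaps, points):
        height, width = len(heatmap), len(heatmap[0])
        # Locate the pixel with the highest score (first one wins on ties).
        best_y, best_x = max(
            ((y, x) for y in range(height) for x in range(width)),
            key=lambda yx: heatmap[yx[0]][yx[1]],
        )
        # Count a hit if that pixel is close enough to the human-marked point.
        if math.hypot(best_x - px, best_y - py) <= radius:
            hits += 1
    return hits / len(heatmaps)
```

A single point is cheaper to annotate than a bounding box, and it directly rewards a model for attending to the moment of contact between objects rather than to the objects in general.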

Their approach was more accurate than other AI techniques at pinpointing actions, and it was better at focusing on human-object interactions, such as the precise moment a pancake is flipped onto a plate.

Next, the researchers plan to incorporate audio data, taking advantage of the correlations between actions and the sounds objects make. The research is funded, in part, by the MIT-IBM Watson AI Lab.