In Short:

ChatGPT, OpenAI’s chatbot, originally ran only in the cloud because the model behind it was so large. Now Microsoft’s Phi-3-mini, a much smaller model, can run locally on devices such as laptops and smartphones. Microsoft reports that it performs comparably to GPT-3.5 on benchmark tests. The company’s announcement of a new multimodal Phi-3 model shows further progress toward efficient, capable AI applications that don’t depend on the cloud.

When ChatGPT was released in November 2022, it could be accessed only through the cloud because the model behind it was enormous.

Rapid Advancements in AI Models

Today, a similarly capable AI program called Phi-3-mini can run on a MacBook Air without overheating. This shows how quickly AI models are being refined to become more efficient and compact. It also suggests that scaling up isn’t the only way to make machines more intelligent.

Phi-3-mini is one of a series of small AI models from Microsoft. It is designed to run on smartphones but also works well on laptops. I tested it on a laptop and accessed it from an iPhone using an app called Enchanted, which offers a chat interface similar to the official ChatGPT app.

Comparison with GPT-3.5

In a paper describing the Phi-3 models, Microsoft researchers claim that Phi-3-mini compares favorably with OpenAI’s GPT-3.5. They base this claim on its scores on standard AI benchmarks that measure common sense and reasoning.

Latest Announcement from Microsoft

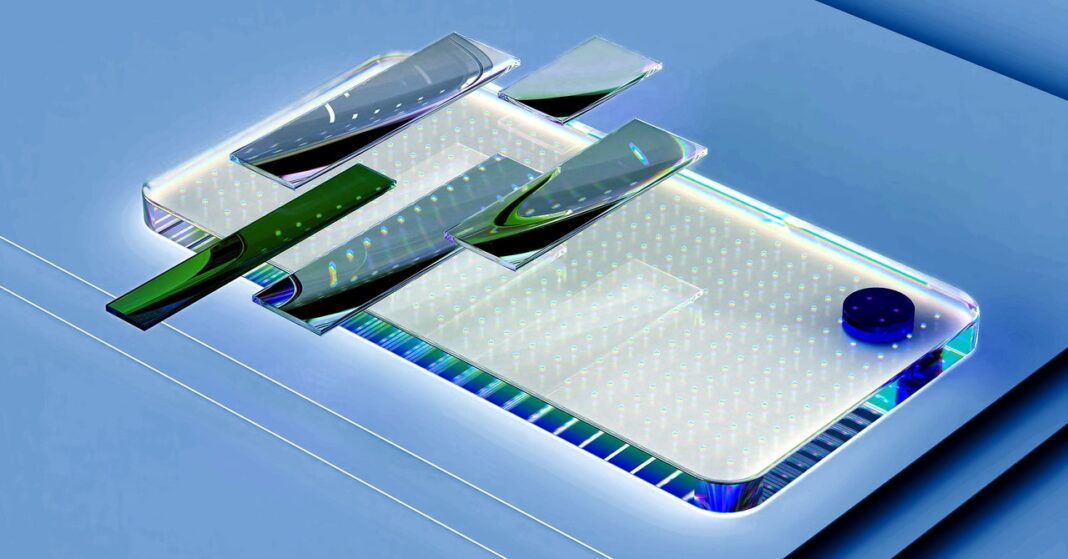

At Build, its annual developer conference, Microsoft recently introduced a new “multimodal” Phi-3 model capable of handling audio, video, and text. The announcement follows similar moves by OpenAI and Google, which are building AI assistants on multimodal models accessed through the cloud.

New Possibilities with Local AI Models

Microsoft’s compact Phi family of AI models points to a shift toward AI applications that rely less on the cloud. Running models locally could enable new use cases, including applications that are more responsive and more private. (For example, Microsoft’s Recall feature uses on-device AI to make everything a user has done on their PC searchable.)

Sébastien Bubeck, a researcher at Microsoft, says the Phi models were developed to test whether being more selective about training data could improve an AI model’s abilities.

Large language models like GPT-4 or Gemini from Google