In Short:

This month's research roundup covers three new papers on instruction finetuning and parameter-efficient finetuning with LoRA in large language models. The first questions the common practice of masking instructions when calculating the loss and finds that not masking can perform better in some settings. Another compares LoRA with full finetuning, and a third introduces MoRA, a high-rank updating method for parameter-efficient finetuning that outperforms LoRA in some scenarios. Together, these findings offer practical guidance for making language model training more efficient and effective.

Latest Research in Large Language Models (LLMs)

This month, we delve into three new papers focusing on instruction finetuning and parameter-efficient finetuning using LoRA in Large Language Models (LLMs). As someone who works extensively with these methods, I always find it intriguing to see fresh research that offers practical insights.

Current Developments

This month’s article may be shorter than usual because I am finishing the final chapter of my book, “Build a Large Language Model From Scratch.” I am also preparing for a free virtual ACM Tech Talk on LLMs this Wednesday, and everyone interested is welcome to join!

Instruction Tuning With Loss Over Instructions

One paper that stood out this month is “Instruction Tuning With Loss Over Instructions,” which challenges the conventional instruction-finetuning practice of masking the instruction when calculating the loss. Let’s take a closer look at its findings.

Overview of Instruction Finetuning

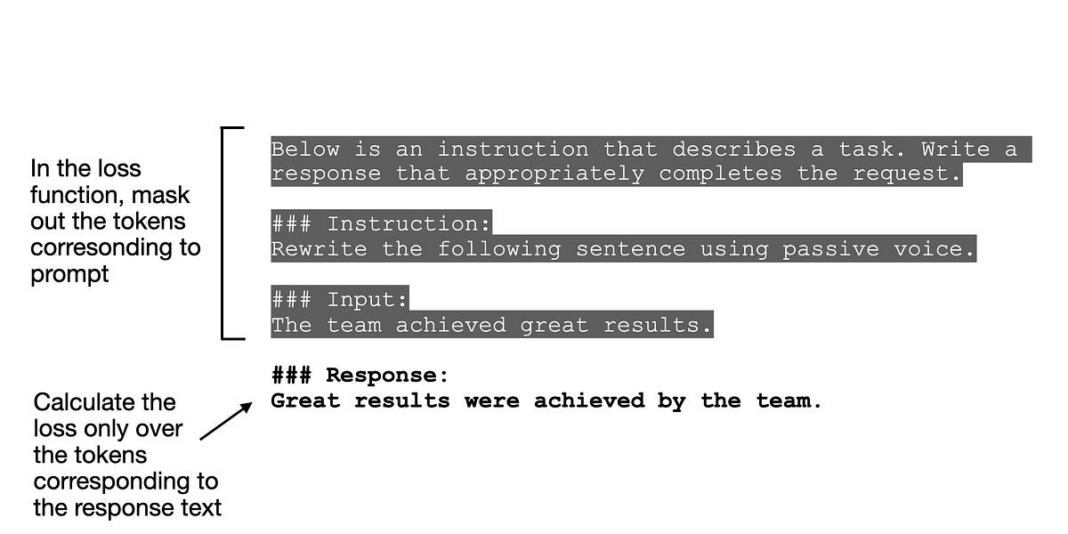

Instruction finetuning trains a pretrained LLM to generate responses that follow specific instructions (e.g., “Summarize this article” or “Translate this sentence”). A common practice during instruction finetuning is to mask out the instruction tokens so that only the response tokens contribute to the loss.

Popular LLM libraries such as LitGPT and Axolotl support this kind of input masking, and Hugging Face’s TRL library implements it through a dedicated dataset collator, DataCollatorForCompletionOnlyLM.
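To make the masking concrete, here is a minimal Python sketch of how the training labels might be built for a single example. It assumes the common PyTorch convention that a target value of -100 is skipped by the cross-entropy loss (the default ignore_index of torch.nn.CrossEntropyLoss), and that the model shifts labels by one position internally for next-token prediction, as Hugging Face's causal LM classes do. The function name build_labels and the token IDs are hypothetical.

```python
IGNORE_INDEX = -100  # targets with this value are skipped by PyTorch's cross-entropy by default


def build_labels(prompt_ids, response_ids, mask_instruction=True):
    """Concatenate prompt and response token IDs and build the matching labels.

    With mask_instruction=True, every prompt position is labeled IGNORE_INDEX,
    so only response tokens contribute to the loss. With False, the labels are
    a copy of the inputs and the instruction tokens are trained on as well.
    The one-position shift for next-token prediction is assumed to happen
    inside the model.
    """
    input_ids = list(prompt_ids) + list(response_ids)
    if mask_instruction:
        labels = [IGNORE_INDEX] * len(prompt_ids) + list(response_ids)
    else:
        labels = list(input_ids)
    return input_ids, labels


prompt = [101, 2023, 2003, 1037]  # hypothetical token IDs for the instruction
response = [3437, 102]            # hypothetical token IDs for the response

ids, masked = build_labels(prompt, response)
_, unmasked = build_labels(prompt, response, mask_instruction=False)
print(masked)    # [-100, -100, -100, -100, 3437, 102]
print(unmasked)  # [101, 2023, 2003, 1037, 3437, 102]
```

The paper's question boils down to the mask_instruction flag: keep it True (the standard approach) or set it to False and let the instruction tokens enter the loss too.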

Research Findings

The paper “Instruction Tuning With Loss Over Instructions” questions the effectiveness of masking instructions during finetuning. Surprisingly, the study finds that not masking the instructions can outperform masking, although the size of the benefit depends on the lengths of the instructions and responses in the dataset and on the number of training examples.

The authors suggest that including the instructions in the loss helps most when responses are short or when only a limited number of training examples is available, possibly because it reduces overfitting.
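A quick back-of-the-envelope sketch shows how strongly masking shrinks the set of tokens that enter the loss when responses are short. It assumes a hypothetical sequence with a 90-token instruction and a 10-token response and made-up per-token probabilities; it only illustrates which tokens are averaged into the loss and is not the paper's explanation of its results. The function name mean_token_loss is my own.

```python
import math

IGNORE_INDEX = -100  # positions with this label are excluded from the loss


def mean_token_loss(token_probs, labels):
    """Average negative log-likelihood over positions not labeled IGNORE_INDEX.

    token_probs[i] is the probability the model assigned to the correct token
    at position i. Returns (mean loss, number of positions that were counted).
    """
    losses = [-math.log(p) for p, y in zip(token_probs, labels) if y != IGNORE_INDEX]
    return sum(losses) / len(losses), len(losses)


probs = [0.5] * 90 + [0.25] * 10                # made-up probabilities: 90 instruction, 10 response tokens
labels_masked = [IGNORE_INDEX] * 90 + [1] * 10  # instruction masked (1 is a placeholder token ID)
labels_unmasked = [1] * 100                     # every position kept

loss_m, n_m = mean_token_loss(probs, labels_masked)
loss_u, n_u = mean_token_loss(probs, labels_unmasked)
print(n_m, round(loss_m, 4))  # 10 1.3863
print(n_u, round(loss_u, 4))  # 100 0.7625
```

With masking, only 10 of the 100 positions contribute to the loss; without it, all 100 do. This is one intuitive way to see why the choice could matter more when responses are short relative to the instructions.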

Analysis of Low-Rank Adaptation (LoRA)

A second paper, “LoRA Learns Less and Forgets Less,” compares LoRA with full finetuning on programming and mathematics tasks. It finds that while LoRA can underperform full finetuning in the targeted domains, it better preserves the base model’s capabilities outside those domains, acting as a stronger form of regularization.
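For reference, the standard LoRA formulation keeps the pretrained weight W (d × k) frozen and learns a low-rank update B A, with B of shape d × r and A of shape r × k, scaled by alpha / r; B is usually initialized to zeros so that training starts from the unmodified model. The pure-Python sketch below, which uses lists instead of a tensor library to stay self-contained, shows the merged weight and why LoRA trains so few parameters. The function names are my own, and the matrix sizes are illustrative assumptions, not the settings used in the paper.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def lora_merged_weight(W, A, B, alpha, r):
    """Return W + (alpha / r) * B @ A.

    W: d x k frozen pretrained weight; B: d x r and A: r x k are the trainable
    LoRA factors. The product B @ A has rank at most r.
    """
    delta = matmul(B, A)
    scale = alpha / r
    return [[w + scale * dw for w, dw in zip(w_row, d_row)]
            for w_row, d_row in zip(W, delta)]


def param_counts(d, k, r):
    """Trainable parameters for one d x k matrix: (full finetuning, LoRA)."""
    return d * k, r * (d + k)


W = [[1, 0], [0, 1]]  # toy 2 x 2 "pretrained" weight
B = [[1], [2]]        # 2 x 1
A = [[3, 4]]          # 1 x 2
print(lora_merged_weight(W, A, B, alpha=2, r=1))  # [[7.0, 8.0], [12.0, 17.0]]
print(param_counts(4096, 4096, 8))                # (16777216, 65536)
```

For a 4096 × 4096 matrix, a rank-8 adapter trains about 0.4% of the parameters that full finetuning would update, which helps explain why LoRA tends to stay closer to the base model.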

Introduction of MoRA Method

The third paper introduces MoRA, which replaces LoRA’s low-rank update with a high-rank update for parameter-efficient finetuning. The authors report that MoRA performs slightly better than LoRA in continued pretraining, while the two methods appear to complement each other in other settings.
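To see what “high-rank” buys at the same parameter budget, here is a rough sketch. It assumes MoRA’s parameter-matching rule, as I understand it from the paper, of a trainable square matrix M with side r_hat = ⌊√((d + k) r)⌋, so that M has about as many parameters as a rank-r LoRA adapter. The paper describes several non-parameterized compression and decompression operators; the chunk-and-concatenate operator in apply_square_update is a simplified, hypothetical stand-in chosen for illustration, not the paper’s exact method.

```python
import math


def mora_rank(d, k, r):
    """Side length r_hat of the square matrix matched to a rank-r LoRA budget.

    Assumes the rule r_hat = floor(sqrt((d + k) * r)); math.isqrt computes
    exactly that floor for non-negative integers.
    """
    return math.isqrt((d + k) * r)


def apply_square_update(M, x):
    """Apply an r_hat x r_hat matrix M to vector x, one chunk at a time.

    Hypothetical compression: split x into consecutive chunks of length r_hat.
    Hypothetical decompression: concatenate the per-chunk outputs.
    Assumes len(x) is a multiple of r_hat and that input and output sizes match.
    """
    r_hat = len(M)
    out = []
    for start in range(0, len(x), r_hat):
        chunk = x[start:start + r_hat]
        out.extend(sum(m * c for m, c in zip(row, chunk)) for row in M)
    return out


r_hat = mora_rank(4096, 4096, 8)
print(r_hat, r_hat ** 2, 8 * (4096 + 4096))              # 256 65536 65536
print(apply_square_update([[1, 2], [3, 4]], [1, 0, 0, 1]))  # [1, 3, 2, 4]
```

With d = k = 4096 and r = 8, a LoRA update has rank at most 8, whereas M is 256 × 256 with the same 65,536 trainable parameters, which is the intuition behind calling MoRA’s update high-rank.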

Final Thoughts

Overall, MoRA looks like a promising addition to the parameter-efficient finetuning toolbox. It is not yet a definitive replacement for LoRA, but its results, particularly in continued pretraining, make it an approach worth watching.

Thank you for your continued interest in the latest advancements in Large Language Models!